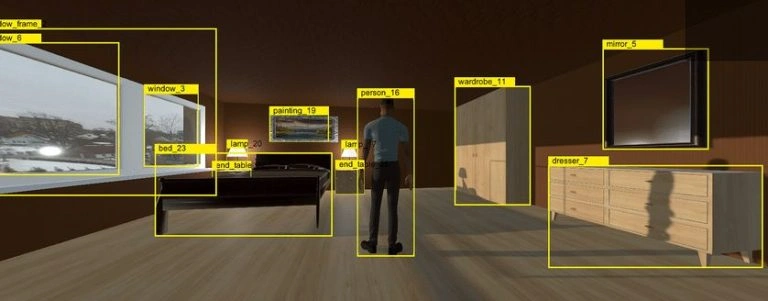

Bounding boxes are rectangular region labels used for computer vision (CV) tasks.

In supervised machine learning (ML), an object detection model uses bounding box labels to learn about the contents of an image.

The bounding box labels objects or features of interest to the model, whether a person, traffic sign, vehicle, or virtually anything else.

The model learns about the content inside the bounding box to predict the presence of similar objects when exposed to new unseen data.

Bounding boxes are defined by two points, usually the top-left and bottom-right corners of the box. These simple rectangular labels are widely used for object detection and localization tasks, providing a straightforward way to describe the position and size of objects in an image.
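As a rough illustration of the two-corner definition, the sketch below stores a box as its top-left and bottom-right corners and converts it to the normalized center format that YOLO-style label files commonly use. The `Box` class and `to_center_format` helper are hypothetical names chosen for this example, not part of any particular library.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates, origin at the image's top-left."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def width(self) -> float:
        return self.x_max - self.x_min

    @property
    def height(self) -> float:
        return self.y_max - self.y_min


def to_center_format(box: Box, img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """Convert corners to (x_center, y_center, width, height), each scaled to 0..1."""
    return (
        (box.x_min + box.x_max) / 2 / img_w,
        (box.y_min + box.y_max) / 2 / img_h,
        box.width / img_w,
        box.height / img_h,
    )


box = Box(x_min=100, y_min=50, x_max=300, y_max=250)
print(box.width, box.height)                          # 200 200
print(to_center_format(box, img_w=400, img_h=500))    # (0.5, 0.3, 0.5, 0.4)
```

Both representations carry the same information, so the choice usually comes down to what the training framework expects.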

This is everything you need to know about bounding boxes.

Uses of Bounding Boxes

Bounding boxes are used to label data for computer vision tasks, including:

Object Detection: Bounding boxes identify and localize objects within an image, such as detecting pedestrians, cars, and animals. They represent object locations and are compatible with many machine-learning algorithms. Object detection models like YOLO learn from a labeled dataset to predict bounding boxes on new, unseen data.

Object Tracking: Similarly, in video models, bounding boxes are utilized to track the movement and position of objects over time, enabling applications like video surveillance, sports analytics, and autonomous vehicles, among others.

It’s worth highlighting that object detection differs from other CV tasks like segmentation.

In object detection, the goal is to identify and locate objects within the image. There’s no explicit need to delineate the exact boundaries between objects. Read our guide to computer vision for a comparison.

Best Practices for Labeling Bounding Boxes

Every supervised computer vision task requires an annotated training dataset.

Annotations are applied to objects within images, which act as training data. The model learns about the contents of bounding boxes to predict bounding boxes on new, unseen data. In other words, labeled bounding boxes supply the model with a ‘target’ to learn from.

Labeling bounding boxes accurately is essential for training high-performing object detection models.

Aya Data’s expertise in bounding boxes is proven by the many successful ML projects we’ve been involved in.

Here are some best practices to follow when annotating images with bounding boxes:

1: Consistent Labeling

It’s crucial to ensure that the same object class is consistently labeled with the same name across all images in the dataset.

Consistency is essential for training models that can accurately recognize objects.
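One simple way to check label consistency is to audit class names before training. The sketch below is only illustrative: the `CANONICAL` class list and `ALIASES` mapping are made-up examples that would need to be replaced with a project's own taxonomy.

```python
from collections import Counter

# Hypothetical taxonomy and alias table; adapt these to your own project.
CANONICAL = {"car", "truck", "pedestrian"}
ALIASES = {"cars": "car", "automobile": "car", "person": "pedestrian"}


def normalize_label(raw):
    """Trim, lower-case, and map known aliases onto canonical class names."""
    name = raw.strip().lower()
    return ALIASES.get(name, name)


def audit_labels(labels):
    """Return (counts per normalized label, labels that fall outside the taxonomy)."""
    counts = Counter(normalize_label(label) for label in labels)
    unknown = {name for name in counts if name not in CANONICAL}
    return counts, unknown


counts, unknown = audit_labels(["Car", "cars ", "person", "Truck", "lorry"])
print(counts["car"])   # 2
print(unknown)         # {'lorry'}
```

Labels reported as unknown can then be reviewed by a person and either mapped to an existing class or added to the taxonomy.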

2: Tight Boxes

Labelers must label bounding boxes tightly around the object, ensuring that the box’s edges touch the object’s boundaries without cutting off any part of it.

Tight bounding boxes provide better localization information for learning algorithms.
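To make "tight" concrete: if a pixel mask of the object happened to be available (an assumption for this sketch, since box annotation rarely comes with masks), the tightest box would simply be the extent of the object's pixels. The `tight_box` helper below is a hypothetical illustration of that definition.

```python
def tight_box(mask):
    """Return (x_min, y_min, x_max, y_max) of the truthy pixels, or None if empty.

    `mask` is a list of rows; coordinates are inclusive pixel indices.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return (cols[0], rows[0], cols[-1], rows[-1])


mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(tight_box(mask))  # (1, 1, 3, 2)
```

Every edge of the resulting box touches at least one object pixel, and no object pixel falls outside it.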

3: Overlapping Boxes

In cases where objects are overlapping or occluded, drawing individual bounding boxes around each visible object is usually recommended.

Polygon annotations may be a better choice for images with high proportions of overlapping or occluded objects.

In any case, it’s important to avoid drawing a single box around the entire group of objects, as this will make it difficult for the model to distinguish between them.

4: Occlusion and Truncation

Similarly, for partially visible or truncated objects, it’s often recommended to draw bounding boxes around the visible parts only, although conventions vary from model to model.

Labeling only the visible region provides more accurate localization information and helps the model handle occlusion and truncation.

5: Label All Instances

Ensure that every instance of the object class is labeled in each image, even if the instances are small or partially visible.

This consistency helps the model learn to detect objects under various conditions that mimic reality.

6: Leverage Modern Labeling Tools

Use an efficient and user-friendly labeling tool to annotate bounding boxes.

Many tools provide features like automatic box snapping, zooming, and keyboard shortcuts, making the labeling process faster and more accurate.

Bounding Boxes Vs. Segmentation

Bounding boxes and image segmentation labeling techniques, such as instance segmentation and semantic segmentation, serve different purposes in computer vision tasks.

Choosing one over the other depends on the specific problem you are trying to solve.

Here are some scenarios when you might prefer bounding boxes over semantic segmentation:

1: Object Detection and Localization: If the primary goal is to detect and localize objects in an image, bounding boxes are more suitable, as they provide a simple and efficient representation of an object’s location and extent.

2: Faster Annotation: Bounding boxes are easier and quicker to label than semantic segmentation, which requires pixel-level annotation. This can be a significant advantage when creating large datasets.

3: Computational Efficiency: Object detection models that use bounding boxes, such as YOLO and Faster R-CNN, often have lower computational requirements than semantic segmentation models. Bounding box-based models can be faster and more memory-efficient, making them more suitable for real-time applications or deployment on devices with limited resources.

4: Less Detailed Information Required: If your application does not require detailed information about object boundaries or separation between objects of the same class, bounding boxes can be sufficient. For instance, in applications like pedestrian or vehicle detection, knowing the approximate location and size of the objects might be enough.

5: Model Simplicity: Bounding box-based models can be simpler to implement and train compared to semantic segmentation models, which often require more complex architectures and training strategies.

Segmentation

On the other hand, you might prefer semantic segmentation in scenarios where:

1: Detailed Object Boundaries: You need precise information about object boundaries, for example, in medical image analysis or satellite imagery, where accurate delineation of structures is crucial.

2: Pixel-Level Classification: Your application requires classifying each pixel in the image, such as in land cover classification or road segmentation tasks.

3: Instance Separation: If you need to separate instances of the same object class at the pixel level, instance segmentation (a related technique that gives each object its own mask) is more suitable, as overlapping bounding boxes cannot show which pixels belong to which object.

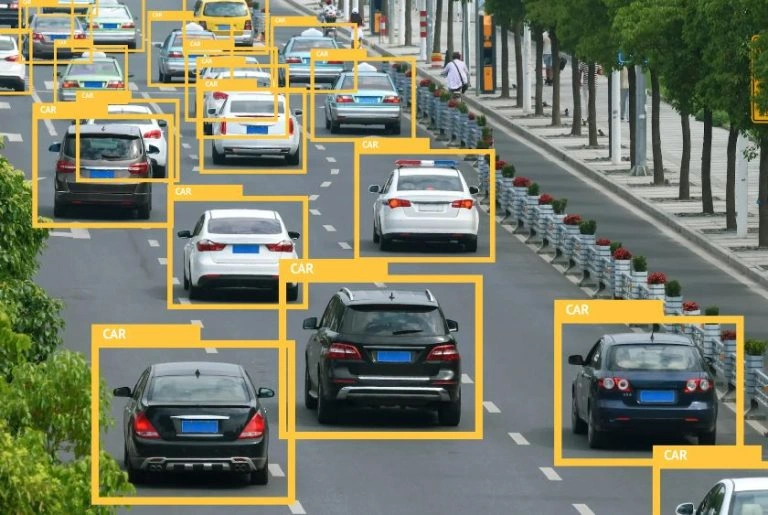

Case Study: Vehicle Detection

Vehicle detection and tracking are essential components of traffic monitoring systems.

Bounding box annotation is often used to annotate and detect vehicles in traffic surveillance images or videos. Simple traffic monitoring is typically well suited to object detection, as the model’s purpose is to identify objects (cars, trucks, etc.).

Moreover, object detection with bounding boxes is fast and efficient, which makes it suitable for real-time use. For instance, a study published in Applied Intelligence highlights YOLO’s real-time capability, which makes it well suited to traffic monitoring.

1: Data Collection

A large dataset of images and videos must be collected from various traffic surveillance cameras to develop a robust vehicle detection model.

The dataset should include diverse scenarios, such as different weather conditions, lighting situations, and levels of traffic congestion. This variability helps the model perform accurately across a range of conditions.

While various open-source datasets are available, their suitability depends on the application. Objects the model will encounter in production may be absent from them, and the training data needs to be representative of the model’s intended real-life use.

For example, Aya Data helped a client augment their street scene dataset with electric scooters, a relatively new addition to the road and absent from many training datasets. Read the case study here.

2: Annotation Process

Bounding boxes are drawn around each vehicle in the images and videos, encapsulating the entire object within a rectangular box.

Although bounding boxes do not capture the exact shape of the object, they are sufficient for the task of vehicle detection, where precise object boundaries are not critical.

3: Model Training

Once the dataset is annotated with bounding boxes, it’s split into training and validation subsets.
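A minimal sketch of such a split is shown below. It assumes each image is identified by a filename and that an 80/20 split is wanted; the `split_dataset` helper and the `frame_*.jpg` names are hypothetical. Splitting by image keeps all of an image's boxes on the same side of the split.

```python
import random


def split_dataset(image_ids, val_fraction=0.2, seed=0):
    """Shuffle image IDs reproducibly and return (train_ids, val_ids)."""
    ids = sorted(image_ids)               # fixed starting order for reproducibility
    random.Random(seed).shuffle(ids)      # seeded shuffle, independent of global state
    n_val = int(len(ids) * val_fraction)
    return ids[n_val:], ids[:n_val]


train_ids, val_ids = split_dataset([f"frame_{i:04d}.jpg" for i in range(10)])
print(len(train_ids), len(val_ids))  # 8 2
```

For video footage, it may be safer to split by camera or clip rather than by individual frame, since near-identical neighboring frames in both subsets can make validation scores look better than they are.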

Next, a deep learning model, typically a convolutional neural network (CNN), is trained using the annotated dataset. The CNN architecture is designed to detect and classify vehicles, such as cars, trucks, buses, and motorcycles, within the images and videos.

4: Evaluation and Results

The performance of the trained model is evaluated using standard metrics, such as precision, recall, and F1-score.
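As a small worked example, these three metrics can be computed from counts of true positives (correct detections), false positives (spurious detections), and false negatives (missed vehicles). The helper name and the counts below are made up for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1


# Illustrative counts: 80 correct detections, 20 spurious, 10 missed.
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=10)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
# precision=0.800 recall=0.889 f1=0.842
```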

Evaluating Bounding Box Predictions

Once the model is trained, it’s necessary to evaluate the quality of its bounding box predictions.

There are dozens of ways to do this, but here are four of the most common metrics:

1: Intersection over Union (IoU): IoU measures the overlap between the predicted bounding box and the ground truth box, normalized by their union. IoU values range from 0 to 1, with higher values indicating better localization accuracy.

2: Precision and Recall: Precision measures the proportion of true positive predictions (correct bounding boxes) out of all positive predictions, while recall measures the proportion of true positive predictions out of all actual positive instances. The F1-score is the harmonic mean of precision and recall. It combines both metrics into a single value, providing a balanced evaluation of the model’s performance. A higher F1-score indicates better performance.

3: Average Precision (AP): AP is the average of precision values calculated at different recall levels. It is a popular metric used to evaluate the performance of object detection models, as it considers both precision and recall.

4: Mean Average Precision (mAP): mAP is the mean of the average precision values calculated for each class in a dataset. A single value summarizes the model’s performance across all classes. A higher mAP score indicates better performance.
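The metrics above can be sketched in a few lines of Python. This is a simplified illustration rather than a benchmark implementation: it scores a single image, matches each detection greedily to the best unmatched ground truth box, and uses a non-interpolated form of AP, whereas benchmarks such as PASCAL VOC and COCO accumulate detections over the whole dataset and use interpolated variants. All function names here are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_threshold=0.5):
    """Non-interpolated AP for one class; detections are (score, box) pairs."""
    matched, hits = set(), []
    # Visit detections from most to least confident.
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if idx not in matched and overlap > best_iou:
                best_iou, best_idx = overlap, idx
        hit = best_idx is not None and best_iou >= iou_threshold
        if hit:
            matched.add(best_idx)  # each ground truth box can be matched once
        hits.append(hit)
    # Average the precision observed at each true positive.
    total, tp = 0.0, 0
    for rank, hit in enumerate(hits, start=1):
        if hit:
            tp += 1
            total += tp / rank
    return total / len(ground_truth) if ground_truth else 0.0


def mean_average_precision(per_class):
    """per_class maps a class name to (detections, ground_truth)."""
    aps = [average_precision(d, g) for d, g in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (20, 20, 30, 32))]
print(round(iou((0, 0, 10, 10), (5, 5, 15, 15)), 4))  # 0.1429
print(round(average_precision(dets, gts), 4))         # 0.8333
```

In the example, the first and third detections match ground truth boxes while the second is a false positive, so precision is 1/1 at the first hit and 2/3 at the second, giving an AP of about 0.83.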

Frequently Asked Questions

What does bounding box mean in AI?

In AI, especially in computer vision, a bounding box is a rectangular box that outlines the location of an object within an image, used for object detection tasks.

How does bounding box regression work?

Bounding box regression adjusts the initial bounding boxes predicted by a model so that they fit the objects more accurately. It does this by minimizing the error between predicted and actual object boundaries, typically through gradient descent.
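The article does not name a specific formulation, so the sketch below assumes one common choice: the offset parameterization used by the R-CNN family, where the model predicts shifts and log-scale changes relative to a reference (anchor) box, and a smooth L1 loss measures the remaining error. The function names are hypothetical.

```python
import math


def encode(anchor, target):
    """Regression targets for boxes given as (x_center, y_center, width, height)."""
    xa, ya, wa, ha = anchor
    x, y, w, h = target
    return ((x - xa) / wa, (y - ya) / ha, math.log(w / wa), math.log(h / ha))


def decode(anchor, deltas):
    """Apply predicted deltas to an anchor to recover a box."""
    xa, ya, wa, ha = anchor
    tx, ty, tw, th = deltas
    return (xa + tx * wa, ya + ty * ha, wa * math.exp(tw), ha * math.exp(th))


def smooth_l1(diff):
    """Smooth L1 loss on one coordinate: quadratic near zero, linear elsewhere."""
    d = abs(diff)
    return 0.5 * d * d if d < 1 else d - 0.5


anchor = (50.0, 50.0, 20.0, 40.0)
target = (55.0, 48.0, 30.0, 40.0)
deltas = encode(anchor, target)
print(deltas[0], deltas[1])  # 0.25 -0.05
```

During training, the loss would be computed between the deltas the model predicts and the encoded targets, and gradient descent would reduce it; decoding turns the predicted deltas back into a box at inference time.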

Is bounding box a segmentation?

No, a bounding box is a form of object localization, not segmentation. Segmentation involves pixel-level classification, providing a more detailed outline of the object’s shape than a rectangular box can.

Summary: Bounding Boxes in Computer Vision

Bounding boxes are rectangular region annotations used for supervised computer vision (CV).

The bounding box annotates objects within the image, which can be anything from a person to a plant or vehicle. The supervised model learns about the content inside the bounding box to predict objects when exposed to unseen data.

Bounding boxes are defined by two points, usually the top-left and bottom-right corners of the box.

They remain the cornerstone of labeling for object detection, vital to many CV applications.

To learn about how Aya Data can assist you with bounding box annotation, contact us here.