The progress of AR and VR has been steady rather than explosive, contrary to what many predicted at the turn of the millennium.

However, while most people have not yet set foot inside the Metaverse, we’ve witnessed a host of revolutionary AR and VR technologies that sit somewhat ‘behind the scenes’ of our everyday lives.

Studies show that investment in AR and VR is unrelenting, and developers and manufacturers are slowly overcoming some of the practical barriers to AR and VR adoption with the help of AI, ML, and data science.

This article investigates how AI/ML supports a new generation of AR/VR technologies.

The Integration of AR/VR and AI

Augmented and virtual reality (AR/VR, sometimes collectively referred to as extended reality, or XR) and artificial intelligence (AI) are strong allies.

The resource-heavy technologies required for AR/VR benefit from sophisticated, scalable computing techniques. AI and ML enable those techniques.

AI excels at tracking objects, creating detailed models of the 3D world, and making decisions using complex data, which all assist in the creation of next-gen AR/VR.

Case Study: Google Lens

Google Lens uses computer vision technology to analyze the content of images.

Using Google Lens, users can supply images containing text, objects, landmarks, plants, etc., and the app provides relevant information, such as the name of a building, statue, or plant. Google Lens also enables advanced image searches.

At a glance, Google Lens seems simple, but there’s a lot going on behind the scenes.

Google Lens is trained on a large dataset of images and their associated labels, allowing it to learn the features and patterns characteristic of different objects, text, and landmarks.

First, Google Lens identifies and extracts relevant information before using convolutional neural networks (CNNs) to analyze the image and identify objects or recurrent neural networks (RNNs) to recognize text. Then, as users interact with the app and provide ongoing feedback, the system learns from the user’s preferences and behavior and adapts its results accordingly.
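To make the CNN step more concrete, here is a minimal sketch of the sliding-window operation that convolutional layers are built on. It is not Google Lens code: the `conv2d` function, the 4x4 `patch`, and the hand-picked `vertical_edge` kernel are all illustrative assumptions, and a real CNN learns its kernels from labeled data and stacks many such layers.

```python
# Minimal 2D convolution (no padding, stride 1) in plain Python.
# Illustrative only: as in most deep learning libraries, the kernel is
# applied without flipping (strictly speaking, a cross-correlation).

def conv2d(image, kernel):
    """Slide `kernel` over `image` and return the resulting feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# Hypothetical 4x4 grayscale patch: dark on the left, bright on the right.
patch = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked vertical-edge kernel (a trained CNN would learn its own).
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(patch, vertical_edge)
for row in feature_map:
    print(row)  # each row is [0, 18, 0]: the response peaks at the edge
```

The feature map is zero over the flat regions and large where dark meets bright, which is the kind of low-level feature that later layers combine into object-level predictions.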

Google Lens couldn’t exist without AI/ML, which links the AR sensor (a camera) with a back-end of models that help analyze images and search for related information from the internet.

The Future of AR and VR

Research into AR and VR technologies has fuelled development in many other areas, such as building driverless vehicles.

The goal is to bridge the sensory world and digital world as seamlessly as possible, ideally to the point that we can barely distinguish between them.

However, building advanced AR and VR poses a pertinent question: how do we bridge the sensory world – the world we sense – with the digital world? And how do we achieve this in real-time?

Science fiction imagines robots that understand sensory data with practically zero latency, much the same as biological beings. But in reality, this is exceedingly complex, especially when we ramp up the complexity of the AI models involved.

“A complex model with high accuracy generally incurs high prediction latency when deployed in real-time systems” – TechTarget

AI researchers are trying to solve this with lean models, foveated rendering and other innovative techniques designed to boost processing while slashing latency. However, it turns out that humans are incredibly efficient at noticing latencies of just milliseconds.

In fact, studies show that, if AR and VR technologies are just a few milliseconds slower than what we’re used to – e.g. our nervous system’s inherent latency – then we can develop headaches, nausea and other symptoms collectively known as ‘cybersickness’.

Cybersickness is a type of motion sickness induced by exposure to AR and VR.
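One way to reason about this is as a latency budget. The sketch below adds up the stages between sensing and display and compares the total with a target. Every number here is a made-up placeholder rather than a measurement, the stage names are hypothetical, and the 20 ms target is an assumed figure chosen for illustration, not a threshold stated in this article.

```python
# Illustrative latency budget for one frame of an AR/VR pipeline.
# All timings are invented placeholders, not measurements.

def frame_time_ms(refresh_hz):
    """Time available per frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def total_latency_ms(stages):
    """Sum per-stage latencies (in ms) for a strictly sequential pipeline."""
    return sum(stages.values())


# Hypothetical stage timings in milliseconds.
stages = {
    "sensor_capture": 4.0,
    "model_inference": 9.0,
    "rendering": 6.0,
    "display_scanout": 5.0,
}

# Assumed comfort target; the article does not specify a threshold.
BUDGET_MS = 20.0

total = total_latency_ms(stages)
overshoot = total - BUDGET_MS
slowest = max(stages, key=stages.get)

print(f"Frame time at 90 Hz: {frame_time_ms(90):.1f} ms")
print(f"End-to-end latency: {total:.1f} ms (budget {BUDGET_MS:.1f} ms)")
if overshoot > 0:
    print(f"Over budget by {overshoot:.1f} ms; slowest stage: {slowest}")
```

In this toy example the model is the slowest stage, which mirrors the trade-off quoted above: a more complex model tends to eat more of the budget.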

So, how do we tighten the gap between complex sensory data and digital systems?

Bridging Real and Virtual Environments

To build next-gen AR, VR and related technologies, we need to find efficient methods of ingesting complex sensory data and mapping it to software at low latency.

Ideally, sensors and the models behind them must match or exceed the speed of the human nervous system. Studies show that humans are exceptionally good at noticing small latency issues, or lag. Building AI systems that match or exceed our ability to understand complex multi-sensory data is an important milestone for AR, VR and other real-time AI systems, like self-driving cars, that need to react to stimuli as quickly as biological systems.

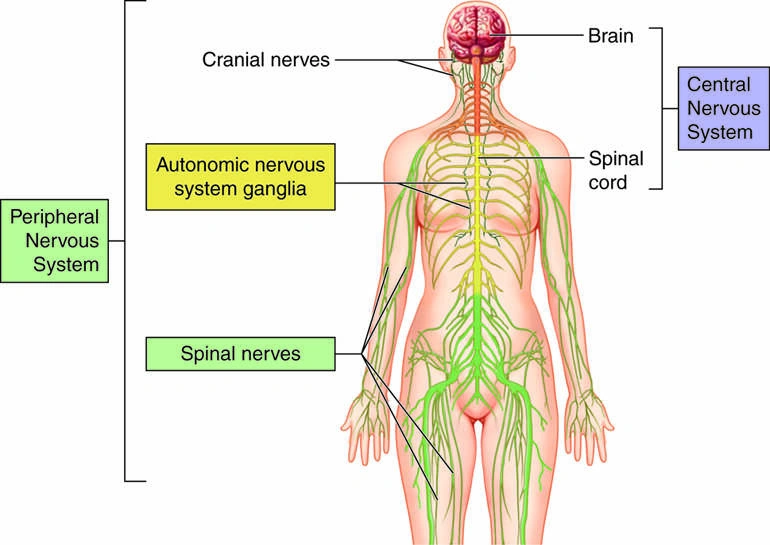

Our nervous system bridges our internal experiences with external stimuli, and it’s incredibly efficient, with processing times ranging from around 3ms for hearing to 50ms for sight and touch. Shortly after a stimulus enters the nervous system, the human brain processes that information to identify an image, understand a noise, determine a scent, etc.

The human nervous system is exceptional at handling multi-sensory data at low latency. Reaction times across nature are even better than our own – a hummingbird’s wings flap 1000 times a minute and a fly can evade a swatter travelling at 100mph. AI and ML are extensively modeled on biological systems because they’re quite simply the most impressive systems we know to exist.

For example, as you read this passage, you’re understanding the text, but can also possibly hear, smell, feel and taste other stimuli. Since reading that last line, your nervous system and brain are already hard at work focusing on those stimuli. It didn’t take long – just a few milliseconds.

Studies show that data moves from the peripheral nervous system to the central nervous system in as little as 3ms. The brain then processes data in two stages:

- Unconscious stage: Here, the brain processes features, e.g., the color or shape of an object. The nervous system feeds the brain data at a much faster rate than it can process, and initially, there’s virtually no conscious perception. In other words, the brain is bombarded with a stream of information that it begins to unravel unconsciously before we become conscious of it.

- Conscious stage: In the conscious stage, the brain renders features to produce a complete conscious “picture.” At this point, we become aware of the stimulus.

This entire process can take up to 400ms, which is actually quite a long time, but in this window, we can consciously render an extremely complex stream of sensory data across all five senses.

The question is, how do we build systems that can react to the physical world with speed and accuracy similar to that of a biological brain?

Narrowing the Gap Between Stimulus and Reaction

Developing methods for processing ultra-high-bandwidth data from sophisticated sensors at ultra-low latency is a priority for AR, VR and other technologies like driverless cars and UAVs.

Imagine the process of catching a ball. In the short time it takes for the human brain to register the ball flying at us, we’re already moving our hand to catch or block it. This process involves a phenomenal quantity of data processed in mere milliseconds.

To us, it’s innate, but it wasn’t until around 2011 that robots were trained to effectively catch balls.

Now, raise the stakes and imagine the ball is actually a person on a bike hurtling towards a driverless car.

In that situation, we’re trusting the driverless car to:

- See the biker

- Understand what the biker is

- Decide that, at current trajectory, the biker will collide with the car

- Act rapidly, to avoid a collision
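The "decide" and "act" steps above can be sketched with some simple geometry. The example below assumes the "see" and "understand" steps have already produced a position and velocity for the biker relative to the car, and that both keep moving in a straight line at constant speed. The function names, the safety radius, and the reaction budget are all hypothetical, and a real autonomous driving stack is far more involved.

```python
import math


def closest_approach(rel_pos, rel_vel):
    """Return (time_s, distance_m) of closest approach for constant velocities.

    rel_pos: biker position minus car position, in metres (x, y).
    rel_vel: biker velocity minus car velocity, in m/s (x, y).
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0:
        return 0.0, math.hypot(px, py)  # no relative motion
    # Time that minimises |rel_pos + t * rel_vel|, clamped to the future.
    t = max(0.0, -(px * vx + py * vy) / speed_sq)
    return t, math.hypot(px + vx * t, py + vy * t)


def collision_imminent(rel_pos, rel_vel, safety_radius_m, reaction_budget_s):
    """True if a predicted near-collision falls within the reaction budget."""
    t, dist = closest_approach(rel_pos, rel_vel)
    return dist < safety_radius_m and t <= reaction_budget_s


# Hypothetical scene: biker 20 m ahead, closing at 10 m/s, dead centre.
head_on = collision_imminent((20.0, 0.0), (-10.0, 0.0), 1.5, 2.5)
# Same closing speed, but the biker's path passes 5 m to the side.
passing = collision_imminent((20.0, 5.0), (-10.0, 0.0), 1.5, 2.5)
print(head_on, passing)
```

With these numbers the head-on case leaves two seconds before impact, so every millisecond spent on perception and inference comes directly out of the time left to brake or steer.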

Currently, building AI to make these decisions in the required timeframe is an issue. A recent study into driverless car safety published in Nature highlights that autonomous vehicle technologies largely fall short of what’s required to perceive high-speed or fast-moving threats.

In an article by the Guardian, Matthew Avery from Thatcham Research said that 80% of the tasks completed by driverless cars are easy and the remaining 20% are difficult, with the last 10% being really difficult.

“The last 10% is really difficult… That’s when you’ve got, you know, a cow standing in the middle of the road that doesn’t want to move,” Matthew Avery told the Guardian.

New technologies like LIDAR and neuromorphic imaging are changing this, and “tightly coupled” sensing and AI/ML technologies are equipping technology with state-of-the-art imaging combined with low-latency processing. But it’s a winding road ahead.

Neuromorphic Cameras and Foveated Rendering

One of the key factors creating latency in AR/VR systems is rendering practically useless data.

Humans only sense what’s around us. We know there’s a world beyond what we ourselves can sense, but we don’t have to render it until we sense it.

We can apply this concept to AR and VR by creating systems that are locally reactive to our own senses. Video games already employ this, e.g. they only render the environment that the character can ‘see’ in the game.

The tricky part for AR/VR is that software systems need to track our eye movements to understand precisely what we’re looking at, and our eyes move extremely quickly. We can change our direction of vision several times in one second, with each rapid movement, called a saccade, lasting around 50ms.

Neuromorphic cameras are modeled on the human eye and offer a potential solution for tracking our eyes’ rapid movements. This unlocks a new generation of AR/VR technologies that use advanced sensing technologies to track human eye movement and render precisely the environment we’re looking at.

What are Neuromorphic Cameras?

Unlike typical digital cameras that use shutters, neuromorphic cameras react to local changes in brightness, much the same as the human eye – hence the name. Neuromorphic cameras are excellent at tracking super-fast movements, such as the movement of the human eye.
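The idea of reacting only to local brightness changes can be sketched in a few lines. The example below is a simplification: it compares two whole frames, whereas a real neuromorphic sensor has each pixel fire independently and asynchronously. The `generate_events` function, the threshold, the use of log brightness, and the 3x3 frames are all assumptions made for illustration.

```python
import math


def generate_events(prev_frame, next_frame, threshold=0.2):
    """Emit (row, col, polarity) wherever log-brightness changes enough.

    polarity is +1 for brighter and -1 for darker. Unchanged pixels emit
    nothing, which is where the bandwidth saving comes from.
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (before, after) in enumerate(zip(prev_row, next_row)):
            # Adding 1 avoids log(0) for fully dark pixels.
            delta = math.log(after + 1) - math.log(before + 1)
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


# Hypothetical 3x3 brightness grids: a bright dot moves one pixel right.
frame_a = [
    [10, 10, 10],
    [10, 200, 10],
    [10, 10, 10],
]
frame_b = [
    [10, 10, 10],
    [10, 10, 200],
    [10, 10, 10],
]

events = generate_events(frame_a, frame_b)
print(events)  # [(1, 1, -1), (1, 2, 1)]
```

Only two of the nine pixels produce any output, one going dark and one going bright. A static scene produces no events at all, which is why this style of sensing suits fast, sparse motion such as eye movement.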

By tracking eye movement, AR/VR software can render precisely the digital images that users are looking at – otherwise known as foveated rendering.

Combining neuromorphic cameras with VR helps lower the rendering workload, easing latency bottlenecks. Resources are used more efficiently when directed to the foveal field of vision rather than the blend or peripheral regions.

Instead of rendering an entire environment all at once, future AR/VR technology will likely render only the portion the user is looking at – the only portion that matters.
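As a rough sketch of how foveated rendering saves work, the example below assigns each screen tile to the foveal, blend, or peripheral region based on its angular distance from the gaze point, then estimates the shading cost relative to rendering everything at full resolution. The region boundaries, resolution scales, and tile grid are hypothetical values picked for illustration, not figures from any particular headset.

```python
import math

# Hypothetical region boundaries (degrees from the gaze point) and the
# fraction of full resolution rendered in each region.
FOVEAL_DEG = 10.0
BLEND_DEG = 25.0
RESOLUTION_SCALE = {"foveal": 1.0, "blend": 0.5, "peripheral": 0.25}


def region_for(tile_deg, gaze_deg):
    """Classify a screen tile by its angular distance from the gaze point."""
    eccentricity = math.hypot(tile_deg[0] - gaze_deg[0],
                              tile_deg[1] - gaze_deg[1])
    if eccentricity <= FOVEAL_DEG:
        return "foveal"
    if eccentricity <= BLEND_DEG:
        return "blend"
    return "peripheral"


def relative_pixel_cost(tiles_deg, gaze_deg):
    """Pixels shaded with foveation, as a fraction of full-resolution cost."""
    cost = 0.0
    for tile in tiles_deg:
        scale = RESOLUTION_SCALE[region_for(tile, gaze_deg)]
        cost += scale * scale  # scale applies to both width and height
    return cost / len(tiles_deg)


# A coarse 9x9 grid of tile centres spanning -40..40 degrees on each axis.
tiles = [(x, y) for x in range(-40, 41, 10) for y in range(-40, 41, 10)]
gaze = (0.0, 0.0)  # in practice this would come from the eye tracker
print(f"Relative shading cost: {relative_pixel_cost(tiles, gaze):.2f}")
```

With these assumed settings, the foveated frame shades roughly 16% of the pixels a full-resolution frame would. The catch is that `gaze` must be updated fast enough to keep up with saccades, which is where fast eye tracking comes in.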

AI, AR, and VR Use Cases

While a new generation of AR/VR is likely imminent, AI, AR, and VR are already combining forces to enable breakthrough technologies.

Here are some use cases for AI combined with AR/VR:

1: Manufacturing

In manufacturing and industrial settings, businesses combine AI with AR/VR to improve maintenance processes and training.

By using image recognition and deep learning technologies, AI can help engineers identify issues with components and equipment. Issues detected by the AI can prompt instructions and guidance.

There are many excellent examples of this, like an AR app that identifies faults with circuit boards and Ford’s FIVE (Ford Immersive Vehicle Environment) visual inspection system, which is almost 8 years old.

2: Retail

Retail companies are utilizing AI-powered AR/VR shopping experiences that use pop-up coupons, product info and other tips that appear in a digital environment while a shopper is navigating the aisles of a store.

Virtual showrooms and fitting rooms are becoming ubiquitous and are considerably more effective than they once were.

Retailers are pouring investment into their own AR/VR tools that immerse customers in interactive retail environments like IKEA’s VR showroom.

Similarly, in architecture, it’s now possible to digitally design rooms or buildings and experience them through VR before construction begins.

3: Military and Emergency Services

In the military and emergency services, AI-powered VR is being used to guide people through simulated hazardous environments before deployment into the real thing.

Simulations using AR and ‘digital twins’ – digital copies of the real world – enable experimentation in safe, non-destructive environments.

For example, space agencies like NASA simulate space and extra-terrestrial environments using complex datasets collected through digital modeling and empirical measurements.

4: Entertainment and Gaming

Entertainment and gaming have a long history of AR/VR tech innovation. Processing ultra-high bandwidth environments with ultra-low latency is a key issue in building the next generations of high-definition AR/VR games, but AI-powered foveated rendering is changing that.

The Oculus Rift was among the first VR headsets to transition immersive gaming from sci-fi to reality. Meta’s headsets are pushing VR to the mass market.

While AR/VR gaming is yet to take off, accessible, fully interactive and high-definition Metaverse-like environments are likely imminent.

5: Security and Intelligence

Smart glasses are already rolling out to some police forces and security teams, allowing them to identify individuals via facial recognition in much the same way that cameras read car number plates.

Employing facial recognition in high-stakes areas such as law enforcement is proving difficult, but such models will no doubt evolve to become more accurate and reliable.

Data Labeling For AR and VR

In a general sense, in VR, the environment is digitally rendered from scratch. While VR might incorporate elements of AR, e.g. by recreating parts of the real world inside the virtual world via the use of cameras, the environment is largely self-contained and controlled.

On the other hand, AR environments ingest data from the real world, such as a street, manufacturing plant, or shopping aisle. AR requires data from various sources, such as LIDAR, video, images, and audio.

The AI and ML models in the back-end of AR applications need to be taught how to identify and analyze incoming data, which is why data labeling and annotation are essential.

For example, you can train an ML model to locate a road’s boundaries or identify characters written on a menu for automatic translation. To create these models, human-labeled training data is fed into the models. Similarly, AR applications that read text require AI-enabled optical character recognition (OCR) to quickly pull features from written text and provide on-demand insights.
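To give a sense of what human-labeled training data can look like for the menu example, here is a minimal sketch of a single text annotation with a basic quality check. The `TextAnnotation` class, its field names, and the sample values are hypothetical and do not represent any particular labeling tool or export format.

```python
from dataclasses import dataclass


@dataclass
class TextAnnotation:
    """One human-labeled text region in an image (pixel coordinates)."""
    image_id: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int
    transcription: str
    language: str

    def is_valid(self, image_width, image_height):
        """Basic quality check: box is non-empty and inside the image."""
        return (
            0 <= self.x_min < self.x_max <= image_width
            and 0 <= self.y_min < self.y_max <= image_height
            and bool(self.transcription.strip())
        )

    def normalized_box(self, image_width, image_height):
        """Box scaled to 0..1 so it is independent of image resolution."""
        return (
            self.x_min / image_width,
            self.y_min / image_height,
            self.x_max / image_width,
            self.y_max / image_height,
        )


# Hypothetical label for a menu photo that is 1000 x 800 pixels.
label = TextAnnotation("menu_001.jpg", 100, 200, 500, 280, "Soupe du jour", "fr")
print(label.is_valid(1000, 800))        # True
print(label.normalized_box(1000, 800))  # (0.1, 0.25, 0.5, 0.35)
```

An OCR or translation model would be trained on many thousands of records like this one, so automated checks of this kind are a cheap first filter before human review.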

Data labeling for AR/VR is crucial for building new-generation technologies.

Specialist data labeling teams like Aya Data produce high-quality datasets for cutting-edge applications. Our annotators work with complex data such as LIDAR, video, audio, and text and can supply you with the datasets you need to train sophisticated AR and VR models.

Contact us today to discover how we can support your data labeling projects.