Data is one of the most valuable resources on the planet today.

The World Economic Forum estimated that this seemingly intangible resource was worth some $3 trillion in 2017. The machine learning market specifically is expected to grow from $22.17 billion in 2022 to $209 billion in 2029.

While structured data contained in tables and databases has been around for decades, the fields of data science and machine learning are evolving to take advantage of unstructured data such as images, video, text, and audio. Unstructured data is not modeled or defined by a preset schema.

Here, we’re going to discuss how to collect and prepare one of the main types of unstructured data for machine learning projects: visual data.

Unstructured Data: Image and Video

The vast majority of the data contained in the world is unstructured (around 90% by some estimations).

Unstructured data lives in digital systems in the form of text, images, video, and audio, and we humans take our understanding of it for granted. Whether it's a video, an image, an audio clip, or text, interpreting unstructured data comes naturally to us.

One of the goals of machine learning is to transfer this human intuition to machines so we can teach them to understand unstructured data. This way, we can combine machines' computational power with human-like intelligence.

Computer Vision and Neural Networks

As neural networks take center stage in AI and ML, building computational applications that solve unstructured data problems has become easier.

With neural networks and deep learning, it's no longer necessary to build complex traditional machine learning pipelines around hand-engineered features. Instead, it's possible to show the model a large, labeled dataset and have it learn the necessary features itself, with enough accuracy to generalize to unseen data.

One of the most important forms of unstructured data for machine learning is image data.

This is a guide to collecting data for computer vision projects.

Computer Vision Use Cases

1: Computer Vision For Autonomous Vehicles

Unmanned drones and satellites armed with computer vision technology collect and analyze everything from climate information to deforestation and changing land use for research, science, and conservation purposes.

Cameras and audio sensors have to capture information from all directions and feed it to advanced AI systems that process the data in real time.

Autonomous vehicles are not a new concept, but the complexity of building them has only recently been fully appreciated, especially as modern urban environments are constantly changing. For example, Aya Data helped a client respond to the rise of e-scooters on the road, an edge case their CV training workflow had not anticipated.

2: Computer Vision For Facial Recognition

Computer vision has made matching faces against databases much simpler. By extracting features from new images and matching them to images in a database, it's possible to check a person's facial data automatically and authenticate their identity.
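To make the matching step concrete, here is a minimal sketch. It assumes each face has already been turned into a fixed-length feature vector (an "embedding") by some face-encoder network, which is not shown, and compares a new embedding with enrolled ones using cosine similarity. The function names, the toy three-dimensional vectors and the 0.8 threshold are all hypothetical; real systems tune the threshold on validation data.

```python
import numpy as np

def cosine_scores(query: np.ndarray, gallery: np.ndarray) -> np.ndarray:
    """Cosine similarity between one query embedding and each row of `gallery`."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    return g @ q

def match_face(query, gallery, ids, threshold=0.8):
    """Return the ID of the most similar enrolled face, or None if no score reaches `threshold`."""
    scores = cosine_scores(query, gallery)
    best = int(np.argmax(scores))
    return ids[best] if scores[best] >= threshold else None

# Hypothetical enrolled embeddings (real ones typically have hundreds of dimensions).
gallery = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
ids = ["person_a", "person_b"]
print(match_face(np.array([0.9, 0.1, 0.0]), gallery, ids))  # person_a
```

Returning None below the threshold matters for authentication: an unknown face should be rejected rather than matched to whoever happens to be closest.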

Law enforcement uses facial recognition technology to identify potential criminals from images and video, and this process is being wholly or partially automated. For example, Aya Data labeled 5,000 hours of video to identify shoplifters from CCTV footage. The client used the footage to train an early-warning model that automatically detected shoplifting as it happened.

3: Computer Vision in AR and VR

Computer vision plays a crucial role in developing AR and VR applications. For example, cameras can map the environment around them so that digital images can be overlaid on the screen at the correct size and position within the 3D space.

AR and VR are used for unmanned vehicle control, autonomous robotics, and next-gen gaming.

4: Computer Vision In Healthcare

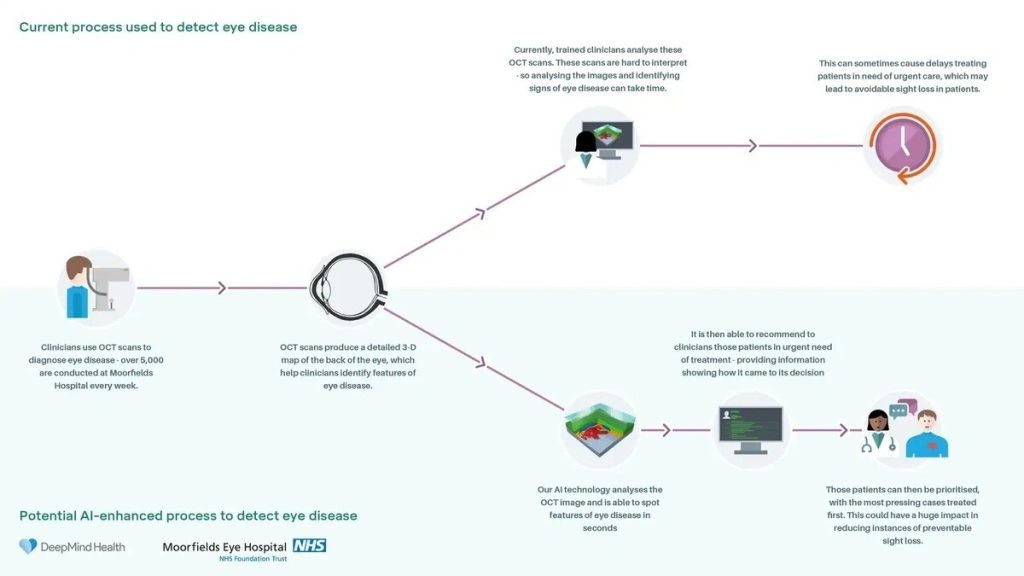

Computer vision has been transformative in healthcare. For example, a CV model built by Google DeepMind can read optical coherence tomography (OCT) scans and other medical images to accurately diagnose some 50 eye diseases.

DeepMind computer vision in healthcare

Computer vision algorithms can reduce human error in health-critical decision-making and allow skilled medical professionals to concentrate on treatment rather than time-consuming diagnostics.

Collecting Visual Data for Machine Learning Projects

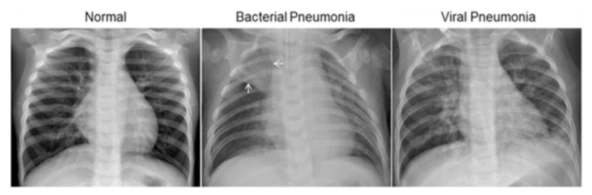

Visual data is required to train any supervised computer vision model. Images and videos are a form of unstructured data that are ‘shown’ to algorithms to train the model.

To train computer vision models, images or videos need to be labeled or annotated to instruct algorithms to identify specific features in an image.

Computer vision revolves around pattern recognition. CV algorithms can interpret the relationships between the components of an image, from the colors to the pixels themselves.

Labels form the basis of supervised training for computer vision – they provide the pattern to recognize.
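As an illustration of what a label can look like, the sketch below stores one bounding box in the style of the COCO annotation format, where `bbox` is `[x, y, width, height]` in pixels measured from the top-left corner. The IDs and coordinates are made up, and other labeling tools use different layouts (for example, corner coordinates), hence the small conversion helper.

```python
# One bounding-box label in the style of the COCO annotation format.
# "bbox" is [x, y, width, height] in pixels from the image's top-left corner.
annotation = {
    "image_id": 1,          # hypothetical image ID
    "category_id": 3,       # hypothetical class ID, e.g. 3 = "bird"
    "bbox": [120.0, 45.0, 80.0, 60.0],
}

def bbox_to_corners(bbox):
    """Convert [x, y, width, height] to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)

print(bbox_to_corners(annotation["bbox"]))  # (120.0, 45.0, 200.0, 105.0)
```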

So how do you go about locating visual data for machine learning projects?

There are three main approaches:

- Buy or find a relevant dataset online. This might be pre-labeled or unlabeled.

- Build a custom dataset.

- Augment existing data you have or can acquire, turning raw or processed data into a dataset for ML.

Option 1: Open Source Datasets

Open source datasets have proliferated since the early 2000s. We've compiled a separate list of 18 datasets for machine learning projects. Open source data is excellent for education, experimentation, and proof of concept. It can also guide the collection of new data.

While there is more open-source data available than ever, a good deal of it is still generic. Furthermore, most open-source sets are just ‘good enough’ and may fall short of the mark when training important ML models.

Some of the main options are:

- Amazon Datasets: Stored in S3, great selection of datasets for rapid deployment in AWS.

- Awesome Public Datasets: A popular community-curated list of public datasets hosted on GitHub. Covers virtually every industry and sector.

- Dataset Search by Google: Google’s own dataset search tool which enables users to search by keyword, dataset type, and many other filters.

- Kaggle: Colossal open dataset search engine and database.

- OpenML: Described as a ‘global machine learning lab’. Contains access to everything from collaborative projects to algorithms and datasets.

- UCI Machine Learning Repository: The University of California, Irvine's ML repository. Excellent for training and educational purposes.

- Visual Data Discovery: A well-categorized search engine tool for computer vision only.

Option 2: Creating Custom Datasets

Creating custom datasets is often necessary when building models that require complete control over, and visibility into, the data used.

Moreover, open-source datasets cover most mainstream cases but naturally fail to cater to every ML project. Most of the original mid-2000s open-source sets are aging rapidly, diminishing their usefulness in modern ML.

In short, many ML projects rely on custom data to solve novel problems.

To create a custom dataset for computer vision, you'll need to obtain real images and/or video from the environment. This requires a different approach from ingesting structured data from a database via ETL pipelines or similar: computer vision often involves deploying photographers and videographers into the real world.

Aya Data has deployed specialist machine learning practitioners to collect real-world data in many sectors and industries. Custom datasets are necessary for building unique solutions to problems for which no datasets currently exist. Data collected in this way becomes an asset to the organization or business and secures ownership and usage rights, provided privacy rules and regulations are followed.

Data and Feature Distribution

Datasets are created to address a problem that data engineers and scientists can solve via machine learning (in this case, computer vision). By this stage, that problem should be well-defined.

Before collecting data, it’s crucial to determine the rough distribution of data required in the dataset. For example, consider an object detection model designed to detect the species of birds landing on a bird table. By collecting one week’s worth of data, the model may be able to generalize well to birds present in that one-week window.

But what if, for that week only, a flock of starlings dominates the bird table at the expense of other birds?

In such situations, it’s wise to have a rough idea of the distribution of birds in that locality at that time of year to ensure that the dataset features enough examples of each species of bird the model needs to detect. This type of problem is common when creating CV datasets – transient or ephemeral fluctuations in data threaten to warp a dataset’s representation.
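One way to catch this kind of skew is to compare the class shares in what you have collected against a rough expectation before labeling goes further. The sketch below assumes you already have a list of class labels and an expected share per class (for example, from a local bird survey); the species shares and the 5% tolerance are hypothetical.

```python
from collections import Counter

def distribution_gaps(labels, expected, tolerance=0.05):
    """Return {class: observed_share - expected_share} for every class whose
    observed share of `labels` differs from `expected` by more than `tolerance`."""
    if not labels:
        raise ValueError("no labels collected yet")
    counts = Counter(labels)
    total = len(labels)
    gaps = {}
    for cls, expected_share in expected.items():
        observed_share = counts.get(cls, 0) / total
        diff = observed_share - expected_share
        if abs(diff) > tolerance:
            gaps[cls] = round(diff, 3)
    return gaps

# Hypothetical example: one week of bird-table labels dominated by starlings,
# compared with a (hypothetical) local survey of species shares.
week_labels = ["starling"] * 70 + ["robin"] * 20 + ["blue tit"] * 10
survey = {"starling": 0.3, "robin": 0.3, "blue tit": 0.3, "sparrow": 0.1}
print(distribution_gaps(week_labels, survey))
```

Positive gaps mark over-represented species and negative gaps mark under-represented ones; a species with no images at all (here, sparrows) shows up as a gap equal to its full expected share.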

Check Your Images Carefully

Photography is a crucial but often-overlooked component of creating computer vision datasets. The adage “rubbish in, rubbish out” rings true here – the quality of the initial data is paramount.

This poses practical questions from the get-go: who will take the photos? Will photographing the subject matter be a simple task? What are the challenges?

1: Source Material Should Be Consistent

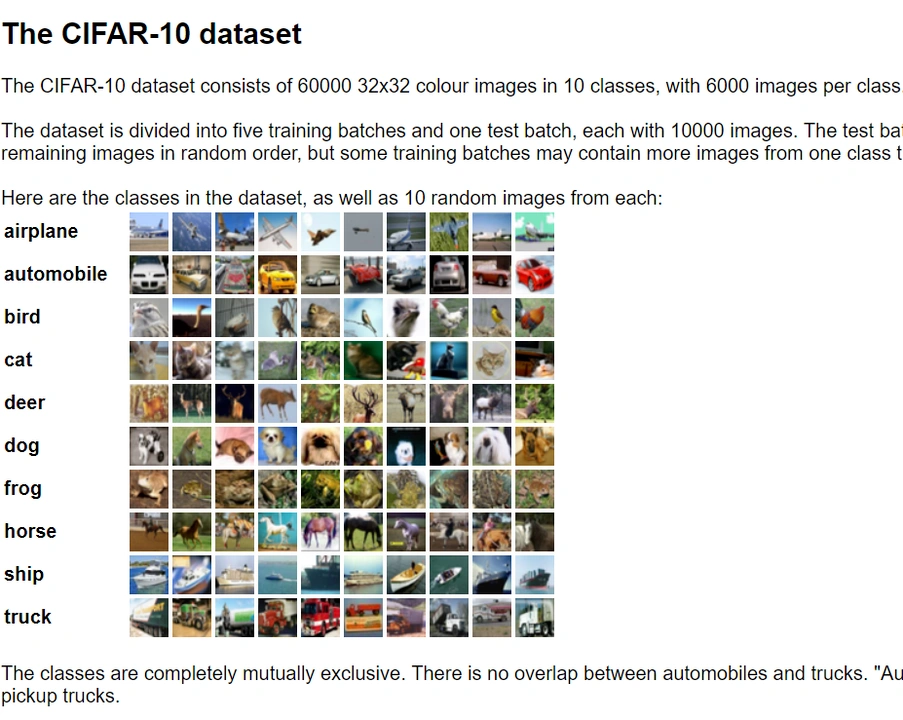

Image datasets need to be consistent in size and format. While neural networks work with practically any image in any size and format, there’s little sense in mixing small 64 x 64 images with gargantuan ultra-HD images. Moreover, when it comes to batch processing the images, it’s much easier if they’re all the same size. It’s best to keep shots consistent. Preferably, use the same cameras with the same settings, resolution, file format, etc.

For example, the CIFAR-10 dataset (shown above) contains low-res 32×32 images. Mixing these with high-res images would likely not be sensible.
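If source images do arrive in mixed sizes, one option is to resize everything to a single target before training. The sketch below uses NumPy nearest-neighbour resampling to stay dependency-light; the 32×32 default simply mirrors CIFAR-10 and is an assumption, not a recommendation for every project. In practice, OpenCV's `cv2.resize(image, (width, height), interpolation=cv2.INTER_AREA)` is a common choice for downsampling (note that OpenCV takes the target size as width, height).

```python
import numpy as np

def resize_nearest(image: np.ndarray, height: int, width: int) -> np.ndarray:
    """Nearest-neighbour resize of an H x W (or H x W x C) array."""
    # For each output row/column, pick the source row/column it maps onto.
    rows = np.arange(height) * image.shape[0] // height
    cols = np.arange(width) * image.shape[1] // width
    return image[rows[:, None], cols]

def standardize(images, height: int = 32, width: int = 32) -> np.ndarray:
    """Resize every image to one size and stack them into a single batch array."""
    return np.stack([resize_nearest(img, height, width) for img in images])
```

Stacking into one array is what makes batch processing straightforward; it only works once every image shares the same height, width and channel count.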

2: Check For Blur

Blur is seldom useful in image datasets. Although blur occurs naturally, it adds little useful information and obscures parts of the image.

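Blurry images can be rooted out in image labeling tools or with OpenCV's Laplacian function. Sharp images contain strong edges, so the variance of their Laplacian is high, while blurry images produce a low variance. Any image whose score falls below a chosen threshold can be flagged as blurry and removed.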
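Below is a minimal sketch of the variance-of-Laplacian check written in plain NumPy, so the arithmetic is visible. It applies the 4-neighbour Laplacian kernel to the interior pixels of a grayscale image; with OpenCV, `cv2.Laplacian(gray, cv2.CV_64F).var()` gives a comparable score. The threshold of 100 is a commonly quoted starting point rather than a rule, so it should be tuned by inspecting images from your own dataset.

```python
import numpy as np

def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour Laplacian over a 2-D grayscale image.

    Sharp images have strong edges and therefore a high variance;
    blurry images have weak edges and a low variance.
    """
    g = gray.astype(np.float64)
    # Kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]] applied to interior pixels
    # (the one-pixel border is skipped rather than padded).
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def is_blurry(gray: np.ndarray, threshold: float = 100.0) -> bool:
    """Flag an image as blurry when its Laplacian variance is below `threshold`."""
    return laplacian_variance(gray) < threshold

# Toy check: a checkerboard is all edges; a smooth gradient has none.
checker = (np.indices((64, 64)).sum(axis=0) % 2) * 255
gradient = np.tile(np.linspace(0, 255, 64), (64, 1))
print(is_blurry(checker), is_blurry(gradient))  # False True
```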

3: Color Space and Distribution

Objects appear as different colors depending on how light interacts with them. For example, a plant in bright sunlight will appear brighter than the same species of plant photographed in the shade. A classification model might consider those two plants different species if there is not sufficient data to classify the plants in a range of lighting.

Ensuring images are taken in similar lighting from similar angles is a practical way to avoid this problem. Otherwise, it’s possible to extract color values from images and label colors based on specific value ranges.
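As a rough sketch of labeling by value ranges, the code below computes each image's mean brightness using the ITU-R BT.601 luma weights and buckets it as shade, normal or bright. The cut-off values are hypothetical. Counting the resulting labels per class can show whether, for example, one plant species was only ever photographed in the shade.

```python
import numpy as np

def mean_luma(rgb: np.ndarray) -> float:
    """Mean brightness of an H x W x 3 RGB image on a 0-255 scale (BT.601 weights)."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return float((0.299 * r + 0.587 * g + 0.114 * b).mean())

def lighting_label(rgb: np.ndarray, dark: float = 70.0, bright: float = 185.0) -> str:
    """Bucket an image by mean brightness; the cut-offs are hypothetical defaults."""
    luma = mean_luma(rgb)
    if luma < dark:
        return "shade"
    if luma > bright:
        return "bright"
    return "normal"
```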

4: Check For Class Balance

When working with pre-made or synthetic datasets, it's tempting to assume that the classes are balanced and the dataset is representative of the ground truth. This isn't always the case, and class balance is equally (or more) important when creating custom datasets.

For example, what do you do when you realize you have four times more data for class A than class B? Ideally, you’d increase the quantity of B to meet A, or less ideally, you’d reduce the quantity of A until it matches B.

Alternatively, you could bolster your dataset by generating new samples from the data you already have, a technique called data augmentation (see below).
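The two options above (growing B to match A, or shrinking A to match B) correspond to random oversampling and random undersampling. The sketch below assumes samples are (image path, label) pairs; the function name and fixed seed are illustrative. Oversampling here simply duplicates existing minority-class images, which is why it is usually paired with augmentation so that the copies are not identical.

```python
import random
from collections import defaultdict

def rebalance(samples, mode="oversample", seed=0):
    """Balance a list of (path, label) pairs by random over- or undersampling.

    oversample:  duplicate random minority-class samples up to the largest class.
    undersample: keep a random subset of each class, down to the smallest class.
    """
    if mode not in ("oversample", "undersample"):
        raise ValueError("mode must be 'oversample' or 'undersample'")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for sample in samples:
        by_class[sample[1]].append(sample)
    sizes = [len(items) for items in by_class.values()]
    target = max(sizes) if mode == "oversample" else min(sizes)
    balanced = []
    for items in by_class.values():
        if len(items) >= target:
            balanced.extend(rng.sample(items, target))
        else:
            balanced.extend(items + rng.choices(items, k=target - len(items)))
    rng.shuffle(balanced)
    return balanced
```

With a 4:1 split, such as 40 images of class A and 10 of class B, oversampling yields 40 of each and undersampling yields 10 of each.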

Option 3: Augment or Enrich Existing Data

Data-rich or data-mature businesses and organizations may already have large data reserves to draw on for their machine learning initiatives. This pre-existing data can be processed for use in supervised model training.

For example, Aya Data worked with a client to label shoplifting events from some 5,000 hours of video surveillance footage. Here, the data was already present and needed to be processed into a dataset for machine learning.

Many businesses and organizations already collect visual information in the form of CCTV and other video footage. Airbus, for example, uses video footage from its aircraft to train autonomous aviation technologies.

Data Augmentation for Image Data

Data augmentation enables ML teams to strengthen and enlarge their datasets without sourcing or creating new data. It's possible to effectively increase the number of image samples in a dataset by mirroring, flipping, cropping, and combining existing images. Adding noise and other artifacts helps models generalize better to partially obscured images in the real world (e.g. in mist or rain).

The primary data augmentation techniques for image data are:

- Mirroring, cropping, translation, shearing, flipping and zooming: These techniques manipulate the entire image to alter its form and position on the image canvas. Moving the subject around the frame helps avoid positional bias, e.g. when the primary features are always found in the center of the image.

- Combining: Combining different images into new images. For example, single class images of dogs and cats can be collaged against a background to create a new multi-class image.

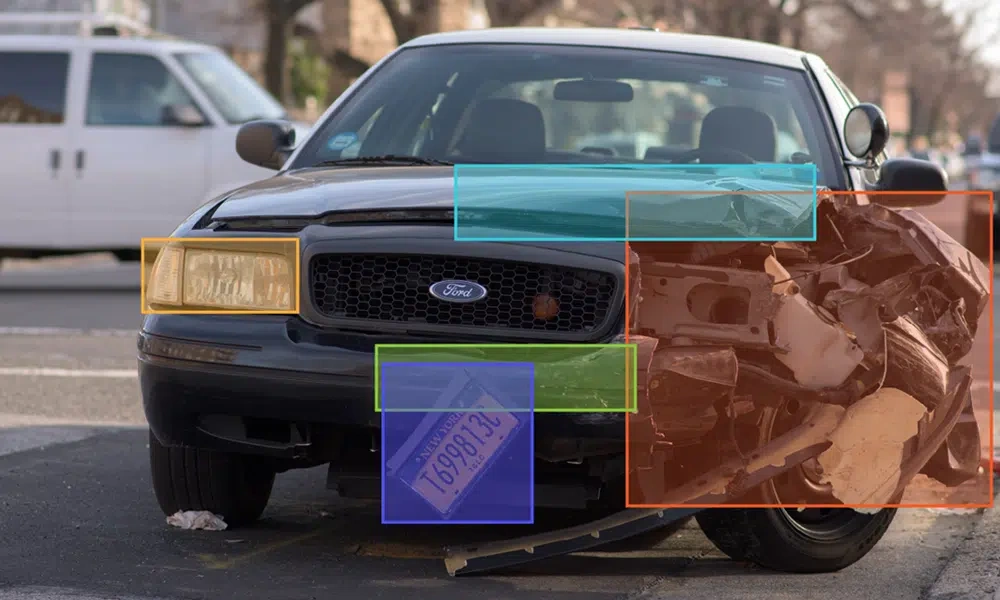

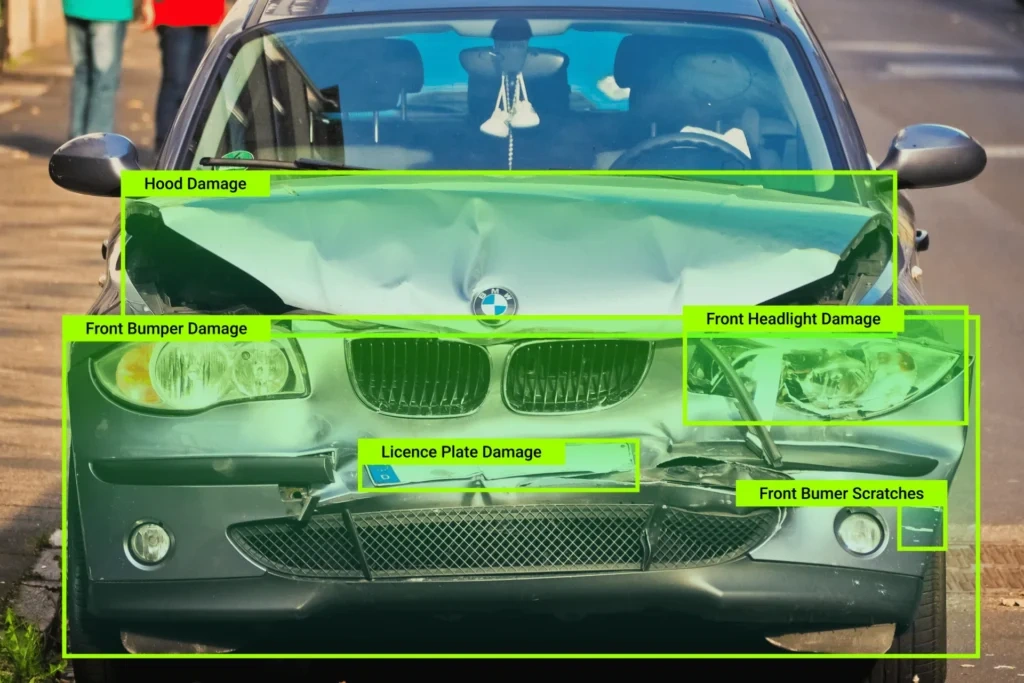

- Color space manipulation: Manipulating colors helps reduce the effects of glare, shadows, overexposure, etc. Sometimes, it’s easier to reduce the color space (or even grayscale an image) in order to simplify the problem. It’s often more efficient to process complex shapes without color. Aya Data created a car damage dataset for an insurance company and filtered out the green and red channels to leave just blue.
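The color space manipulations above can be sketched with NumPy alone. This assumes images are `H x W x 3` arrays in RGB order; images loaded with OpenCV's `cv2.imread` are in BGR order, so blue is channel 0 there rather than 2. Keeping only the blue channel mirrors the car damage example, but which channel (if any) helps is a per-dataset question.

```python
import numpy as np

def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Collapse an H x W x 3 RGB image to H x W using ITU-R BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    gray = rgb[..., :3].astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)

def keep_channel(rgb: np.ndarray, channel: int = 2) -> np.ndarray:
    """Zero every channel except `channel` (0 = red, 1 = green, 2 = blue in RGB order)."""
    out = np.zeros_like(rgb)
    out[..., channel] = rgb[..., channel]
    return out
```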

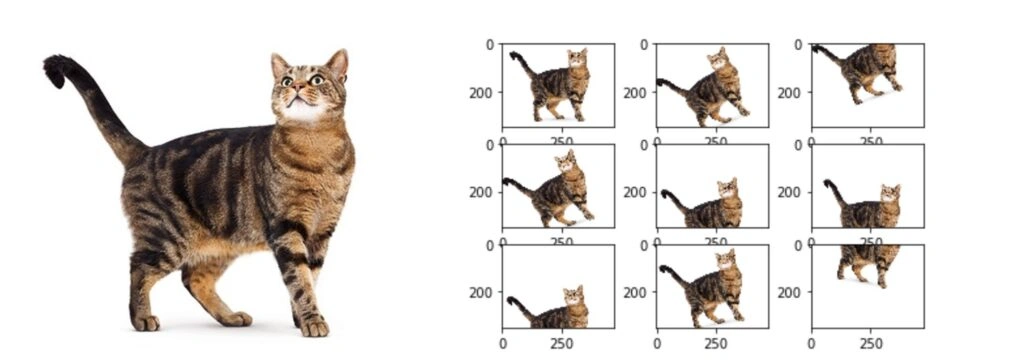

In the example image of a cat, six new images are effectively generated from the single image on the left. While the new images are arguably inferior to entirely new images of a different cat, or even the same cat, this is still a valuable way to correct class imbalance. Manipulating images in this way allows teams to multiply their data resources and create augmented datasets from pre-existing assets.
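A minimal NumPy sketch of this idea, producing six variants from one image, is below. The particular transforms, crop size, shift and noise level are assumptions chosen for illustration. `np.roll` wraps pixels around the edge rather than padding, and for object detection datasets any bounding boxes must be transformed along with the image.

```python
import numpy as np

def six_variants(image: np.ndarray, seed: int = 0) -> list:
    """Return six augmented variants of one H x W x C uint8 image."""
    rng = np.random.default_rng(seed)
    h, w = image.shape[:2]
    noisy = image.astype(np.float64) + rng.normal(0.0, 10.0, size=image.shape)
    return [
        np.fliplr(image),                                 # mirror left-right
        np.flipud(image),                                 # flip top-bottom
        np.rot90(image),                                  # rotate 90 degrees counter-clockwise
        image[h // 8 : h - h // 8, w // 8 : w - w // 8],  # central crop (about 75%)
        np.roll(image, shift=max(1, w // 10), axis=1),    # shift right, wrapping round
        np.clip(noisy, 0, 255).astype(np.uint8),          # add Gaussian noise
    ]
```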

Three Data Augmentation Tools For Computer Vision

In addition to generating new data from existing data, it’s also possible to batch-process images to erase common issues such as overexposure, oversaturation, and high or low contrast. The following tools enable ML teams to pre-process data for CV models:

TensorFlow: TensorFlow is an advanced open-source ML platform. It has many features dedicated to CV pre-processing, including editing image angles, brightness, rescaling, etc. There’s also an image transformer tool that generates new images from existing data.

OpenCV: OpenCV is an open-source computer vision library written in C++, with widely used Python bindings. It contains an array of tools for rotating, cropping, scaling, and filtering images.

scikit-image: Another open-source Python library for processing images. It has a huge range of tools for geometric transformation, color space manipulation, filtering, and analysis.
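For the batch pre-processing described above (correcting low or high contrast), a simple percentile-based contrast stretch is sketched below in NumPy. The 2nd and 98th percentile cut-offs are an assumption. The listed libraries provide comparable operations, such as `tf.image.adjust_contrast` in TensorFlow, `cv2.equalizeHist` in OpenCV and `skimage.exposure.rescale_intensity` in scikit-image.

```python
import numpy as np

def stretch_contrast(image: np.ndarray, low_pct: float = 2.0, high_pct: float = 98.0) -> np.ndarray:
    """Map the low/high intensity percentiles to 0 and 255, clipping the tails."""
    lo, hi = np.percentile(image, [low_pct, high_pct])
    if hi <= lo:
        # Flat (single-intensity) image: nothing to stretch.
        return image.copy()
    scaled = (image.astype(np.float64) - lo) * 255.0 / (hi - lo)
    return np.clip(scaled, 0, 255).astype(np.uint8)

def batch_stretch(images):
    """Stretch each image in a batch using its own percentiles."""
    return [stretch_contrast(img) for img in images]
```

Using percentiles rather than the absolute minimum and maximum keeps a few very dark or very bright pixels from dominating the rescaling.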

Summary: How to Source Data For Computer Vision Projects

Collecting data for computer vision projects revolves around three key strategies:

- Download a dataset online, either paid or open-source

- Create a custom dataset

- Augment data you already possess, or can extract from another source

Each data collection method has its place in modern computer vision. Ultimately, the goal is to collect high-quality, accurate data that represents the problem space well.

Issues of bias and representation should guide data collection, especially when relying on pre-made datasets. It’s often necessary to perform some form of data augmentation and enrichment, regardless of whether you’re using pre-made or custom data.