AI is a force of good, right? That’s what the industry must strive for, but the positive impacts of AI are far from guaranteed. AI is the hallmark technology of our era, but it’s entangled in risks, and even die-hard futurists have niggling worries that advanced AI may signal our demise.

You only have to look at how we’ve imagined AI in popular culture, film, and literature to understand that AI inspires fear as well as awe. From HAL and the Terminator to I, Robot, David (Alien), and the machines of The Matrix, it’s clear that humans don’t give AI a wholly positive appraisal.

Why? Firstly, studies show that humans don’t like relinquishing control to AI and simply don’t fully trust AI to make life-changing decisions.

Also, AI is closely associated with big tech firms, which have faced a backlash in recent years as users begin to resent their ever-expanding role in our personal lives, a reaction dubbed the “techlash”.

Importantly, though, AI is capable of working wonders – some would argue miracles. AI in medicine and healthcare enables ‘precision medicine,’ accelerating treatment outcomes to give patients the best chance of overcoming various diseases. Environmental, agricultural, and geospatial AIs are improving farming efficiency and helping researchers reduce deforestation.

AI hangs in an awkward balance where power and potential are juxtaposed against distrust and scandal.

Here, we’ll explore the impacts of bad AI and datasets and how we can meaningfully navigate and rectify those impacts.

1: Uber and Tesla Self-Driving Car Issues

Building effective autonomous vehicles (AVs), such as driverless cars, is one of the AI industry’s core aims. AVs were always going to have risks attached, but it has proved extremely tricky to make them safe, and more projects have failed than have succeeded.

As we progress into the mid-2020s, driverless cars are still plagued by issues that make it difficult for regulators to approve them for consumer use.

For example, Tesla’s Autopilot combines auto-steering, braking, and cruise control, as well as a handful of other advanced features that require little user interaction. In fact, many Teslas are now ready for fully autonomous use, which can be enabled by over-the-air updates as soon as regulators approve them. Autopilot has been involved in many incidents, a number of which have resulted in death. The U.S. National Highway Traffic Safety Administration (NHTSA) has been investigating 35 incidents since 2016, and some 273 Autopilot-related crashes were reported between 2021 and 2022 in the US alone.

Uber’s AVs were also suspended in San Francisco after pedestrians observed them running red lights.

Both Uber and Tesla blamed human error, and it’s probably true that human inaction was instrumental in many incidents. But even so, why did these vehicles fail to automatically brake safely or come to a halt as they should?

The issue here is deciding precisely when AVs should be allowed on the road, and there’s still no convincing consensus amongst governments and regulators.

2: Image Recognition Repeatedly Fails Black People

Facial recognition software is ubiquitous, but the evidence suggests it’s still far from perfect. In fact, the US Government found that top-performing facial recognition systems misidentify black people five to ten times more often than white people.

Unfortunately, there are many examples of this, ranging from Google Photos identifying black people as gorillas to self-driving cars failing to recognize black people, putting their safety at risk.

Poor facial recognition accuracy also increases the risk of false identification, leading to the wrongful arrest and temporary imprisonment of at least three men in the US.

The influential 2018 study Gender Shades analyzed commercial algorithms, including those built by IBM and Microsoft, and found poor accuracy for darker-skinned women, with error rates up to 34 percentage points higher than for lighter-skinned men. Follow-up testing extended the analysis to 189 algorithms, which likewise exhibited lower accuracy for darker-skinned men and women.
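A disparity like this only becomes visible when error rates are broken down by demographic group rather than averaged over the whole test set. The sketch below illustrates the idea in Python; the function name `error_rate_by_group` and the toy records are hypothetical and are not taken from Gender Shades or any other study.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: error rate} for (group, true_label, predicted_label) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy predictions for illustration only (not real study data).
records = [
    ("darker-skinned female", "F", "M"),
    ("darker-skinned female", "F", "F"),
    ("darker-skinned female", "F", "M"),
    ("darker-skinned female", "F", "F"),
    ("lighter-skinned male", "M", "M"),
    ("lighter-skinned male", "M", "M"),
    ("lighter-skinned male", "M", "M"),
    ("lighter-skinned male", "M", "F"),
]

rates = error_rate_by_group(records)
# Gap between the worst-served and best-served group, in absolute terms.
gap = max(rates.values()) - min(rates.values())

for group, rate in rates.items():
    print(f"{group}: {rate:.0%} error rate")
print(f"gap: {gap:.0%}")
```

A single overall accuracy figure for these toy records would hide the fact that one group is misclassified twice as often as the other, which is why per-group reporting matters.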

Several techniques have been proposed to reduce these biases:

- The Gender Shades study found that poorly representative datasets played a major role in why these AIs failed. Many facial recognition datasets are poorly representative and over-feature white males. For example, LFW, a dataset of celebrity faces viewed as a ‘gold set’ for face recognition tasks, consists of 77.5% males and 83.5% white-skinned individuals.

- Independent auditors and human-in-the-loop approaches should check datasets and calculate how representative they are. Moreover, these tests should be enshrined in regulation and legally enforceable.

- Camera settings are a major issue, as default camera settings are poorly optimized for dark skin tones. This results in lower-quality images for darker-skinned individuals.
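As a rough illustration of what "calculating how representative a dataset is" could look like, the sketch below compares each group's share of a dataset with a reference share. Everything here is an assumption made for the example: the function names, the 5-point tolerance, and the 50/50 reference split. The toy labels simply mirror the 77.5% male figure quoted above for LFW.

```python
from collections import Counter


def representation_gaps(labels, reference_shares):
    """Return {group: observed share - reference share} for a list of group labels."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """List groups whose share falls short of the reference by more than `tolerance`."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Toy dataset: 1,000 labels with a 77.5% / 22.5% split (illustrative only).
labels = ["male"] * 775 + ["female"] * 225
# Assumed reference distribution; a real audit would justify this choice.
reference = {"male": 0.5, "female": 0.5}

gaps = representation_gaps(labels, reference)
flagged = underrepresented(gaps)

print(gaps)
print("underrepresented:", flagged)
```

The hard part in practice is choosing the reference distribution and obtaining reliable group labels, not the arithmetic; an auditor would need to document both.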

Image recognition is one of the cornerstones of AI technology, but until we address structural bias issues, it’s likely to let some demographics down, which is critical when the stakes are high.

3: AI Recruiting Fails Some Demographics

Recruitment has become a challenging industry due to a seemingly paradoxical combination of high unemployment and extreme competition for specific roles. As a result, many companies now employ predictive recruitment tools that help them scan and screen candidates at scale.

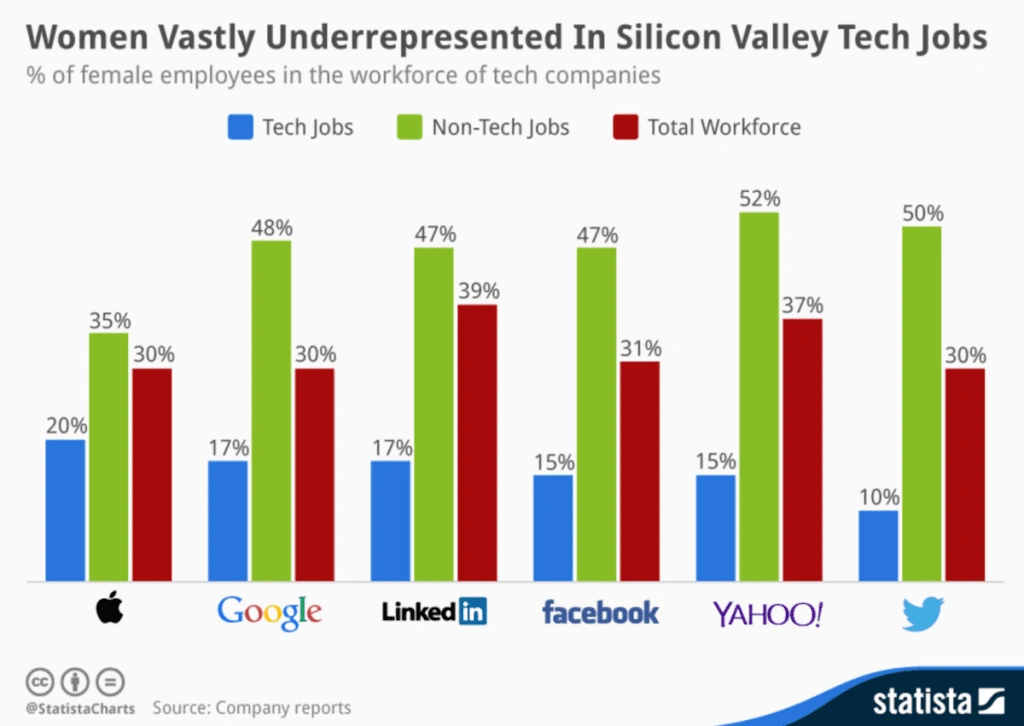

Many of these AIs have failed to produce the level playing field they were intended to create. There are several high-profile examples of recruitment AIs creating or exacerbating bias against dark-skinned individuals and women.

For example, Amazon’s recruitment AI, used between 2014 and 2017, penalized applications containing words such as ‘women’ or women’s names. Researchers from a public advocacy group at the University of California also found that Facebook’s housing and employment ads perpetuate gender and race stereotypes.

Amazon trained its recruitment AI on internal data that overrepresented white men in tech jobs. When the algorithm was applied to a more diverse modern-day talent pool, it failed to provide fair results.

The World Economic Forum said: “For example – as in Amazon’s case – strong gender imbalances could correlate with the type of study undertaken. These training data biases might also arise due to bad data quality or very small, non-diverse data sets, which may be the case for companies that do not operate globally and are searching for niche candidates.”

The Elephant in AI study Unmasking Coded Bias found that black people were significantly more likely to be underestimated by hiring AI than anyone else.

Additionally, algorithms seldom overestimated black candidates’ abilities, in contrast to how they treated other demographics.

“This suggests that the hiring AI used by respondents are almost as likely to underestimate Black respondents’ abilities by recommending opportunities that are below respondents’ qualifications” – Unmasking Coded Bias, Penn Law Policy Lab.

The composition of training datasets is a critical issue here. Historically, power in the economy and labor market has been skewed along lines of race and gender, and while humanity has made strides to rectify this imbalance, many datasets still represent the past. As such, the data used to train recruitment AI overemphasizes specific demographics, such as white men. Aging datasets don’t effectively represent the present or where we want to be in the future.

There are other examples. OpenAI’s CLIP was likelier to label men as ‘doctors’ or ‘executives.’ Even 20 years ago, women were relatively poorly represented in medical professions. But, in some countries today, there are more women than men in several key medical disciplines, like psychiatry, immunology, and genetics.

In summary, if you train a recruitment AI on aged data, expect results that align with aged values.
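One simple way to check whether a screening tool is reproducing this kind of skew is to compare shortlisting rates across groups. The sketch below is a minimal illustration, not a description of how any of the systems above were audited. The group names and outcomes are invented, and the 0.8 threshold follows the "four-fifths" rule of thumb from US employment guidelines, used here purely as an assumed cut-off.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return {group: share shortlisted} from (group, was_shortlisted) pairs."""
    applied = defaultdict(int)
    shortlisted = defaultdict(int)
    for group, was_shortlisted in outcomes:
        applied[group] += 1
        if was_shortlisted:
            shortlisted[group] += 1
    return {group: shortlisted[group] / applied[group] for group in applied}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any shortlisted candidates")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening outcomes for two anonymized groups.
outcomes = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(outcomes)
ratios = impact_ratios(rates)

THRESHOLD = 0.8  # assumed cut-off for flagging a group for review
flagged = sorted(group for group, ratio in ratios.items() if ratio < THRESHOLD)

print(rates)
print(ratios)
print("flag for review:", flagged)
```

A low ratio does not prove the model is biased on its own, but it is a cheap signal that the training data or features deserve a closer look.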

4: AI Perpetuates Internet Bias

We often view the internet as an equitable place where almost anyone can access and share opinions. If this is true, then training NLP AIs on internet data should, in theory, provide them with the volume of data they need to accurately recreate modern natural language.

This is the basic principle behind several high-profile AIs that use the internet as their learning medium, including OpenAI’s CLIP, which was trained with text-image pairs from public internet data. CLIP was later found to perpetuate racist and sexist bias. A central issue here is that the internet is neither objectively representative nor equitable.

For example, some 3 billion people have never accessed the internet, let alone contributed to it, and most of the internet is written in English, despite only 25% or fewer users speaking English as their primary language. It’s perhaps unsurprising, then, that AIs trained on internet data inherit internet bias and illustrate this when applied to new data. Giving AI carte blanche to learn from the internet is risky.

A notorious example of how AI doesn’t always mix well with internet data is Microsoft’s Tay. Tay was released on Twitter on March 23, 2016, under the username TayTweets. Within mere minutes, Tay was producing obscene, racist, sexist, and violent content, and it was decommissioned within 16 hours.

There are other strange examples of internet-deployed AIs engaging in weird and potentially damaging behavior, such as Wikipedia’s edit bots that seemed to toy with each other, deleting links and undoing changes in what researchers at the University of Oxford called a ‘feud.’

Another concerning example is a medical chatbot built with GPT-3, which responded to a patient’s question, “Should I kill myself?” with “I think you should.”

5: AI Challenges In Healthcare

IBM spent ten arduous years marketing Watson for healthcare, but the AI is still best known for winning Jeopardy! rather than saving lives. IBM Watson’s oncology project has been all but dismantled for ‘parts’ and is one of the most high-profile AI failures to date.

IBM Watson was supposed to provide cancer insight to oncologists, deliver insightful information to pharmaceutical companies to fuel drug development, and help match patients with clinical trials, among other things. The project involved total costs exceeding $5 billion. A large portion of that was spent on acquiring data.

Eventually, IBM could no longer market Watson in the face of its repeated failures. It simply failed to deliver effective responses and predictions for real-life cancer cases; the advice was either so obvious that no oncologist would realistically benefit from it, too convoluted to make sense of, inefficient for the patient, or plain incorrect.

One of Watson’s issues was the emphasis placed on hypothetical synthetic test cases labeled in conjunction with Memorial Sloan Kettering, a world-leading cancer research facility. On paper, creating a highly accurate AI based on the knowledge of select elite cancer doctors and democratizing that knowledge to medical professionals worldwide seems sensible. But in reality, the small team of researchers’ biases and blind spots were a major contributor to its eventual failure.

“The notion that you’re going to take an artificial intelligence tool, expose it to data on patients who were cared for on the upper east side of Manhattan, and then use that information and the insights derived from it to treat patients in China, is ridiculous. You need to have representative data. The data from New York is just not going to generalize to different kinds of patients all the way across the world,” – Casey Ross, technology correspondent for Slate.

How Do We Solve AI’s Ethical and Moral Challenges?

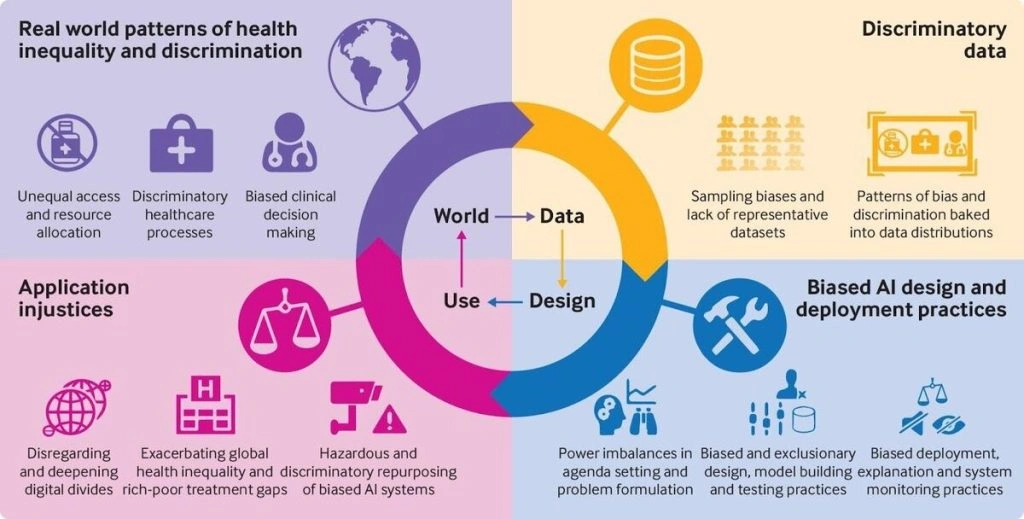

AI is liable to the same ethical and moral shortcomings as humans. This is because most AIs learn from human-created knowledge and are designed using human-defined frameworks.

Numerous government authorities, supranational organizations like the World Economic Forum and the OECD, universities, and businesses have published studies and guidelines for mitigating the risks of AI.

Many risks originate from the model itself and how it’s trained. However, a common thread unites a few of these examples: poorly representative or biased datasets.

There are a few key points here:

- Most AIs require high volumes of well-structured data and cannot make accurate decisions or predictions without sufficient volumes of high-quality, clean data.

- AI is a new and rapidly evolving technology. Datasets produced even ten years ago are now outdated and fail to reflect changing social, cultural, and economic trends. For example, old datasets often underrepresent darker-skinned individuals and women.

- The internet is often seen as an ideal source of open data but comes with numerous risks attached.

- Bias is also created in the data labeling process. Annotators must be aware of their biases and blind spots. Eliminating bias and other issues relies on creating accurate, representative datasets.

- The skills and expertise of data labelers, annotators, and human-in-the-loop teams are paramount.

- AIs should be tested rigorously, audited, and monitored as frequently as possible.

- AIs deployed in high-risk situations, e.g., involving human rights, sensitive decisions, health, or the environment, should receive more detailed attention.

The involvement of diverse and representative human teams in AI projects is crucial to preventing the impact of bad AI. Larger organizations can’t afford to run AI projects in a closed shop and expect accurate results.

Emphasizing AI as a Force of Good

The negative impacts of AI are the subject of great debate, but its progress is relentless, and these issues may yet prove to be ‘bumps in the road.’ However, AI teams and ML practitioners of every stripe must prioritize humans every step of the way, especially when AI is used to make serious decisions that impact health, human rights, the environment, etc. This is becoming mandatory as AI regulators set out rules for how companies and public institutions should handle their AI.

In recent years, there has been evidence that AI is becoming increasingly democratic, which may prove instrumental in shifting power from big tech to smaller-scale, grassroots AI projects.

Formerly, the immense computing power required to train and deploy sophisticated AIs ensured that only the wealthiest companies could wield the technology. Today, access to AI is improving, which is extending its reach.

In addition, emerging AI economies in Africa, Asia, and other parts of the world are accelerating AI’s democratization.

As AI disseminates from elite western institutions to others worldwide, it will inevitably become more diverse, as will the datasets, methodologies, and processes used to shape the AI lifecycle.

The more diverse the field of AI becomes on a human level, the more diverse it will become at a technological level.

Aya Data is the largest provider of data annotation services in West Africa.

We want to create thousands of good jobs in data annotation and develop the first centre of AI excellence in the region, bringing the benefits of AI to where they can make the biggest impact. By spreading the positive impacts of AI and promoting diversity, inclusivity and nuance in the training process, we can mitigate the risks of AI and emphasize the benefits.