AI chatbots were once a fringe technology but are now ubiquitous. Some 58% of B2B businesses and 42% of B2C businesses use chatbots in some form, and 67% of consumers worldwide interacted with a chatbot in 2021.

Whilst chatbots are primarily used to assist with customer service enquiries, retail spending via chatbots is set to reach $142 billion by 2024, a massive increase from just $2.8 billion in 2019.

In fact, chatbots are the fastest-growing communication channel in the world, and they aren’t just used in commercial contexts. Innovative chatbots are being designed to assist people with Alzheimer’s and other neurodegenerative disorders, and chatbots are even employed in post-stroke and traumatic brain injury therapy.

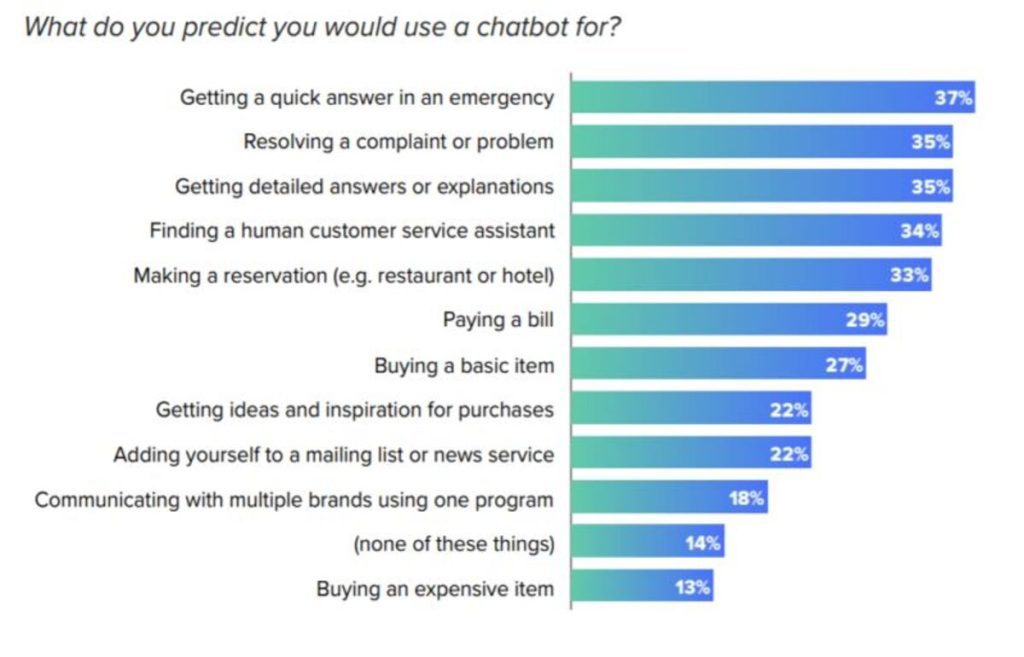

The graph above shows that intent to use chatbots is high across a wide variety of use cases.

As such, it’s perhaps unsurprising that business investment in chatbots has surged over the last two to three years. There are various methods for creating chatbots, but what are the best-practice techniques for training them, and what data do you need to create the best AI chatbots?

Key Terms: Utterances, Intent and Entities

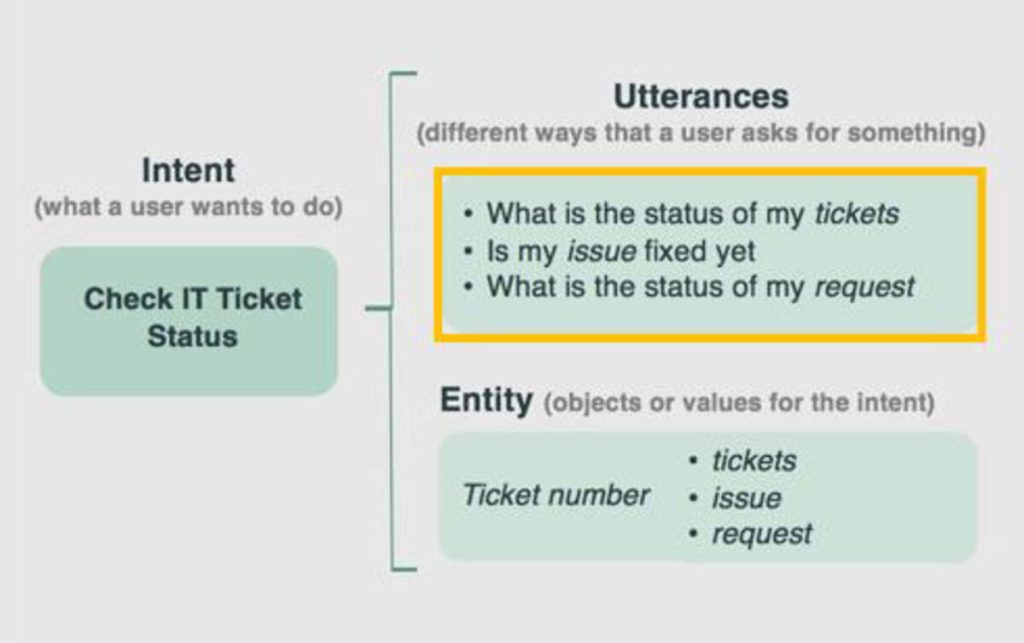

There are three key terms to understand in chatbot design and training: utterances, intent and entities.

- An utterance is something a user might say to the chatbot.

- The intent is the meaning behind an utterance, which often encapsulates an instruction. For example, the utterance “what is the weather like today in Tokyo?” expresses the intent of finding out the weather forecast.

- Entities are keywords that add context to the intent. For example, ‘weather’, ‘today’ and ‘Tokyo’ are three relevant entities in the phrase above.
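To make the three terms concrete, here is a minimal Python sketch of how one labelled training example might be stored. The intent name `get_weather`, the entity labels `topic`, `date` and `location`, and the helper `make_example` are all hypothetical, and the sketch assumes entities are recorded as character spans within the utterance; each NLP platform has its own annotation format.

```python
from dataclasses import dataclass, field

@dataclass
class Entity:
    label: str  # hypothetical entity type, e.g. "location"
    start: int  # character offset where the entity begins
    end: int    # character offset just past the entity's last character

@dataclass
class TrainingExample:
    utterance: str  # what the user says
    intent: str     # what the user means
    entities: list[Entity] = field(default_factory=list)

    def entity_values(self) -> dict[str, str]:
        """Map each entity label to the part of the utterance it covers."""
        return {e.label: self.utterance[e.start:e.end] for e in self.entities}

def make_example(utterance: str, intent: str, **entity_text: str) -> TrainingExample:
    """Build an example by locating each entity's text inside the utterance."""
    entities = []
    for label, text in entity_text.items():
        start = utterance.find(text)
        if start == -1:
            raise ValueError(f"{text!r} not found in utterance")
        entities.append(Entity(label, start, start + len(text)))
    return TrainingExample(utterance, intent, entities)

example = make_example(
    "what is the weather like today in Tokyo?",
    intent="get_weather",
    topic="weather",
    date="today",
    location="Tokyo",
)
print(example.entity_values())
# {'topic': 'weather', 'date': 'today', 'location': 'Tokyo'}
```

Storing spans rather than bare keywords keeps each entity tied to its exact position, which makes it easy to check that an annotation actually matches the text it labels.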

Another important component is context, such as the user’s profile, their location and the time of day. “What is the weather like today?” is only answerable if the bot has access to the user’s location; otherwise, it has to ask.
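The role of context can be sketched as a simple fallback: use the location the user stated if there is one, otherwise a location from the user’s context, and only ask when neither is available. The function names and the shape of the `entities` and `context` dictionaries are hypothetical, and where a real bot would query a weather service, this sketch just echoes the values it found.

```python
from typing import Optional

def resolve_location(entities: dict[str, str], context: dict[str, str]) -> Optional[str]:
    """Prefer a location stated in the utterance; otherwise fall back to context."""
    return entities.get("location") or context.get("location")

def answer_weather(entities: dict[str, str], context: dict[str, str]) -> str:
    location = resolve_location(entities, context)
    if location is None:
        # Neither the utterance nor the context says where the user is, so ask.
        return "Which city would you like the weather for?"
    date = entities.get("date", "today")
    # Placeholder for a call to a weather service.
    return f"Looking up the weather for {location} ({date})."

print(answer_weather({"date": "today"}, {"location": "Tokyo"}))
# Looking up the weather for Tokyo (today).
print(answer_weather({"date": "today"}, {}))
# Which city would you like the weather for?
```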

Constructing a high-performing chatbot relies on a mixture of human data labelling and automated NLP training. Human intervention is usually required to label specific intent modifiers and entities, ensuring that the chatbot is tailored to its use case. Meanwhile, training on larger, overarching datasets helps the chatbot form a natural understanding of general language use.
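As a rough illustration of the human-labelling side, the sketch below trains a tiny intent classifier on a handful of hand-labelled utterances. It uses multinomial Naive Bayes with add-one smoothing only because that fits in a few lines of standard-library Python; it is an illustrative substitute, not what any particular platform uses, and it does nothing to capture the general language understanding that comes from larger datasets. The utterances and intent names are made up.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Optional

def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayesIntentClassifier:
    """Multinomial Naive Bayes over word counts, with add-one (Laplace) smoothing."""

    def fit(self, examples: list[tuple[str, str]]) -> "NaiveBayesIntentClassifier":
        # Number of labelled utterances per intent (used for the prior).
        self.intent_counts = Counter(intent for _, intent in examples)
        # How often each word appears in utterances labelled with each intent.
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        for utterance, intent in examples:
            self.word_counts[intent].update(tokenize(utterance))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, utterance: str) -> Optional[str]:
        """Return the intent with the highest log-probability (None if no intents)."""
        total = sum(self.intent_counts.values())
        best_intent, best_score = None, -math.inf
        for intent, n in self.intent_counts.items():
            counts = self.word_counts[intent]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n / total)  # log prior
            for word in tokenize(utterance):
                score += math.log((counts[word] + 1) / denom)  # smoothed likelihood
            if score > best_score:
                best_intent, best_score = intent, score
        return best_intent

labelled = [
    ("what is the weather like today", "get_weather"),
    ("will it rain in Tokyo tomorrow", "get_weather"),
    ("is it sunny outside", "get_weather"),
    ("book a table for two tonight", "make_booking"),
    ("I want to reserve a room", "make_booking"),
    ("can I book a flight to Paris", "make_booking"),
]
clf = NaiveBayesIntentClassifier().fit(labelled)
print(clf.predict("what's the weather in Paris"))  # get_weather
print(clf.predict("please book me a room"))        # make_booking
```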

Using Contextually Relevant Data

There are a number of open-source NLP datasets and resources available for training chatbots and other NLP applications, including:

- ChatterBot, an ML-based conversational dialogue engine in Python.

- The WikiQA Corpus, an open-source set of question and sentence pairs built from Bing query logs and Wikipedia pages.

- Yahoo Language Data, question and answer datasets compiled from Yahoo Answers.

- Ubuntu Dialogue Corpus, some 1 million conversations extracted from tech support chat logs.

- Twitter Support, over 3 million tweets and replies from major brands.

- HotpotQA, a question-answering dataset featuring natural, multi-hop questions.

- Cornell Movie-Dialogs Corpus, featuring dialogues extracted from movies.

Many of the corpus-style datasets are mainly suitable for experimental chatbots and testing hypotheses. There are also numerous managed or semi-managed NLP platforms for building conversational interfaces and chatbots, such as Google Dialogflow, IBM Watson, AWS Lex, Azure Bot Service, Rasa and Cognigy.
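Most of these corpora store multi-turn conversations, so a common preprocessing step is to slice each conversation into (context, response) pairs. The sketch below assumes each dialogue has already been parsed from its dataset’s own file format into a list of `(speaker, text)` turns; `to_pairs` and its parameters are hypothetical. Setting `responder` keeps only replies from one side, for example the brand in a customer support exchange.

```python
from typing import Optional

Turn = tuple[str, str]  # (speaker, text)

def to_pairs(dialogue: list[Turn], max_context: int = 2,
             responder: Optional[str] = None) -> list[tuple[str, str]]:
    """Turn one conversation into (context, response) training pairs.

    Each turn after the first can be a response; up to `max_context`
    preceding turns are joined to form its context.
    """
    pairs = []
    for i in range(1, len(dialogue)):
        speaker, text = dialogue[i]
        if responder is not None and speaker != responder:
            continue  # only keep replies from the chosen side
        if speaker == dialogue[i - 1][0]:
            continue  # same speaker twice in a row: not a reply
        window = dialogue[max(0, i - max_context):i]
        context = " ".join(t for _, t in window)
        pairs.append((context, text))
    return pairs

dialogue = [
    ("customer", "My order hasn't arrived."),
    ("agent", "Sorry to hear that. What is your order number?"),
    ("customer", "It's 12345."),
    ("agent", "Thanks, I can see it left the warehouse today."),
]
for context, response in to_pairs(dialogue, responder="agent"):
    print(f"{context!r} -> {response!r}")
```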

Chatbots Should Be Specifically Tailored to Their Use Case

The best chatbots are trained on conversational data specific to their use case. For example, KLM used 60,000 genuine customer questions to train its BlueBot chatbot, which produced much better outcomes than generic datasets would have. The Rose chatbot at the Las Vegas Cosmopolitan Hotel was developed following a 12-week consultation on customer pain points and typical questions about the hotel’s services.

Chatbots should also be scripted to suit the use case, which dictates whether they are formal or informal, use longer and more complex words, crack jokes and so on. The best chatbots are built using datasets unique to the business, or to similar businesses in the same industry. This ensures that the training data contains representative utterances, intents and entities.
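One simple way to check that a use case-specific dataset is representative is to count the labelled utterances for each intent the bot must handle and flag any that are missing or thin. In this sketch, the banking intent names and the threshold of three examples are arbitrary placeholders, not a recommendation.

```python
from collections import Counter

def coverage_report(examples: list[tuple[str, str]], required_intents: set[str],
                    min_examples: int = 3) -> dict[str, int]:
    """Return required intents that have fewer than `min_examples` utterances."""
    counts = Counter(intent for _, intent in examples)
    return {intent: counts[intent] for intent in sorted(required_intents)
            if counts[intent] < min_examples}

examples = [
    ("I can't log in to my account", "login_problem"),
    ("forgot my password", "login_problem"),
    ("reset my login details", "login_problem"),
    ("where is my payment?", "payment_status"),
]
print(coverage_report(examples, {"login_problem", "payment_status", "card_lost"}))
# {'card_lost': 0, 'payment_status': 1}
```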

Chatbots can also be programmed with real-time sentiment analysis, allowing businesses to route frustrated customers to live agents. This also provides an opportunity for customer segmentation: businesses can separate unhappy customers from satisfied ones, then follow up with the unhappy customers through feedback forms or promotions to reduce churn.
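A minimal sketch of sentiment-based routing and segmentation follows. It uses a deliberately tiny, hypothetical word list rather than a trained sentiment model, which is what a production chatbot would more likely use; the escalation threshold of -2 and the function names are likewise illustrative assumptions.

```python
import re

# Hypothetical, deliberately tiny lexicon standing in for a real sentiment model.
NEGATIVE = {"angry", "useless", "terrible", "frustrated", "waiting", "cancel", "worst"}
POSITIVE = {"thanks", "great", "perfect", "helpful", "love", "good"}

def sentiment(message: str) -> int:
    """Score a message as (number of positive words) - (number of negative words)."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def should_escalate(messages: list[str], threshold: int = -2) -> bool:
    """Route to a live agent once total sentiment falls to the threshold or below."""
    return sum(sentiment(m) for m in messages) <= threshold

def segment(conversations: dict[str, list[str]]) -> dict[str, list[str]]:
    """Negative total sentiment -> 'unhappy'; everyone else -> 'satisfied'."""
    groups: dict[str, list[str]] = {"unhappy": [], "satisfied": []}
    for customer_id, messages in conversations.items():
        total = sum(sentiment(m) for m in messages)
        groups["unhappy" if total < 0 else "satisfied"].append(customer_id)
    return groups

chat = ["I've been waiting an hour", "this bot is useless"]
print(should_escalate(chat))  # True
print(segment({"c1": chat, "c2": ["great, thanks!"]}))
# {'unhappy': ['c1'], 'satisfied': ['c2']}
```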

Testing Chatbots

Chatbot development involves near-constant iteration. Training shouldn’t stop once a seemingly accurate chatbot is produced in a training environment, as its performance can change dramatically once it is exposed to real data. Common fixes for training problems include cleaning the data and enriching it through deeper annotation and data labelling.
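Cleaning can start with something as basic as normalising utterances, removing duplicates and flagging utterances that annotators have labelled with conflicting intents, so those can be sent back for re-annotation. The sketch below assumes training data is a list of `(utterance, intent)` pairs; the helper names and example intents are hypothetical.

```python
import re
from collections import defaultdict

def normalise(utterance: str) -> str:
    """Lower-case, replace punctuation (except apostrophes) and collapse whitespace."""
    text = re.sub(r"[^\w\s']", " ", utterance.lower())
    return " ".join(text.split())

def clean(examples: list[tuple[str, str]]) -> tuple[list[tuple[str, str]], dict[str, set[str]]]:
    """Deduplicate utterances and separate out those with conflicting intent labels."""
    intents_by_text: dict[str, set[str]] = defaultdict(set)
    for utterance, intent in examples:
        intents_by_text[normalise(utterance)].add(intent)
    cleaned = [(text, next(iter(intents))) for text, intents in intents_by_text.items()
               if len(intents) == 1]
    conflicts = {text: intents for text, intents in intents_by_text.items()
                 if len(intents) > 1}
    return cleaned, conflicts

raw = [
    ("Where is my order?", "order_status"),
    ("where is my order", "order_status"),
    ("Cancel my order!", "cancel_order"),
    ("cancel my order", "order_status"),  # mislabelled
]
cleaned, conflicts = clean(raw)
print(cleaned)    # [('where is my order', 'order_status')]
print(conflicts)  # utterances to send back for re-annotation
```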

The following chatbot testing tools can also help:

- Botium, an automated chatbot testing platform that provides numerous Q&As for advanced regression testing, along with datasets for testing chatbot performance, language understanding and security.

- Zypnos, a code-free tool for automated chatbot regression testing that runs repeated tests, displays the results and sends reports after each testing session.

- TestMyBot, a free and open-source chatbot testing library that runs with Docker and Node.js.
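Whatever tool is used, the core idea of regression testing is to keep a fixed set of utterances with their expected intents and re-run it after every retraining, so that fixing one intent does not silently break another. The harness below sketches that idea in plain Python; it does not reflect the API of Botium, Zypnos or TestMyBot, and `toy_predict` is a hypothetical stand-in for the chatbot under test.

```python
from typing import Callable

def regression_test(predict: Callable[[str], str],
                    cases: list[tuple[str, str]]) -> tuple[float, list[tuple[str, str, str]]]:
    """Run every (utterance, expected_intent) case; return accuracy and failures."""
    failures = []
    for utterance, expected in cases:
        actual = predict(utterance)
        if actual != expected:
            failures.append((utterance, expected, actual))
    accuracy = 1 - len(failures) / len(cases) if cases else 1.0
    return accuracy, failures

def toy_predict(utterance: str) -> str:
    # Hypothetical stand-in for the real chatbot's intent classifier.
    return "get_weather" if "weather" in utterance.lower() else "fallback"

cases = [
    ("What's the weather in Tokyo?", "get_weather"),
    ("Is it going to rain?", "get_weather"),
    ("Book me a table", "make_booking"),
]
accuracy, failures = regression_test(toy_predict, cases)
print(f"accuracy: {accuracy:.2f}")  # accuracy: 0.33
for failure in failures:
    print(failure)
```

The failing cases point to where more labelled training data is needed, which feeds back into the iterative loop described above.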

Summary: What Data do You Need to Create the Best AI Chatbots?

The very best AI chatbots require semantically relevant training data that fits the intended use of the chatbot. A chatbot for a holiday company will require utterances and entities relevant to holidays, including common questions, issues and requests. A banking chatbot will require utterances and entities relevant to common banking issues, payment queries and so on.

Combining manually prepared datasets specific to the industry and use case with wider, more generic datasets for accurate NLP produces accurate, natural chatbots that also have some form of personality. Aya Data provides the natural language processing services required to build highly customised chatbots.

Book your free consultation to discuss how we can help you create the perfect chatbot.