Machine learning (ML) and artificial intelligence (AI) models still largely require the input of humans to train, tune and test models to ensure their accuracy and performance. When humans are positioned in the ML and AI loop or cycle, they can be referred to as humans-in-the-loop (HITLs).

HITL means leveraging both human and machine decision-making to train, optimize and test machine learning models. Beyond deployment, humans can audit AI performance and monitor for unexpected results.

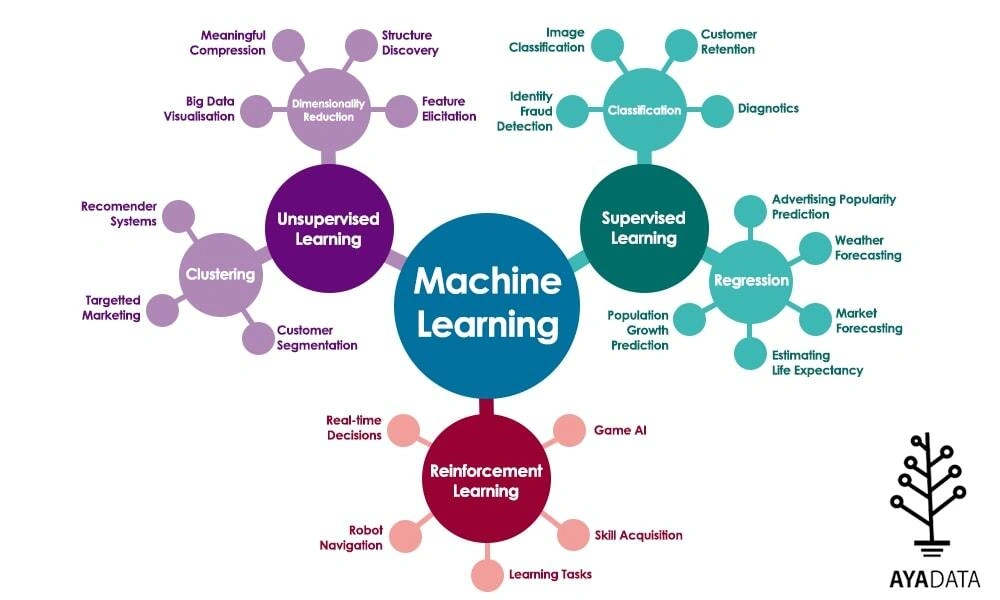

HITLs are required for both supervised and unsupervised learning, but the role of humans is more prominent in supervised learning, where humans have to label data to provide algorithms with the “target” for their learning activity.

Human-in-the-Loop (HITL) Defined

AI is often defined by its ability to remove the need for human involvement.

AI and ML models replace valuable skills, whether organizing colossal volumes of data, operating machinery 24/7, or performing other tasks beyond practical human cognitive or physical ability. But despite replacing or augmenting human skills and abilities, all AI and ML models require humans to work in the first place.

Humans play a pivotal role in the AI and ML lifecycle. AI draws upon the capabilities of both humans and machines, combining the value of machine decision-making with the value of human decision-making. The term “human-in-the-loop” defines these roles and interactions.

A human-in-the-loop influences AI and ML models to alter their predictions, performance, and uses. The role of HITLs will change as AIs become increasingly independent, but humans are currently central to the research, development and deployment of AI.

1: HITLs in Supervised Machine Learning

In supervised machine learning, HITLs start by labeling and annotating training data.

This is incredibly important for showing the model the target on which to base its own predictions. The quality of training data has a massive impact on the quality of the model, as described by the familiar term “rubbish in, rubbish out.”

Building training datasets also enables ML practitioners to accurately describe the ground truth, which is the objective reality that underlies the data. Without high-quality training data, a model cannot hope to make effective predictions when exposed to new, unannotated data.

In addition to building training sets and feeding them to the algorithm so it can learn, HITLs tune and optimize the model (e.g., hyperparameter tuning) and test it on test sets held back from the original training data.
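To make the hold-out idea concrete, here is a minimal Python sketch. It assumes a toy two-class dataset standing in for human-labeled data and uses a k-nearest-neighbors classifier purely as an example model. The dataset, the labels `cat` and `dog`, the 60/20/20 split and the candidate values of `k` are all illustrative assumptions, not a prescribed workflow.

```python
import random

def knn_predict(train, point, k):
    """Predict a label by majority vote among the k nearest labeled examples."""
    # Rank labeled examples by squared Euclidean distance to the query point
    nearest = sorted(train, key=lambda ex: sum((a - b) ** 2 for a, b in zip(ex[0], point)))[:k]
    labels = [label for _, label in nearest]
    return max(set(labels), key=labels.count)

def accuracy(train, data, k):
    """Fraction of examples in `data` whose human-assigned label the model reproduces."""
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

# Hypothetical human-labeled dataset: two clusters of 2D points
rng = random.Random(0)
labeled = [((rng.gauss(0, 1), rng.gauss(0, 1)), "cat") for _ in range(60)]
labeled += [((rng.gauss(4, 1), rng.gauss(4, 1)), "dog") for _ in range(60)]
rng.shuffle(labeled)

# Hold data back: 60% for training, 20% for tuning (validation), 20% for the final test
train, val, test = labeled[:72], labeled[72:96], labeled[96:]

# A human chooses the search space; the validation set picks the best value of k
candidates = [1, 3, 5, 7]
best_k = max(candidates, key=lambda k: accuracy(train, val, k))

# The test set is used only once, after tuning is finished
test_acc = accuracy(train, test, best_k)
print(f"best k = {best_k}, test accuracy = {test_acc:.2f}")
```

The key design choice is that the test set is touched only once, after tuning, so the reported accuracy isn't inflated by the tuning process itself.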

2: HITLs in Unsupervised Machine Learning

Unsupervised learning doesn’t necessarily require human involvement during the actual learning process, but humans still need to expose the model to data in the first place.

Supervised vs unsupervised machine learning

In some situations, a dataset will be supplied to the unsupervised algorithm for exploratory data analysis, such as clustering. In other situations, the model will be shown where to look for data, such as a series of web pages. Unsupervised models deployed in the real world, such as within autonomous vehicles, will ingest data from cameras and environmental sensors. In any case, the model is typically built and optimized for its intended target, which requires HITLs.

Unsupervised models also need to be tuned and optimized to produce accurate results and manage the bias-variance trade-off. For example, k-means clustering requires a human to choose the number of clusters (k), while DBSCAN requires a neighborhood radius and a minimum number of points per cluster. Humans need to tune hyperparameters like these to increase performance.
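As an illustration of tuning an unsupervised model, the sketch below runs plain k-means on a small, hypothetical set of 2D points for several values of k and prints the inertia (the total squared distance from each point to its nearest centroid). The algorithm cannot tell which k is “right”; a human typically looks for the “elbow” where adding clusters stops paying off. The deterministic farthest-point initialization is a simplification chosen for reproducibility; libraries commonly use the randomized k-means++ scheme instead.

```python
def dist2(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20):
    """Plain k-means (Lloyd's algorithm); returns (centroids, inertia)."""
    # Deterministic farthest-point initialization, used here for reproducibility
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist2(p, c) for c in centroids)))
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[min(range(k), key=lambda i: dist2(p, centroids[i]))].append(p)
        # Update step: move each centroid to its cluster mean (keep it if the cluster is empty)
        centroids = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
    # Inertia: total squared distance from each point to its nearest centroid
    inertia = sum(min(dist2(p, c) for c in centroids) for p in points)
    return centroids, inertia

# Hypothetical unlabeled data with three visible groups
data = [(0, 0), (0, 1), (1, 0), (1, 1),
        (10, 10), (10, 11), (11, 10), (11, 11),
        (20, 0), (20, 1), (21, 0), (21, 1)]

# The algorithm cannot choose k for itself; a human inspects this curve for an "elbow"
inertias = {k: kmeans(data, k)[1] for k in range(1, 6)}
for k, inertia in inertias.items():
    print(f"k={k}: inertia={inertia:.1f}")
```

On this data, inertia falls steeply up to k = 3 and barely moves after that, which a person reviewing the output would read as three natural groups.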

In reinforcement learning, which differs from both supervised and unsupervised learning, humans set reward functions to guide the model’s trial and error, helping the model obtain the most suitable result for a given situation.
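The reward function is the main place where human judgment enters reinforcement learning. The minimal sketch below, assuming a toy five-cell corridor with the goal in the last cell, shows a human-written `reward` function (+1 for reaching the goal, a small penalty for every other step) driving tabular Q-learning. The environment, reward values and learning parameters are hypothetical choices made for illustration.

```python
import random

GOAL = 4           # hypothetical corridor with states 0..4; state 4 is the goal
ACTIONS = (-1, 1)  # move left or move right

def reward(next_state):
    """Human-designed reward: +1 for reaching the goal, a small penalty per step otherwise."""
    return 1.0 if next_state == GOAL else -0.01

def step(state, action):
    # Clamp movement to the corridor bounds
    next_state = min(max(state + action, 0), GOAL)
    return next_state, reward(next_state), next_state == GOAL

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap the episode length
            # Epsilon-greedy: explore occasionally, otherwise take the best-known action
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, r, done = step(state, action)
            # Q-learning update toward the reward plus the discounted best future value
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (r + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q

q = train()
# Greedy policy for every non-goal state: 1 means "move right"
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)
```

Because the agent optimizes whatever the reward function specifies, a poorly chosen reward produces a poorly behaved agent, which is why reward design warrants careful human review.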

3: HITLs in Testing and Optimizing AI and ML Models

Once a model is trained and deployed, human involvement shifts towards testing and ongoing optimization.

Some AIs require more intensive testing and monitoring than others, particularly where they affect human health, human rights, the environment, and other sensitive areas. Recent EU legislation, for instance, sets out a number of “high-risk” AI applications that require close human oversight and monitoring. This places even greater emphasis on humans-in-the-loop.

Today, businesses and organizations should take a risk-based approach to guide how they integrate human judgment and intuition into their machine learning value chains.

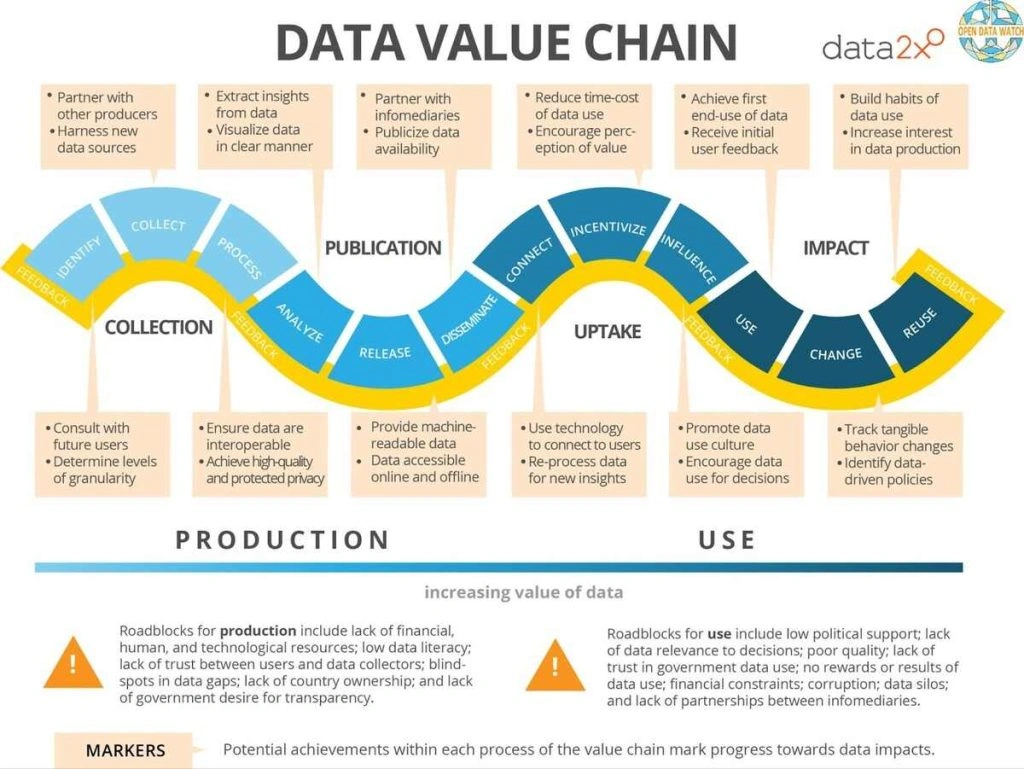

The data value chain

As we can see from the above diagram of the data value chain, human involvement is imperative in numerous AI and ML-related processes and functions.

Why Are Humans In The Loop Important?

AI and ML rely on humans.

Until we build self-healing AIs that can maintain themselves independently of the mutable systems they run on, such as computers and electrical appliances, humans-in-the-loop will remain an inevitable component of AI and ML.

Humans-in-the-loop are critical to the following processes:

1) For Training Models

HITLs create training data by labeling features and assigning class labels. This is absolutely essential for supervised machine learning models and involves everything from bounding box and polygon annotation to semantic segmentation, LiDAR annotation, video annotation, keypoint annotation, and more. HITLs can also perform feature engineering and data augmentation to derive more informative features or create a larger, more varied dataset from existing data.
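Because annotators sometimes disagree, labeling pipelines often collect several labels per item and combine them. The sketch below assumes a simple majority-vote scheme with a hypothetical two-thirds agreement threshold: items that clear it receive a consensus label, and the rest are escalated to a domain expert. The item IDs, labels and threshold are illustrative, not a standard.

```python
from collections import Counter

def aggregate_labels(annotations, min_agreement=2 / 3):
    """Majority-vote each item's labels; route low-agreement items to an expert.

    annotations: dict mapping item id -> list of labels from different annotators
    """
    consensus, needs_review = {}, []
    for item, labels in annotations.items():
        # Most frequent label and how many annotators chose it
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            consensus[item] = label
        else:
            needs_review.append(item)  # disagreement: escalate to a domain expert
    return consensus, needs_review

# Hypothetical labels from three annotators per image
annotations = {
    "img_001": ["car", "car", "car"],
    "img_002": ["car", "truck", "car"],
    "img_003": ["car", "truck", "bus"],
}
consensus, needs_review = aggregate_labels(annotations)
print(consensus)     # {'img_001': 'car', 'img_002': 'car'}
print(needs_review)  # ['img_003']
```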

2) For Tuning and Testing

Humans then have to tune and test the resulting model. This involves testing the model’s accuracy against test sets and performing hyperparameter tuning to negotiate the bias-variance trade-off. Most types of machine learning models require tuning prior to deployment.

3) After Deployment

Humans remain in the loop after the model is deployed. If humans are required to operate the model, this is sometimes called “human-in-the-loop design.” Stanford uses an example of a model designed to simplify legal documents into plain language. The model features a slider that enables the user to choose the level of simplification. This type of AI-assisted model requires a HITL. Another example is a medical diagnostics model that requires humans to make the last decision, either validating or denying the model’s predictions.
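Here is a minimal sketch of the medical diagnostics pattern, assuming a hypothetical `Case` record: the model only suggests a label, the review queue puts the least confident suggestions first, and a human reviewer always sets the final label, either confirming or overriding the model. The field names, labels and confidence values are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Case:
    case_id: str
    model_label: str                   # the model's suggested diagnosis
    confidence: float                  # the model's confidence in that suggestion
    final_label: Optional[str] = None  # set only by a human reviewer

def review_queue(cases):
    """Order cases so reviewers see the least confident predictions first."""
    return sorted(cases, key=lambda c: c.confidence)

def human_decide(case, reviewer_label):
    """Record the human's final decision; return True if it confirms the model."""
    case.final_label = reviewer_label
    return case.final_label == case.model_label

cases = [Case("A", "benign", 0.97), Case("B", "malignant", 0.62), Case("C", "benign", 0.81)]
queue = review_queue(cases)
print([c.case_id for c in queue])  # ['B', 'C', 'A']

# The reviewer overrides the model on case B
confirmed = human_decide(queue[0], "benign")
print(confirmed, queue[0].final_label)  # False benign
```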

4) Ongoing Monitoring

Most complex AI models are not ‘set and forget’. The more complex and high-risk the model, the more important humans are. For example, a recommendation system used to suggest playlists to Spotify users is fairly benign and low-risk, so constant monitoring is probably unnecessary beyond checking the model’s general performance. By contrast, models used to predict crime and potential criminals affect people’s human rights and require rigorous monitoring (read our post about AI policing here).
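Ongoing monitoring can be as simple as tracking accuracy on recent, human-verified outcomes. The sketch below assumes ground-truth labels eventually become available (for example, from a reviewer or a later outcome) and raises a flag when accuracy over a sliding window drops below a threshold. The `AccuracyMonitor` class, the window size and the threshold are hypothetical; suitable values depend on how risky the model is.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy on human-verified outcomes over a sliding window and flag drops."""

    def __init__(self, window=100, alert_below=0.85):
        self.results = deque(maxlen=window)  # most recent correct/incorrect outcomes
        self.alert_below = alert_below

    def record(self, prediction, verified_label):
        self.results.append(prediction == verified_label)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_human_review(self):
        acc = self.accuracy()
        return acc is not None and acc < self.alert_below

monitor = AccuracyMonitor(window=10, alert_below=0.8)
for _ in range(10):
    monitor.record("approve", "approve")
print(monitor.accuracy(), monitor.needs_human_review())  # 1.0 False

# Three wrong predictions push the oldest correct ones out of the window
for _ in range(3):
    monitor.record("approve", "deny")
print(monitor.accuracy(), monitor.needs_human_review())  # 0.7 True
```

The monitor doesn't fix anything on its own; it tells a human when to step in, in keeping with a risk-based approach to oversight.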

It’s worth mentioning that humans-in-the-loop are primarily defined as those involved in training, tuning, and testing, as per the machine learning loop or lifecycle. However, the end user can also be considered a human-in-the-loop, albeit in a slightly different way. Like many machine learning buzzwords, human-in-the-loop is not simple to define!

The Benefits of Humans In The Loop

Humans in the loop multiply the capabilities of ML and AI. Data labeling and annotation are pivotal to training an accurate and effective model, particularly when dealing with complex subject material. Data labelers often need to work alongside domain experts to create accurate, representative datasets that capture the necessary features and classes.

For example, creating training datasets from medical diagnostic images may require doctors, radiographers, or other health professionals who can accurately discern the features of different diseases, ensuring labels are placed appropriately.

After training, HITLs tune and test models to ensure their long-term performance. This helps reduce bias and mitigate risks.

Some benefits of humans in the loop include:

- Creating training and test data that correctly represents the ground truth.

- Performing feature engineering to boost datasets.

- Ensuring algorithms understand their inputs.

- Training and tuning the model.

- Negotiating the bias-variance trade-off.

- Monitoring and auditing models for long-term accuracy.

- Reducing bias and ensuring representative data.

- Navigating ongoing ethical issues.

- Ensuring safe and compliant AIs.

- Aligning a project’s goals with the end user.

- Investigating potential issues proactively and reactively.

This is by no means an exhaustive list. An experienced HITL team is essential to building effective, accurate, ethical and compliant AIs.

The Risks of Poor ML Decision-Making

There are numerous high-profile examples of poor decision-making in the ML cycle. Models have failed due to human oversights or failure to properly test, monitor, and audit performance.

One example is Apple’s sexist credit card. The ML algorithms behind it granted men higher credit limits than women, even when women earned more. The datasets fed to the algorithms were likely inaccurate or poorly constructed.

Apple’s controversial credit card

One potential explanation is that, in married couples, men are often more likely to take out joint loans in their own name, which boosts their credit scores.

If the datasets fail to take such nuances into account, the resulting model will be inaccurate. In this case, the result of that inaccuracy is bias and discrimination.
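A basic audit can surface this kind of disparity before a model ships. The sketch below compares approval rates across groups in a hypothetical sample and flags the model for human review when the lowest rate falls below 80% of the highest. That 0.8 cut-off borrows the “four-fifths rule” heuristic from US employment-selection guidance and is an assumption here, not a legal test for credit decisions; approval rates are also a simplification of the credit-limit issue described above.

```python
def approval_rates(decisions):
    """decisions: list of (group, approved) pairs -> approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparity_check(decisions, threshold=0.8):
    """Flag for human review if the lowest group's rate is below threshold x the highest."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest else 1.0
    return rates, ratio, ratio < threshold

# Hypothetical audit sample of model decisions
decisions = ([("men", True)] * 8 + [("men", False)] * 2
             + [("women", True)] * 5 + [("women", False)] * 5)
rates, ratio, flagged = disparity_check(decisions)
print(rates, round(ratio, 3), flagged)  # {'men': 0.8, 'women': 0.5} 0.625 True
```

A flag like this only shows that outcomes differ; working out why (for example, the joint-loan nuance above) still requires human investigation.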

There are many other examples too, such as bias issues in AI policing. The lack of diversity in datasets has also caused numerous issues that might have been mitigated with strong human-in-the-loop strategies.

Involving humans in sensitive and high-risk AIs is one of the most effective ways to reduce risk when dealing with models that demand high nuance.

How Do Humans Keep Control of AI?

AI is excellent at solving narrow problems at tremendous scale, but we have not yet created AIs capable of “artificial general intelligence” (AGI). AGI refers to AI intelligence that is broadly analogous to human intelligence, which requires skills and abilities that an AI can apply to cross-domain, multi-modal tasks.

For example, humans use some of the same abilities to speak as they do to sing, but currently, an AI trained to speak will function differently from one trained to sing, with little-to-no capability to ‘reskill’ from speaking to singing or vice versa.

AGI aims to go further, producing AI that learns and re-learns depending on the task put in front of it, with minimal human influence.

As we build increasingly complex AIs, the role of HITLs will change. Perhaps HITLs will become the ‘guardians’ of AI, who will monitor, audit, and inspect AI performance, verify compliance and possibly even enforce the law to shut down and destroy programs.

Who will police AIs in the future?

Numerous authorities are now calling for governments to regulate AI to ensure models aren’t left to make potentially life-changing decisions without close human inspection. With AGI and other forms of powerful AI, such regulation will only become more important.

AI and the End Users

While an AI will happily crunch data when left to its own devices, humans need to consider the end users – those who are intended to benefit from the AI. In some cases, the users are simply the research team training the model, which is especially common when models are trained for academic purposes (see our post on using AI to decipher ancient languages).

But usually, the end user is someone separate from the machine learning process. For example, the end user of an eCommerce recommendation system is the customer. The end user of a recruitment AI is the prospective employee, and the end user of a medical diagnostics model is the patient. The mechanisms of control we impose on AIs must scale with the domain and intent of the model.

“The nature of the control varies with the type of system and opportunity for intervention. As more reliance is placed on the technology itself to achieve the desired outcomes — safety, security, privacy, etc. — the more stringent, and complex, are the requirements for assurance.” – Professor John McDermid OBE FREng, Director of the Assuring Autonomy International Programme

In short, keeping humans in the loop helps mitigate risks when training and deploying high-risk AI and ML models. For example, an AI-assisted medical diagnostics model might make a diagnosis prediction, but doctors and ML practitioners will work together to ensure the short and long-term accuracy of the model.

HITLs at Aya Data

Aya Data provides human-in-the-loop services across the machine learning project lifecycle.

Our skilled data labelers are experienced in the domains of natural language processing (NLP) and computer vision (CV). We understand the role of human intuition in machine learning, including the intersection of social and ethical issues that shape sensitive projects.

Aya Data has proven experience in sectors and industries where privacy, anonymity, and ethical issues are imperative. For example, Aya Data can collect anonymous and compliant medical images in collaboration with the Department of Radiology at the University of Ghana Medical Center (UGMC), and agricultural images through a partnership with Demeter Ghana Ltd.