Mind-reading AI may seem like a dystopian concept, but it could transform the lives of people who have lost the ability to speak because of paralysis or brain injury.

Electroencephalography (EEG), which records the brain’s electrical signals through electrodes placed on the scalp, has been used for about a decade to help people with paralyzing stroke injuries or completely locked-in syndrome (CLIS).

However, most research stops short of true mind reading: translating complex thoughts into information without the person having to move a muscle.

That’s changing, and today, machine learning is taking mind reading to the next level.

Mind-Reading AI

To read someone’s mind, you need a signal. Reading body language is one way to access someone’s mind, but what about extracting thoughts and concepts straight from the brain?

To do that, you need to take signals from the brain itself, which involves measurement technologies such as:

- Magnetoencephalography (MEG), which measures the magnetic fields generated by electrical activity in the brain. MEG can measure absolute neuronal activity.

- Electroencephalography (EEG), which measures the brain’s electrical activity through electrodes on the scalp.

- MRI, specifically functional MRI, which tracks changes in blood flow and oxygenation in the brain to gauge activity.

All three technologies have been paired with machine learning in attempts to ‘read minds’ from brain activity. The general idea is that if an algorithm can learn to map brain activity to thoughts in a training dataset, it should be able to generalize that mapping to new thoughts.

The concept is quite simple and fits machine learning well, as researchers can collect data using EEG, MEG and MRI to build the dataset to train the model.
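To make the train-then-generalize idea concrete, here is a minimal sketch of one of the simplest possible decoders, a nearest-centroid classifier. It is an illustrative stand-in, not the method used by any study discussed here: the three-number feature vectors and the “yes”/“no” labels are hypothetical placeholders for preprocessed EEG, MEG or MRI features.

```python
import math


def train_centroids(samples):
    """Average the feature vectors recorded for each labeled thought."""
    sums, counts = {}, {}
    for features, label in samples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def decode(centroids, features):
    """Predict the label whose centroid lies closest (Euclidean distance) to a new recording."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical 3-channel feature vectors standing in for real brain recordings.
training = [
    ([1.0, 0.2, 0.1], "yes"),
    ([0.9, 0.1, 0.2], "yes"),
    ([0.1, 0.8, 0.9], "no"),
    ([0.2, 0.9, 0.8], "no"),
]
centroids = train_centroids(training)
print(decode(centroids, [0.95, 0.15, 0.1]))  # a new, unseen recording → yes
```

Real studies use far richer models and many hours of data, but the shape is the same: learn a mapping from labeled recordings, then apply it to recordings the model has never seen.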

The catch is that these non-invasive techniques aren’t precise enough to capture complex neural activity, though that could change as new brain measurement technologies are developed.

Using MEG and EEG to Read Minds

Meta used MEG and EEG datasets from 169 people to train an AI that could deduce which individual words people were listening to, choosing from a list of 793 words.

The limitation is that the AI produced a shortlist of 10 candidate words, and the word the person was actually hearing appeared on that list only 73% of the time. Still, that is enough as a proof of concept that AI can ‘hear thoughts’ using non-invasive measurements.
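The 73% figure is a “top-10 accuracy”: a trial counts as a hit if the true word appears anywhere among the model’s 10 guesses. The minimal sketch below shows how that metric is computed, along with the chance baseline for picking 10 words at random from 793 (10/793, roughly 1.3%). That baseline is what makes 73% meaningful. The toy guess lists are hypothetical, and `k=2` is used only to keep them short.

```python
def top_k_accuracy(ranked_guesses, true_words, k=10):
    """Fraction of trials whose true word appears among the model's first k guesses."""
    if not true_words:
        raise ValueError("need at least one trial")
    hits = sum(1 for guesses, truth in zip(ranked_guesses, true_words) if truth in guesses[:k])
    return hits / len(true_words)


def chance_top_k(vocabulary_size, k=10):
    """Expected top-k accuracy if the k guesses were picked at random from the vocabulary."""
    return min(k, vocabulary_size) / vocabulary_size


# Hypothetical toy data: three trials, each with a ranked list of guesses.
ranked = [["cat", "dog", "sun"], ["sun", "cat", "dog"], ["dog", "sun", "cat"]]
truths = ["dog", "dog", "dog"]
print(top_k_accuracy(ranked, truths, k=2))  # hits on 2 of the 3 trials
print(f"chance for top-10 of 793 words: {chance_top_k(793):.1%}")  # → 1.3%
```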

In terms of MRI-based models, researchers in 2017 used hours of data from participants watching video clips to train a model that could predict the category of video someone was watching with 50% accuracy. There were 15 categories, such as “airplane,” “bird” and “exercise.”
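With 15 categories, random guessing would be right about 1 time in 15 (roughly 6.7%), so 50% is well above chance. One standard way to check that such a gap isn’t luck is an exact one-sided binomial test, sketched below. The article doesn’t report how many clips were tested, so the 100 trials here are hypothetical, and the test assumes all 15 categories were equally likely.

```python
from math import comb


def binomial_p_value(successes, trials, chance):
    """One-sided P(X >= successes) for X ~ Binomial(trials, chance):
    the probability of doing at least this well by pure guessing."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )


chance = 1 / 15        # 15 categories, assumed equally likely
trials = 100           # hypothetical number of test clips
correct = trials // 2  # 50% accuracy
print(f"chance accuracy: {chance:.1%}")
print(f"p-value: {binomial_p_value(correct, trials, chance):.1e}")
```

A tiny p-value says the decoder is doing something real; it does not say how useful 50% accuracy is in practice.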

The study also tried something more ambitious: reconstructing participants’ mental images pixel by pixel. Some of the reconstructions do resemble the original images, at least in their rough shape.
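“Resemble” can be judged by eye, but a simple quantitative check is the pixel-wise Pearson correlation between an original image and its reconstruction: 1 means a perfect linear match, 0 means no relationship. The sketch below is illustrative only. The 3×3 grayscale images are hypothetical, and it is not claimed to be the metric used in the 2017 study.

```python
import math


def pixel_correlation(original, reconstruction):
    """Pearson correlation between two same-sized grayscale images given as lists of rows."""
    a = [p for row in original for p in row]
    b = [p for row in reconstruction for p in row]
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("correlation is undefined for a uniform image")
    return cov / (norm_a * norm_b)


# Hypothetical 3x3 images: a sharp shape and a blurry reconstruction of it.
original = [[0, 0, 1], [0, 1, 1], [1, 1, 1]]
blurry = [[0.2, 0.3, 0.7], [0.3, 0.6, 0.8], [0.7, 0.8, 0.9]]
print(round(pixel_correlation(original, blurry), 2))
```

Because correlation ignores overall brightness and contrast, a blurry reconstruction that gets the rough shape right still scores high. That matches the kind of resemblance described above.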

In the future, such technology could visually translate images from the brains of those who have lost their ability to speak.

AI For Image Generation

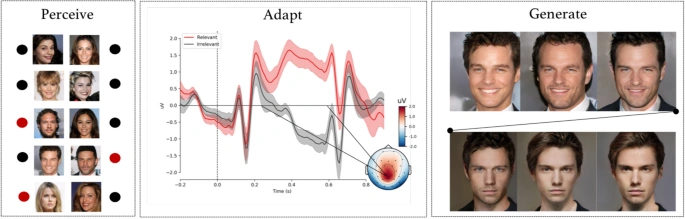

A third study in 2020 involved participants viewing hundreds of AI-generated images of faces while researchers recorded their EEG signals. The participants were instructed to look for specific faces, and a neural network inferred from their brain activity whether each image matched the faces they had been told to look for.

The resulting model could predict the face the participants were thinking of, and could also generate images based on participants’ reactions to the images they were shown.

In other words, the model generates new images from individuals’ responses to existing ones. For example, if participants concentrated on non-smiling faces, the model could use that to generate a new non-smiling face.

“The technique does not recognize thoughts, but rather responds to the associations we have with mental categories,” said the study’s co-author Michiel Spapé.
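The article doesn’t spell out how brain responses are turned into a new face. One plausible mechanism, assumed here for illustration, is to treat each face shown as a point in an image generator’s latent space, score each image by how strongly the EEG classifier flagged it as a match, and average the latent vectors of the flagged images. Feeding that average back into the generator would yield a face that shares their common features. The latent vectors, relevance scores, threshold and the function name `combine_relevant_latents` are all hypothetical, and no actual image generator is included.

```python
def combine_relevant_latents(latents, relevance, threshold=0.5):
    """Average the latent vectors of the images whose (hypothetical) EEG relevance score
    meets the threshold."""
    chosen = [z for z, score in zip(latents, relevance) if score >= threshold]
    if not chosen:
        raise ValueError("no image passed the relevance threshold")
    dim = len(chosen[0])
    return [sum(z[i] for z in chosen) / len(chosen) for i in range(dim)]


# Hypothetical 2-D latent space: axis 0 loosely means "smiling", axis 1 is some other trait.
latents = [[1.0, 0.0], [0.8, 0.2], [-0.9, 0.1], [-1.0, -0.1]]
# Hypothetical classifier output while the participant looked for non-smiling faces.
relevance = [0.1, 0.2, 0.9, 0.8]
target = combine_relevant_latents(latents, relevance)
print(target)  # this vector would then be passed to the image generator
```

This also fits Spapé’s point: nothing here decodes a thought directly. The system only learns which images a person’s brain reacted to and interpolates between them.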

AI To Extract Words and Sentences

Perhaps the most successful of these attempts was a recent study at the University of Texas at Austin, where researchers used functional MRI (fMRI) data from three language-processing brain networks: the prefrontal network, the classical language network, and the parietal-temporal-occipital association network.

Participants listened to 16 hours of narrated stories, which provided the data to train the model on sequences of words. Unlike the studies above, this one aimed to decode the meaning of entire concepts or sequences of words rather than identify specific words.

Here’s an example:

One story participants heard in the scanner went as follows: “that night, I went upstairs to what had been our bedroom and not knowing what else to do I turned out the lights and lay down on the floor”.

The AI translated the brain patterns produced when participants listened to this passage as: “we got back to my dorm room I had no idea where my bed was. I just assumed I would sleep on it but instead I lay down on the floor”.
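A quick word count shows that the decoder captured the gist rather than the wording. The minimal sketch below computes the Jaccard overlap between the distinct words of the two quoted passages: the number of words they share divided by the number of distinct words across both. Only 8 of 42 distinct words are shared (about 0.19), even though a reader would say the two passages describe similar scenes. Jaccard is an illustrative choice here, not a metric taken from the study.

```python
import re


def word_overlap(a, b):
    """Jaccard similarity: shared distinct words divided by all distinct words."""
    words_a = set(re.findall(r"[a-z']+", a.lower()))
    words_b = set(re.findall(r"[a-z']+", b.lower()))
    return len(words_a & words_b) / len(words_a | words_b)


heard = ("that night, I went upstairs to what had been our bedroom and not knowing "
         "what else to do I turned out the lights and lay down on the floor")
decoded = ("we got back to my dorm room I had no idea where my bed was. I just assumed "
           "I would sleep on it but instead I lay down on the floor")
print(f"{word_overlap(heard, decoded):.2f}")  # → 0.19
```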

The model draws on its training data to infer meaning, and it does a good job of recovering the gist of new brain data even when the exact words differ.
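The article doesn’t describe the decoder’s internals. A common way to decode word sequences, sketched here purely as an assumption, is beam search. A language model proposes plausible next words, an “encoding model” predicts the brain response each candidate would evoke, and only the few candidates whose predictions best match the recording are kept. Both models below are tiny hypothetical lookup tables (`NEXT_WORDS`, `WORD_RESPONSE`). The one-vector-per-word recording is also a simplification, since fMRI responses are slow and blur several words together.

```python
# Hypothetical language model: which words may follow each word ("<s>" marks the start).
NEXT_WORDS = {
    "<s>": ["i", "we"],
    "i": ["lay", "went"],
    "we": ["went", "slept"],
    "lay": ["down"],
    "went": ["upstairs", "home"],
    "slept": ["late"],
}

# Hypothetical encoding model: the 2-D brain response each word is predicted to evoke.
WORD_RESPONSE = {
    "i": (1.0, 0.0), "we": (0.9, 0.1), "lay": (0.0, 1.0), "went": (0.2, 0.8),
    "slept": (0.1, 0.9), "down": (0.5, 0.5), "upstairs": (0.6, 0.6),
    "home": (0.9, 0.9), "late": (0.4, 0.4),
}


def score(words, recorded):
    """Negative squared error between predicted and recorded responses, word by word."""
    return -sum(
        (p - r) ** 2
        for word, step in zip(words, recorded)
        for p, r in zip(WORD_RESPONSE[word], step)
    )


def beam_search(recorded, beam_width=2):
    """Grow word sequences one word per recorded step, keeping only the best-scoring few."""
    beams = [[]]
    for _ in recorded:
        candidates = [
            beam + [word]
            for beam in beams
            for word in NEXT_WORDS.get(beam[-1] if beam else "<s>", [])
        ]
        if not candidates:
            break
        candidates.sort(key=lambda words: score(words, recorded), reverse=True)
        beams = candidates[:beam_width]
    return beams[0]


# Hypothetical recording: one response vector per word position.
recorded = [(0.95, 0.05), (0.05, 0.95), (0.5, 0.5)]
print(" ".join(beam_search(recorded)))  # → i lay down
```

Keeping several candidates alive at each step lets the decoder recover from an early tie (here, “i” and “we” fit the first step equally well). This is also why such a decoder tends to produce a plausible paraphrase rather than the exact sentence.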

The researchers agree that such studies are limited primarily by the level of detail in the available brain data. Non-invasive measurements capture only so much, so even the most sophisticated model would likely fall short of what true mind reading requires.

Still, the concept has been demonstrated. Researchers hope these technologies will soon be able to read the minds of people with brain injuries and diseases such as ALS, giving a voice to their thoughts. This builds on other research, such as an AI model that enabled a paralyzed man to feed himself with a knife and fork using his thoughts alone.

Many might assume that advanced mind-reading AI is a terrible idea, but current technology only works after being trained on hours of data, and it remains limited by what non-invasive recordings can capture.

Summary: Can AI Read Our Minds?

Sort of; it depends on how you define mind reading. AI can make decent guesses about thoughts, ideas, concepts and words, and its accuracy is likely to improve.

Right now, these technologies are limited by non-invasive brain data collected via EEG, MEG and MRI.

If we can extract higher-quality or more detailed data with these techniques, or develop new ways of extracting data from the human brain, accurate mind-reading AI could well become a reality.