‘Ground truth’ is a somewhat confusing machine learning buzzword. Discussions of the ground truth naturally touch on bias, representation, and objectivity, which makes the concept worth examining.

One of the main pitfalls of AI is that it’s often assumed to be inherently objective. The cold, objective rationalism of AI manifests in sci-fi characters and robots like Walter and David in the Alien films or even the machines in The Matrix. In these stories, the characters often behave as they do because they have a superior understanding of the ground truth – they are capable of superhuman objectivity.

This presents an epistemological and philosophical conundrum for AI design that has not gone unstudied in modern philosophy, as Google’s Cassie Kozyrkov states:

“AI cannot set the objective for you — that’s the human’s job” and “machine learning’s “right” answers are usually in the eye of the beholder, so a system that is designed for one purpose may not work for a different purpose.”

What Is Ground Truth in AI?

Ground truth in machine learning and AI is a term borrowed from statistics, which in turn borrowed it from meteorology. In meteorology and remote sensing, the ground truth refers to objective measurements taken from the ground, which are then used to validate and calibrate remote sensors and other technologies. A ‘pixel’ of an image is compared to empirical measurements taken on-site to help supervise the classification of images and features, lowering error rates.

In machine learning, the meaning of ground truth is similar.

The ground truth is the fundamental truth underpinning training and testing data, and the reality you want your eventual model to predict. Ground truth in AI and ML encapsulates the fundamental nature of the problem that is the target of the machine learning model and is reflected by the datasets and the eventual use case.

The ground truth of your dataset should be empirically and methodologically robust – you cannot ‘train out’ fundamental flaws in your training and testing data if your datasets fail to represent the ground truth adequately.

In other words, if your datasets essentially incorporate falsehoods, so will your model.

The model will inherit the subjective decisions of its makers, and these decisions can have both intended and unintended consequences.

Subjectivity in AI: Cat Classification Example

Here is an example of how AI systems are built around subjective interpretations that change with the nature of the problem space.

Below are six images; the goal is to classify each as simply “cat” or “not cat”. Images 1, 3, and 4 are easy to classify as cats, but 6 often poses a conundrum: it is obviously a cat, but the gut instinct might be to hesitate over whether it counts as a ‘big cat’. While big cats are cats, they’re also defined as the five living members of the genus Panthera: the lion, tiger, jaguar, leopard, and snow leopard. So, does this tiger really belong with the domestic cats?

According to the task, there is no option to define 6 as anything but a ‘cat’, which places it in the same category as its smaller, domesticated relatives. Suppose the model is designed simply to distinguish cats from other animals. In that case, labeling the tiger as a ‘cat’ is sufficient. But consider a model whose purpose is to classify which of these animals is dangerous to keep as a pet in its adult form. Then, labeling the tiger in the same category as domesticated cats is clearly wrong.

As shown, the purpose of the model and its related data is informed by subjective decisions – AI ‘bakes in’ subjective interpretations.
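The point can be made concrete with a minimal Python sketch. It assumes only what the example states (images 1, 3, and 4 are domestic cats and image 6 is a tiger); the species strings, function names, and the "dangerous"/"safe" label set are hypothetical choices made for illustration. The same images receive different "ground truth" labels depending on which task the labels are meant to serve.

```python
# Species shown in the images the example describes (1, 3, 4 are domestic
# cats, 6 is a tiger). The other images are omitted because the text does
# not say what they show.
IMAGES = {
    1: "domestic cat",
    3: "domestic cat",
    4: "domestic cat",
    6: "tiger",
}

# Hypothetical task definitions: each one encodes a human decision
# about what the labels are supposed to mean.
CAT_SPECIES = {"domestic cat", "tiger"}
DANGEROUS_AS_PET = {"tiger"}


def label_for_cat_task(species: str) -> str:
    """Task A: is the animal a cat at all?"""
    return "cat" if species in CAT_SPECIES else "not cat"


def label_for_pet_safety_task(species: str) -> str:
    """Task B: is the adult animal dangerous to keep as a pet?"""
    return "dangerous" if species in DANGEROUS_AS_PET else "safe"


def build_labels(images, labeler):
    """Apply one task's labeling rule to every image."""
    return {image_id: labeler(species) for image_id, species in images.items()}


cat_labels = build_labels(IMAGES, label_for_cat_task)
pet_labels = build_labels(IMAGES, label_for_pet_safety_task)

for image_id in IMAGES:
    print(image_id, cat_labels[image_id], pet_labels[image_id])
```

Under task A the tiger shares a label with the domestic cats; under task B it does not. Neither label set is wrong in itself, but a model trained on one would be unsuitable for the other.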

An Example of Ground Truth: Maize Crop Disease

Here’s a real-world example of how ML teams can negotiate and navigate the ground truth.

Aya Data worked with Demeter Ghana, a sustainability and agricultural services provider, to train an image classification model that detects maize diseases. You can see the case study here. This problem is underpinned by objective, measurable criteria – the diseases themselves. Most maize diseases show up as lesions and markings on the leaves of the plants, but it’d be crude to assume that a lesion of any kind is definitely a maize disease (e.g., the marking may be caused by sun damage or trauma). Further, there are many maize diseases, and most feature their own trademark lesions.

Before creating the dataset, Aya Data worked with skilled agronomists in Ghana to establish the ground truth. This enabled us to undertake an evaluation of the diseases and their respective characteristics.

We were well-positioned to source relevant and accurate data and check that images encapsulated the ground truth. In other words, we were able to secure the ground truth in our dataset by working with those with domain knowledge.

It’s still important to recognize that this process involves subjective judgment and interpretation – without studying the leaves in a lab environment, growing cultures, etc., it’s impossible to discern each disease with 100% accuracy. However, the subjective judgment of those domain specialists was sufficient for the use case.

In other situations, e.g., medical imaging, it may also be necessary to correlate images with lab results or other empirical data to establish that labels truly represent the ground truth.

Ground Truth and Bias

Ground truth and bias are intrinsically linked. While the ground truth may be underpinned by absolute truths, e.g., measurements taken with perfectly calibrated scientific instruments, this is usually not the case. In many scenarios, the ground truth is simply what the project owners deem ‘good enough’ to create a well-functioning model.

Failing to take the ground truth seriously, in combination with other forms of bias, can lead to the failure of an AI or ML project.

Amazon fell into this trap when training recruitment AIs: the eventual models were found to be biased against women because they were trained on vast quantities of historical data in which men were over-represented, and that data was wrongly deemed the ground truth. Unfortunately, the “ground truth” here (encapsulated by the historical over-representation of men in tech roles) was simply incorrect for the use case, not objective, and deeply influenced by poor subjective judgments.
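One simple check before treating historical labels as ground truth is to compare how often each group receives the positive label. The sketch below is illustrative only: the records are invented toy data, the group names and the `positive_rate_by_group` helper are hypothetical, and it does not describe Amazon's data or methods. A gap between groups does not prove the labels are biased, but it is a signal that they deserve scrutiny before a model learns from them.

```python
from collections import defaultdict

# Invented toy records, NOT real data: (group, historical "hired" label).
HISTORICAL = [
    ("men", 1), ("men", 1), ("men", 0), ("men", 1),
    ("women", 0), ("women", 1), ("women", 0), ("women", 0),
]


def positive_rate_by_group(records):
    """Return the share of positive labels within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        positives[group] += label
    return {group: positives[group] / totals[group] for group in totals}


rates = positive_rate_by_group(HISTORICAL)
for group, rate in rates.items():
    print(f"{group}: {rate:.2f}")
```

If the positive rate differs sharply between groups, a model trained to reproduce these labels will tend to reproduce the same pattern, which is why the labels themselves need to be questioned first.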

When AI is designed to make important human-centric decisions, the ground truth is especially important. For example, consider an AI system that labels transactions as fraudulent. If such a model is created with poorly conceived fraud definitions, then the system might falsely accuse individuals of fraud or financial crime.

As a result of these kinds of situations, measuring model bias where the ground truth is vague or absent has become a critical issue in machine learning. A paper authored by Google data engineers and AI ethics scholars argues:

“If the ground truth annotations in a sparsely labelled dataset are potentially biased, then the premise of a fairness metric that “normalizes” model prediction patterns to ground truth patterns may be incomplete”.

The authors also highlight how ground truth is heavily dependent on the labels chosen for the project.

Finding the Ground Truth In Machine Learning

While it may be tempting to assume that the ground truth is simple to determine, this is often not the case. If there is a fatal misunderstanding over the ground truth, the eventual model will fail. The ground truth also cuts to the heart of issues of bias and representation – what if the objective reality is not encapsulated by a pre-existing dataset deemed to be the ground truth?

By definition, the ground truth is determined by measurement and empirical evidence rather than inference and subjective judgment. However, when it comes to unstructured data, establishing the ground truth is not a simple task, and it requires an in-depth understanding of the problem.

For example, it’s often not enough to have a few individuals ‘judge’ a dataset as objectively representative, especially if they aren’t qualified in that domain.

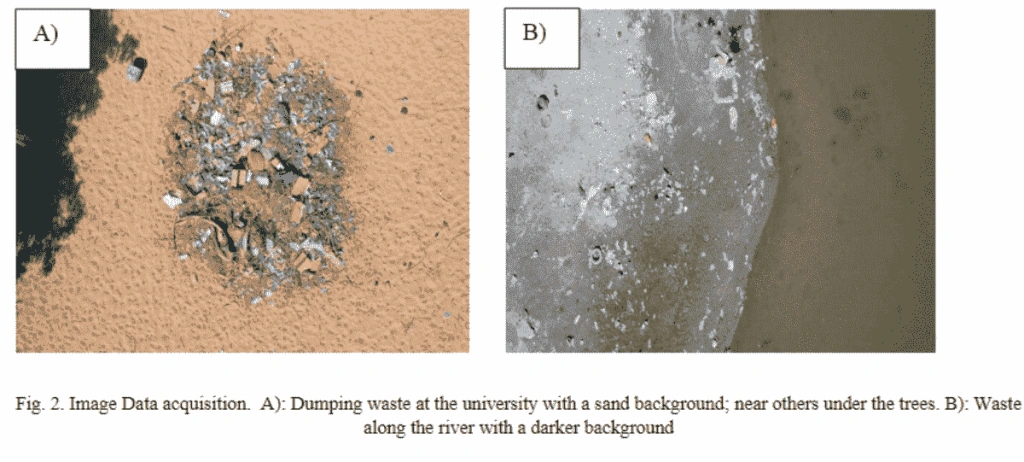

Here’s an example: researchers trained an unmanned drone to detect illegal dumping, but found that tree cover and other unforeseen obscurities caused model inaccuracy. If illegal dumping frequently occurs under tree cover, then training a model on un-obscured images of rubbish will fail to produce accurate results when deployed.

A machine learning team simply instructed to ‘train a model to detect illegal dumping’ may have no idea that illegal dumping frequently occurs under tree cover, and thus, any training dataset must contain images of this to encapsulate the ground truth.
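A rough way to catch this kind of gap is to compare how often a condition appears in the training set with how often it appears in a sample from the deployment environment. The sketch below assumes each image carries a hypothetical `tree_cover` metadata flag; the helper names, the toy counts, and the `tolerance` threshold are all illustrative choices, not details from the drone study.

```python
from collections import Counter


def condition_share(samples, key):
    """Return the fraction of samples having each value of a metadata field."""
    counts = Counter(sample[key] for sample in samples)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


def coverage_gaps(train, field, key, tolerance=0.2):
    """List conditions that are more common in the field than in training.

    A condition is reported when its share in the field sample exceeds its
    share in the training set by more than `tolerance`.
    """
    train_share = condition_share(train, key)
    field_share = condition_share(field, key)
    gaps = {}
    for value, share in field_share.items():
        diff = share - train_share.get(value, 0.0)
        if diff > tolerance:
            gaps[value] = round(diff, 2)
    return gaps


# Invented toy metadata: 10% of training images are under tree cover,
# versus 50% of a small sample gathered where the model will be deployed.
train = [{"tree_cover": False}] * 9 + [{"tree_cover": True}] * 1
field = [{"tree_cover": False}] * 5 + [{"tree_cover": True}] * 5

gaps = coverage_gaps(train, field, "tree_cover")
print(gaps)
```

A check like this only works if someone knew to record tree cover in the first place, which is exactly where domain knowledge comes in.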

Similarly, in Aya Data’s maize crop disease example, the ground truth is encapsulated by the crop diseases. Therefore, the dataset must depict those crop diseases with maximal objective accuracy. It’s not sufficient to simply label an imperfect maize leaf as ‘diseased’. Damage caused to a leaf may be arbitrary or caused by something unrelated to diseases, such as water or sun damage.

While it’s not always possible to foresee such nuances, machine learning teams must work collaboratively to define the practical problems they’re likely to encounter before sourcing images. This might include launching further research into the problem or working with domain specialists to establish the ground truth.

Summary: What You Can Do

AI and ML are not pure, objective sciences. While algorithms and equations supply logic to the discipline, humans are the ones pulling the strings. In supervised machine learning, the model is a semi-autonomous ‘puppet’ that inherits much of the subjective faculties of its human designers.

Understanding the human subjectivity of AI systems is crucial for building systems that really work for people. After all, many of the most exciting impacts of AI and ML benefit real people. To help people through the use of ML, it’s essential to consider the field as broader than algorithms and computing power – this is a discipline that extends into modern philosophy, ethics, and epistemology.

The supposed objectivity of AI is too often taken for granted. AI systems are better understood via a series of questions that emphasize the need for nuance regarding subjectivity, objectivity, and the ground truth. When planning, building, or researching AI and ML models, consider the following questions:

- What teams/collaborators are involved in building the system?

- What are their subjective goals?

- How have they defined the ground truth in their datasets?

- Would others agree with their definitions of the truth?

- Who/what is the application/system intended to benefit?

- Was domain knowledge applied?

- Do labelers/annotators have domain training/knowledge to ensure the ground truth is represented/replicated in datasets?

- How could mistakes manifest? (e.g., some ML projects have resulted in criminal and civil offenses, prejudice, and discrimination)