Generative AI writes, summarises, and even debates – but it doesn’t actually “know” anything beyond the data it was trained on. It’s a perennial problem.

Large language models (LLMs) generate text by predicting the most probable next word, but without access to real-time or domain-specific information, they can produce errors, outdated answers, and hallucinations.

An AI model trained on data up to 2023 has no clue what happened last week in financial markets or what the latest medical research says. Retrieval-augmented generation (RAG) is designed to bridge that gap between general knowledge and real-world applicability.

RAG fundamentally changes how AI systems operate. Instead of pulling answers from a static memory, RAG-enabled models retrieve relevant external information before generating responses.

This article examines where RAG stands today, how industries are using it, and which challenges remain in realising its benefits while managing its risks.

How RAG Works and Why It Matters

At its core, RAG is about making AI more reliable. Rather than relying solely on what it has “memorised” from its training data, a RAG system fetches relevant information at query time and passes it to the model as context for its response.

The idea of retrieval-based AI isn’t particularly new, but it wasn’t until 2020 that researchers from Meta AI (then Facebook AI Research), University College London, and New York University formalised it under the name retrieval-augmented generation.

By 2023, enterprise AI applications turned to RAG to boost accuracy and keep responses up to date – without the cost and complexity of retraining models.

So, instead of rebuilding AI from the ground up, companies simply update the databases it retrieves from, ensuring real-time relevance with less effort.
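To make the "update the database, not the model" idea concrete, here is a minimal sketch. The `KnowledgeStore` class, its keyword-overlap search, and the leave-policy text are all hypothetical stand-ins for a real document store and real retrieval; the point is only that changing a stored document changes what gets retrieved, with no model retraining involved.

```python
import re
from dataclasses import dataclass, field


def tokens(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for real query understanding."""
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class KnowledgeStore:
    """Hypothetical in-memory store standing in for a real document database."""
    documents: dict[str, str] = field(default_factory=dict)

    def upsert(self, doc_id: str, text: str) -> None:
        # Adding or replacing a document is the only "update" step required.
        self.documents[doc_id] = text

    def search(self, query: str) -> list[str]:
        # Naive keyword overlap; a production system would use vector search.
        query_terms = tokens(query)
        scored = [
            (len(query_terms & tokens(text)), text)
            for text in self.documents.values()
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for score, text in scored if score > 0]


store = KnowledgeStore()
store.upsert("leave-policy", "Annual leave allowance is 25 days.")
before = store.search("What is the annual leave allowance?")

# The policy changes: only the stored document is replaced.
store.upsert("leave-policy", "Annual leave allowance is 28 days.")
after = store.search("What is the annual leave allowance?")

print(before[0])
print(after[0])
```

The same query returns the old policy before the update and the new one afterwards, which is the behaviour a generator downstream would inherit.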

The Main Components of RAG

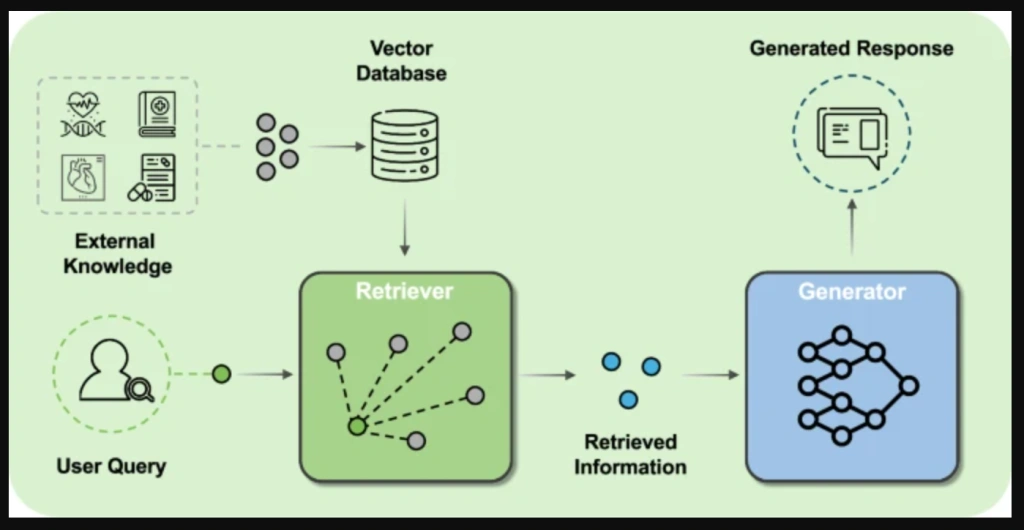

RAG combines retrieval and generation, allowing models to access external knowledge sources. It consists of three key components:

- Retrieval Engine: Searches a structured database, document repository, or vector index to find relevant information based on the user’s query.

- Embedding Model: Converts both the query and stored data into numerical representations (vectors), so relevant passages can be matched by similarity rather than by exact keywords.

- Generator (LLM): Synthesises the retrieved information with its existing knowledge to create a coherent, context-aware response.

Together, these components work to ground AI responses in verifiable external sources.
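The three components above can be sketched end to end in a few lines. This is an illustrative toy, not a production design: `embed` uses simple word counts where a real system would use a learned embedding model, `retrieve` does a brute-force cosine-similarity scan where a real system would query a vector index, and `generate` only assembles the prompt an LLM would receive rather than calling one. All function names and the sample corpus are assumptions made for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding model: word counts instead of a learned vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Toy retrieval engine: rank every document by similarity to the query."""
    query_vector = embed(query)
    ranked = sorted(
        corpus,
        key=lambda doc: cosine(query_vector, embed(doc)),
        reverse=True,
    )
    return ranked[:k]


def generate(query: str, context: list[str]) -> str:
    """Placeholder generator: returns the prompt an LLM would be given."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


corpus = [
    "The refund window is 30 days from delivery.",
    "Support is available on weekdays from 9am to 5pm.",
    "Shipping to Europe takes five working days.",
]
question = "How many days is the refund window?"
top = retrieve(question, corpus, k=1)
prompt = generate(question, top)
print(prompt)
```

Here the refund passage is ranked first because it shares the most terms with the question, and it is then placed in the prompt so the generator can ground its answer in it.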

Understanding RAG: An Analogy

There are many analogies for understanding RAG. Here’s our take:

Imagine a tour guide leading a group through a historic city. They’re experienced, well-trained, and have memorised hundreds of facts about the landmarks, architecture, and cultural history.

For most questions, they have an answer ready – why this building was constructed, who designed it, what historical events took place there. Their knowledge is rich and well-practised, just like an LLM that has been trained on massive datasets.

But then a tourist asks: “Why is that building under renovation?” or “What was the outcome of last month’s archaeological dig?”

The guide wasn’t trained on that information. They might make an educated guess based on past renovations or historical patterns, but without access to real-time updates, their answer is limited to what they already know.

Now, imagine that same guide is equipped with a live feed from historians, city planners, and local news sources. Instead of relying purely on what they remember, they can pull in the latest information on the spot – delivering precise, up-to-date answers instead of educated guesses.

That’s what RAG does for AI. It retrieves relevant, real-time knowledge before generating a response, ensuring the AI isn’t just repeating what it was trained on but actively incorporating the most current, context-specific information available.

Why RAG Matters Now More Than Ever

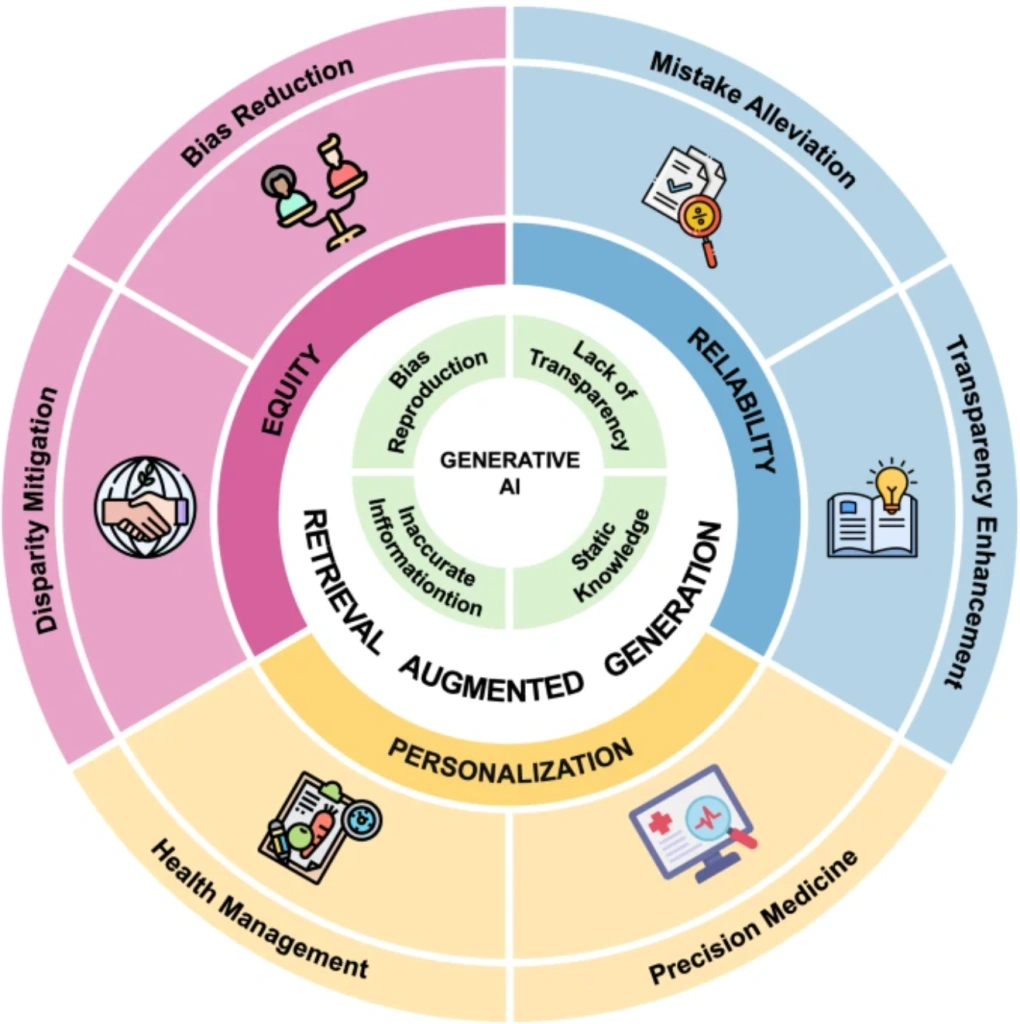

LLMs, for all their power, struggle when dealing with highly specialised or frequently changing information. RAG solves that by enabling AI to:

- Provide real-time answers in dynamic fields like finance, healthcare, and law.

- Reduce hallucinations by anchoring responses in verifiable sources.

- Enhance transparency by allowing AI to cite its sources.

- Adapt to new knowledge without costly retraining.

For many AI applications where context and accuracy are vital, this is transformative:

- A legal AI assistant can now retrieve relevant case law before answering a legal question.

- A healthcare chatbot can pull the latest medical guidelines before making a recommendation.

- A corporate AI tool can pull company-specific policies to provide accurate internal support.

With RAG, AI is connected to context and up-to-date knowledge, which reduces the chance of false or erroneous results.
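One common way to support source citation is to number the retrieved passages in the prompt and ask the model to refer to those numbers. The sketch below assumes a hypothetical `Passage` type and prompt wording; the source identifiers and passage texts are invented for illustration, and no real LLM is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str  # hypothetical identifier, e.g. a document title
    text: str


def build_cited_prompt(question: str, passages: list[Passage]) -> str:
    """Number each passage so the model can cite it as [1], [2], ..."""
    numbered = "\n".join(
        f"[{i}] {p.text} (source: {p.source})"
        for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the numbered passages and cite them like [1].\n"
        "If the passages do not contain the answer, say so.\n\n"
        f"{numbered}\n\nQuestion: {question}"
    )


passages = [
    Passage("hr-handbook-2025", "Employees receive 28 days of annual leave."),
    Passage("it-policy", "Laptops are refreshed every three years."),
]
prompt = build_cited_prompt("How much annual leave do I get?", passages)
print(prompt)
```

Because each citation number maps back to a known source, a reader (or an automated check) can verify the answer against the passage it claims to rely on.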

The Current State of RAG: Where It’s Making an Impact Today

RAG is a fundamental pillar of enterprise AI architecture. Major players lean on it to build AI systems grounded in real-time knowledge. Here’s a breakdown of why this matters for key AI-driven sectors and industries:

RAG in Healthcare

For medical AI, accuracy is non-negotiable. A generative AI model trained on medical texts from 2023 will already be out of date by 2025. RAG addresses this by retrieving current research, treatment guidelines, and patient cases before generating a response or supporting a decision.

A recent study in npj Health Systems (2025) discusses how RAG-powered AI transforms healthcare by integrating real-time diagnostic data, drug interactions, and the latest clinical research, ensuring medical decisions are based on current information.

RAG in Finance

Financial markets change by the second, so static AI models are unreliable at best.

Banks and investment companies have adopted RAG-enhanced AI analysts that retrieve data from live market reports, earnings transcripts, and macroeconomic trends before generating responses or informing decisions.

RAG in eCommerce

Traditional retail recommendation engines rely on historical user behaviour, but RAG allows AI to make better suggestions by analysing real-time inventory, user reviews, and dynamic pricing data. A Forbes (2025) report revealed that a leading online retailer saw a 25% increase in customer engagement after implementing RAG-driven search and product recommendations.

Imagine searching for a laptop and getting personalised recommendations based on the latest reviews, real-time discounts, and stock availability. That’s the difference RAG is making in retail.

RAG in Enterprise AI

Enterprise RAG cuts through outdated knowledge bottlenecks by letting AI pull real-time information directly from company systems.

Because the information isn’t frozen in time, the AI can reflect policy updates as company rules change, follow the latest regulations, and keep product details aligned with current inventory and pricing.

Instead of repeating pre-written answers, RAG-equipped AI delivers responses that match the business as it is today, not months ago.

The Challenges: Where RAG Still Falls Short

For all its promise, RAG isn’t a perfect solution. Its reliance on retrieval introduces new technical, ethical, and logistical obstacles that AI teams are still working to overcome.

Data Quality & Source Reliability

RAG improves AI accuracy by pulling external data, but what happens when the information retrieved is incorrect, biased, or outdated?

A well-documented concern is “hallucination with citations,” in which AI confidently generates a response with a footnote, only for the cited source to turn out to be outdated or misleading. This is particularly dangerous in healthcare, legal, and financial applications, where incorrect information can have severe, lasting consequences.
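One simple mitigation is to screen retrieved documents before they reach the generator. The sketch below is illustrative only: the `RetrievedDoc` type, the `TRUSTED_SOURCES` allow-list, and the one-year freshness threshold are all assumptions, and real systems would need domain-specific rules for what counts as reliable.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class RetrievedDoc:
    text: str
    source: str
    published: date


# Hypothetical allow-list; in practice this would be curated per domain.
TRUSTED_SOURCES = {"clinical-guidelines", "internal-policy"}


def filter_reliable(
    docs: list[RetrievedDoc], today: date, max_age_days: int = 365
) -> list[RetrievedDoc]:
    """Keep only documents from trusted sources published recently enough."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        doc for doc in docs
        if doc.source in TRUSTED_SOURCES and doc.published >= cutoff
    ]


docs = [
    RetrievedDoc("Dosage guidance revised.", "clinical-guidelines", date(2025, 3, 10)),
    RetrievedDoc("Older dosage guidance.", "clinical-guidelines", date(2021, 1, 5)),
    RetrievedDoc("Forum post about dosage.", "anonymous-forum", date(2025, 5, 20)),
]
kept = filter_reliable(docs, today=date(2025, 6, 1))
print([doc.text for doc in kept])
```

Only the recent document from a trusted source survives the filter. This does not make the remaining content correct, but it narrows what the model can cite.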

Computational Cost

A standalone LLM answers from its pre-trained knowledge alone, which keeps responses fast. RAG, on the other hand, introduces extra retrieval steps, which increase compute demands. Running RAG at scale requires technologies such as:

- High-performance vector databases and similarity-search libraries (e.g., Pinecone, Weaviate, FAISS)

- Optimised retrieval pipelines for minimising latency

- Advanced GPU acceleration

While this cost is manageable for larger companies or those with skilled IT teams, smaller enterprises can struggle to scale their own RAG-based AI solutions efficiently.

Latency vs. Accuracy

Retrieval adds an extra step to the AI inference process, so responses take longer than those of purely generative models. This can be an issue in latency-sensitive environments such as customer support chatbots or financial trading bots.

Developers are now experimenting with hybrid RAG techniques that pre-cache relevant information or rank retrieved documents before feeding them into LLMs. But for now, there’s an inherent trade-off between speed and accuracy.
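As a rough illustration of caching and ranking, the sketch below memoises retrieval results with Python's standard `functools.lru_cache` and ranks documents by a simple term-overlap score. The corpus, the scoring function, and the cache size are assumptions for the example; production systems typically use a dedicated cache and a learned re-ranking model.

```python
import re
from functools import lru_cache

# Hypothetical support corpus; a tuple keeps it immutable.
CORPUS = (
    "To reset your password, open account settings.",
    "Invoices are emailed monthly.",
    "Password rules require twelve characters.",
)


def overlap_score(query: str, doc: str) -> int:
    """Count shared words; a stand-in for a real relevance model."""
    query_terms = set(re.findall(r"\w+", query.lower()))
    doc_terms = set(re.findall(r"\w+", doc.lower()))
    return len(query_terms & doc_terms)


@lru_cache(maxsize=256)
def cached_retrieve(query: str, k: int = 2) -> tuple[str, ...]:
    """Rank documents for a query; repeated queries are served from cache."""
    ranked = sorted(CORPUS, key=lambda doc: overlap_score(query, doc), reverse=True)
    return tuple(ranked[:k])


first = cached_retrieve("reset my password")
second = cached_retrieve("reset my password")  # identical call: cache hit
print(first)
print(cached_retrieve.cache_info())
```

The second call skips the ranking work entirely. The trade-off is staleness: cached results must be invalidated (for example with `cached_retrieve.cache_clear()`) whenever the underlying documents change.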

Privacy and Security Risks

RAG’s ability to pull from external sources raises serious data security and compliance concerns. If an AI assistant retrieves confidential company information or proprietary research, how can businesses ensure that data isn’t exposed to unintended users?

This is particularly critical for:

- Regulated industries (finance, healthcare, law) that must comply with data privacy laws (e.g., GDPR, HIPAA).

- Enterprise applications where internal knowledge bases contain sensitive information.

Some companies are deploying on-premise RAG systems to avoid external data leaks, but managing retrieval permissions remains a persistent challenge.
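One way to think about retrieval permissions is to filter documents by the user's access rights before any relevance matching happens, so restricted content never reaches the prompt. The sketch below assumes a hypothetical role-based model (`allowed_roles` on each document) and naive keyword matching; real deployments would enforce this in the data store or retrieval service itself.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    text: str
    allowed_roles: frozenset[str]  # hypothetical role-based access model


def retrieve_for_user(
    query: str, docs: list[Doc], user_roles: frozenset[str]
) -> list[str]:
    """Apply the permission filter first, then match on the visible subset."""
    visible = [doc for doc in docs if doc.allowed_roles & user_roles]
    query_terms = set(re.findall(r"\w+", query.lower()))
    return [
        doc.text
        for doc in visible
        if query_terms & set(re.findall(r"\w+", doc.text.lower()))
    ]


docs = [
    Doc("Salary bands for 2025 are under review.", frozenset({"hr"})),
    Doc("Office opening hours are 8am to 6pm.", frozenset({"hr", "staff"})),
]

staff_view = retrieve_for_user("salary bands", docs, frozenset({"staff"}))
hr_view = retrieve_for_user("salary bands", docs, frozenset({"hr"}))
print(staff_view)
print(hr_view)
```

The same query returns nothing for a general staff member and the salary document for an HR user, because filtering happens before retrieval rather than after generation.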

Despite these obstacles, RAG remains one of the most effective solutions for making AI more factual and reliable – and the next generation of RAG systems will only improve on its current limitations.

The Future of RAG Beyond 2025

As AI advances, RAG is evolving from simple text retrieval into multimodal, real-time, and autonomous knowledge integration. Key developments include:

- Multimodal Retrieval: Rather than focusing primarily on text, AI will retrieve images, videos, structured data, and live sensor inputs. For example, medical AI could analyse scans alongside patient records, financial AI could cross-reference market reports with real-time trading data, and industrial AI could integrate sensor readings for predictive maintenance.

- Real-Time Knowledge Graphs: Instead of relying on static databases, future RAG models will integrate with auto-updating knowledge graphs. This will allow legal AI to track real-time rulings, financial AI to adjust risk models based on market shifts, and customer support AI to instantly reflect product updates.

- Hybrid AI Architectures: Advanced systems will combine multiple AI techniques for smarter decision-making. Future models will blend pre-trained knowledge, fine-tuned domain adaptation, real-time retrieval, and reinforcement learning to create AI that doesn’t just generate responses but actively reasons and learns.

Collectively, these advancements will redefine RAG as more than a retrieval tool, enhancing AI’s accuracy, context, and decision-making as systems become more complex and multimodal.

RAG Is Essential to AI’s Future

RAG is undoubtedly a core AI technology with a long future ahead of it. Right now, its primary role is equipping AI with real-time, reliable information, but its potential goes far beyond that.

The next generation of AI will integrate multimodal retrieval, real-time knowledge graphs, and hybrid architectures, combining retrieval with reasoning and adaptability.

AI that can’t access dynamic, reliable information will struggle to stay relevant.

This is where Aya Data comes in. We help businesses implement cutting-edge RAG solutions, ensuring AI systems are accurate, scalable, and ready for the future.

Our services include:

- RAG System Design & Implementation: Building scalable retrieval pipelines that integrate with structured and unstructured data sources.

- Enterprise Knowledge Base Structuring: Organising internal documents, databases, and proprietary data for AI-driven retrieval.

- Vector Database Integration: Implementing optimised vector search for fast and relevant information retrieval.

- Custom AI & LLM Integration: Enhancing AI models with domain-specific knowledge retrieval for finance, healthcare, legal, and enterprise applications.

- Scalable Deployment & Optimisation: Ensuring RAG-powered AI runs efficiently, whether on cloud, on-premise, or hybrid infrastructure.

Our expertise helps businesses and organisations move beyond static AI models to build intelligent systems that retrieve, verify, and generate context-aware insights. Want to implement RAG for your business? Contact Aya Data to get started.