Audio transcription is the process of converting unstructured audio data, such as recordings of human speech, into structured data.

Artificial intelligence (AI) and machine learning (ML) algorithms require structured data to perform various tasks involving human speech, including speech recognition, sentiment analysis, and speaker identification. In short, audio transcription is fundamental for teaching computers to understand spoken language.

Until the mid-2010s, most audio transcription was done manually: you’d listen to an audio recording and type out what was said.

Today, computerized automatic speech recognition (ASR) models have streamlined the process of transcribing audio, enabling generations of AIs that understand spoken language. If you have an Alexa or equivalent, then you interact with ASR processes.

This article will explore audio transcription and its relationship with AI and data labeling.

Why is Audio Transcription Useful?

Audio transcription has many practical uses, including in technologies such as Alexa, Google Assistant and thousands of other AIs that use speech recognition.

It’s worth distinguishing two related speech technologies: speech-to-text (STT), which is transcription proper, and text-to-speech (TTS), its inverse.

STT converts verbal language, i.e., speech, into text, whereas TTS converts text back into speech. One is broadly analogous to listening (e.g., a machine listens to and understands your speech), and the other is broadly analogous to speaking (e.g., a machine speaks to you in natural language).

Here are some practical uses of audio transcription:

1: Accessibility

- Closed captions and subtitles: Transcriptions can be used to create closed captions for videos, making them accessible to deaf or hard-of-hearing individuals. They also serve as subtitles for those who want to watch videos in a different language or in noisy environments. The process of generating subtitles used to be manual, but is now largely automated.

- Transcripts for audio content: Audio content, such as podcasts and radio programs, can be made accessible to individuals with hearing impairments by providing written transcripts. The same can be said in reverse, to convert written content into audio for those with visual impairments.

2: Education and Training

- Lecture and seminar transcripts: Lectures and seminars can be recorded, automatically transcribed, and provided to students so they have a written copy. Written content is easier to search and revise from.

- Language learning: Language learners can use transcriptions to practice listening and reading skills. Having both audio and written formats of information is helpful in many educational situations.

3: Media and Journalism

- Interview transcripts: Journalists can transcribe interviews for later reference, to provide accurate quotes, etc.

- Documentaries and film production: Transcriptions aid in the editing process, as it’s easier to search for written text than speech. For example, producers can search their dialogue via keywords once transcribed.

4: Business and Legal

- Meeting and conference call transcriptions: Transcriptions of business meetings, conference calls, or webinars can be used for record-keeping, facilitating communication among team members, and creating actionable summaries.

- Legal transcriptions: Similarly, court proceedings and legal interviews often require accurate transcriptions for regulatory compliance, official records and case analysis.

5: Research and Academia

- Qualitative research: Researchers can use transcriptions to analyze interviews, focus groups, and recordings for social and thematic research.

- Speech and language research: Audio transcription is essential in studying speech patterns, linguistic phenomena, and the development of speech recognition technologies. This is especially true for building reliable multilingual speech recognition technologies.

6: Medical and Healthcare

- Medical transcription: Accurate transcriptions of doctors’ notes, patient interviews, and medical procedures are critical for maintaining comprehensive and organized patient records. Audio transcription is used extensively in healthcare.

- Psychological assessments and therapy sessions: Transcriptions of therapy sessions and psychological evaluations can help clinicians track progress.

7: Search Engine Optimization (SEO) and Marketing

- Video SEO: Adding transcriptions to video content is a well-known strategy for improving SEO and search engine visibility, making content more discoverable and increasing its potential reach.

- Content repurposing: Transcriptions can be swiftly repurposed into various formats, such as blog posts, social media content, or eBooks, expanding the content’s reach and impact.

8: Technology

- ASR: As mentioned, automatic speech recognition (ASR) enables a whole host of new technologies that can recognize human voices. These range from consumer devices like Alexa to smart home devices and medical devices designed for those with disabilities.

- Robotics: Building robots that move, think and speak like humans is the magnum opus of AI. Audio transcription is vital for teaching AIs about speech and other organic and environmental noises.

So, how do you actually go about transcribing audio?

Tools and Technologies for Audio Transcription

1: Manual Transcription

Manual transcription involves human transcribers listening to audio recordings and typing the content. Until the mid-2010s, manual transcription was the most reliable way to transcribe spoken language into text. It was a massive industry.

While manual transcription can produce highly accurate results, it’s time-consuming and expensive, making it impractical for large-scale projects. The demand for transcribed audio data to build machine learning models eventually outgrew the capacity of manual transcription services.

2: Automatic Speech Recognition (ASR)

ASR technology employs machine learning algorithms to transcribe audio data automatically.

ASR systems have made significant progress in recent years, with models like DeepSpeech and Wav2Vec offering impressive out-of-the-box performance. In addition, there are now many open-source Python tools and libraries for ASR.

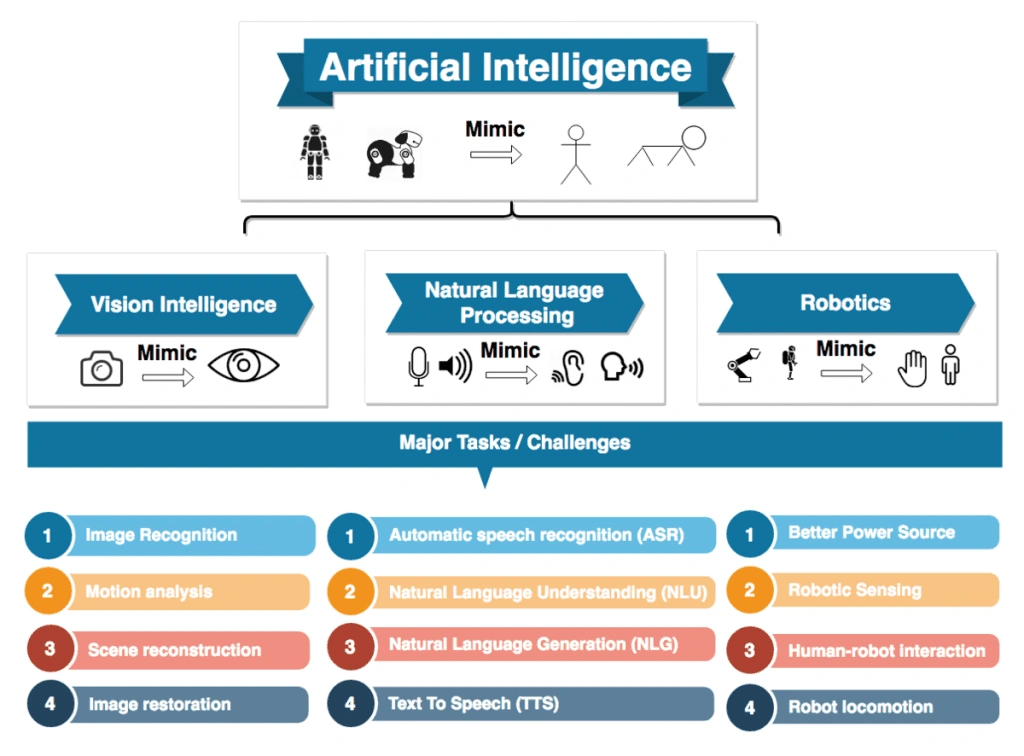

Since it interacts with natural language, ASR falls into the natural language processing (NLP) subsection of AI, as below.

ASR falls into the NLP umbrella of AI

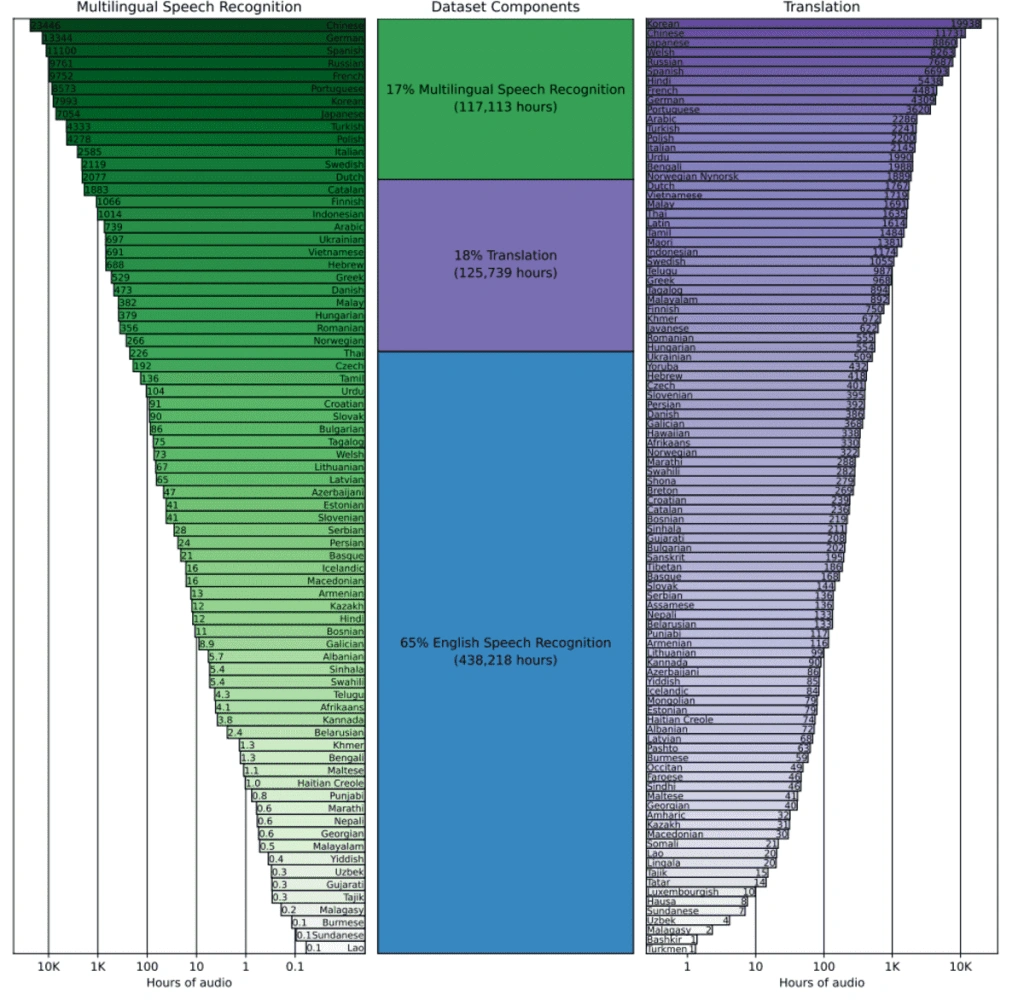

As ASR has developed, most of the main players in AI, such as Google, Amazon and OpenAI, have staked their claims. OpenAI’s Whisper, for example, was trained on 680,000 hours of multilingual and multitask supervised data.
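As a rough illustration of how little code an off-the-shelf ASR model needs, here is a minimal sketch using the open-source `openai-whisper` Python package. It assumes the package and `ffmpeg` are installed. The helper names `format_segments` and `transcribe_file` are made up for this example and are not part of the library.

```python
from typing import Dict, List


def format_segments(segments: List[Dict]) -> List[str]:
    """Render timestamped transcript segments as readable lines."""
    return [
        f"[{seg['start']:.2f}s - {seg['end']:.2f}s] {seg['text'].strip()}"
        for seg in segments
    ]


def transcribe_file(path: str, model_name: str = "base") -> List[str]:
    """Transcribe an audio file with Whisper and return timestamped lines."""
    # Third-party dependency, imported lazily so the helper above works
    # without it: pip install openai-whisper (ffmpeg must be on the PATH).
    import whisper

    model = whisper.load_model(model_name)   # downloads weights on first use
    result = model.transcribe(path)          # dict with "text" and "segments"
    return format_segments(result["segments"])
```

Calling `transcribe_file("interview.mp3")` (a hypothetical file name) would return one line per recognized segment. Larger model names generally trade speed for accuracy.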

While ASR has evolved to enable a whole host of advanced technologies, including virtual assistants such as Alexa, there remain significant challenges in dealing with background noise, accents, slang, localized vernacular, and specialized vocabulary. Moreover, these models tend to perform best for native English speakers and remain more limited for other languages.

There are concerns that speech recognition technologies are biased, prejudiced, and inequitable.

Read our article Speech Recognition: Opportunities and Challenges.

How ASR Systems Are Built

ASR primarily sits within the supervised learning branch of machine learning. That means models are trained on labeled examples, which teach the model how to behave when exposed to new, unseen data. Read our Ultimate Guide for a detailed account of supervised machine learning.

To train ASR systems, vast amounts of labeled audio data are required. For example, Whisper was trained on 680,000 hours of audio, or roughly 77 years of continuous listening. Most of that data is English, though the training set also covers a wide range of other languages.
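The hours-to-years figure is simple arithmetic. The short sketch below reproduces it, assuming a 365-day year and ignoring leap days:

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365  # rough estimate; leap years are ignored


def hours_to_years(hours: float) -> float:
    """Convert a duration in hours into years of continuous playback."""
    return hours / (HOURS_PER_DAY * DAYS_PER_YEAR)


whisper_hours = 680_000  # training-set size quoted in the text
print(f"{hours_to_years(whisper_hours):.1f} years")  # prints "77.6 years"
```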

The training process generally involves the following steps:

- Data Collection: A diverse dataset of audio samples is collected, covering a wide range of speakers, accents, and background noise conditions. You might retrieve audio from internal sources (e.g. a call center’s database) or external/public sources, depending on the model’s purpose. For example, the model may only need to recognize a small set of words in some situations.

- Data Preprocessing: Audio samples are segmented, cleaned, and normalized to ensure quality and simplify labeling.

- Data Labeling: Accurate transcriptions are created for each audio sample, serving as the “ground truth” for the ASR system to learn from. It’s essential for these “gold sets” to be as high-quality as possible, which is what specialist data teams like Aya Data help guarantee.

- Model Training: ASR models, often based on deep learning architectures like Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs), are trained on the labeled dataset to learn the mapping functions between audio features and text transcriptions. This involves supervised machine learning.

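- Model Evaluation and Fine-tuning: Once training is complete, the ASR model is evaluated on a separate, held-out set of labeled audio data. Its performance is assessed using metrics like Word Error Rate (WER), the proportion of words the model substitutes, deletes, or inserts relative to the reference transcript. Finally, the model is fine-tuned based on those results.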
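To make the evaluation step concrete, here is a minimal sketch of Word Error Rate. It computes the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words. Lowercasing and whitespace splitting are simplifying assumptions. Real evaluations usually apply a more careful text normalization first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words (classic Levenshtein table,
    # kept one row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One deleted word ("the") and one substituted word ("lights" -> "light")
# out of five reference words.
print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))  # 0.4
```

Note that WER can exceed 1.0 when the hypothesis contains many inserted words.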

Example of audio annotation

Competent data labeling plays a crucial role in training ASR systems, as high-quality transcriptions enable the model to learn more effectively and produce considerably more accurate transcriptions. Moreover, human-in-the-loop data labeling teams can pick up on issues in the training data and flag them prior to training.

The Role of Data Labeling in Speech Recognition

Data labeling is a critical aspect of training speech recognition algorithms. Both the quality of the data and the quality of the labels directly affect the ASR system’s performance.

Some key points to consider when labeling data for speech recognition include:

- Accuracy: Precise transcriptions are essential for training reliable ASR models. Errors in the ground truth labels can lead to inaccuracies in the trained model’s predictions.

- Consistency: Maintaining consistent labeling standards across the entire dataset is crucial for minimizing inconsistencies and potential biases in the machine learning model.

- Granularity: Depending on the specific application, it might be necessary to include additional information in the labeled data, such as speaker identification, emotion, or prosody. Not all models require these labels.

- Quality Control: Quality control ensures the dataset’s consistency and accuracy. This may involve spot-checking transcriptions and using automated tools to identify errors.
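The points above can be sketched in code. The example below shows one possible shape for a labeled clip (with optional speaker and emotion fields for extra granularity), a normalization function for consistent transcripts, and a simple automated spot-check. The field names and the words-per-second thresholds are illustrative assumptions, not an established standard.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LabeledClip:
    clip_id: str
    duration_s: float
    transcript: str
    speaker_id: Optional[str] = None  # optional granularity labels
    emotion: Optional[str] = None


def normalize_transcript(text: str) -> str:
    """Lowercase, drop punctuation (keeping apostrophes), collapse spaces."""
    text = re.sub(r"[^\w\s']", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def flag_for_review(clip: LabeledClip,
                    min_wps: float = 0.5,
                    max_wps: float = 5.0) -> List[str]:
    """Return a list of issues that a human reviewer should look at."""
    words = normalize_transcript(clip.transcript).split()
    if not words:
        return ["empty transcript"]
    if clip.duration_s <= 0:
        return ["non-positive duration"]
    words_per_second = len(words) / clip.duration_s
    if words_per_second < min_wps:
        return ["too few words for clip length"]
    if words_per_second > max_wps:
        return ["too many words for clip length"]
    return []
```

A heuristic like this only catches gross mismatches between audio length and transcript length. It complements, rather than replaces, human spot-checking.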

By prioritizing accurate and consistent data labeling, data scientists have a much better chance of training reliable ASR systems. While unsupervised techniques and reinforcement learning have both been applied to building ASR systems, supervised machine learning is the staple technique, thus necessitating data labeling.

Algorithms and Models in ASR

Speech recognition algorithms have evolved, and many of the latest models are built using neural network architectures and techniques.

Here’s a technical overview of modern ASR models.

1. Deep Neural Networks (DNNs)

Deep Neural Networks (DNNs) are feedforward networks composed of multiple layers of interconnected artificial neurons.

For ASR, these networks can learn complex, non-linear relationships between input features and output labels, which is crucial when dealing with enormous, complex datasets.
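As a toy illustration of a feedforward pass, the sketch below chains fully connected layers with a ReLU non-linearity between them. The weights are hand-picked for readability rather than learned, and a real ASR network would be far larger and built with a deep learning framework.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(values: Vector) -> Vector:
    """Non-linearity: negative activations are clamped to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: each weight row produces one output neuron."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(features: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Pass features through every layer, applying ReLU between layers."""
    activation = features
    for index, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if index < len(layers) - 1:  # no ReLU on the final output layer
            activation = relu(activation)
    return activation


layers = [
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),  # hidden layer (2 neurons)
    ([[2.0, 3.0]], [0.5]),                   # output layer (1 neuron)
]
print(forward([1.0, -2.0], layers))  # [2.5]
```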

2. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a specialized type of DNN that exploit the local structure of the input data, making them well-suited for tasks involving images or time-series data.
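The "local structure" idea can be shown with a one-dimensional convolution: a small kernel slides along the signal and responds only to nearby samples. This is a minimal sketch with a hand-written kernel. In a trained CNN the kernel values are learned, and the input is typically a spectrogram rather than a raw list of numbers.

```python
from typing import List


def conv1d(signal: List[float], kernel: List[float]) -> List[float]:
    """Slide the kernel over the signal with no padding ("valid" mode)."""
    width = len(kernel)
    if width == 0 or width > len(signal):
        raise ValueError("kernel must be non-empty and no longer than the signal")
    return [
        sum(k * s for k, s in zip(kernel, signal[start:start + width]))
        for start in range(len(signal) - width + 1)
    ]


# A two-tap difference kernel responds where the signal rises or falls.
signal = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0]
print(conv1d(signal, [-1.0, 1.0]))  # [0.0, 1.0, 0.0, -1.0, 0.0]
```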

3. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed to model sequential data, making them ideal for speech recognition tasks.

One of the most popular RNN architectures used in ASR is the Long Short-Term Memory (LSTM) network.
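To show what "modeling sequential data" means in practice, here is a minimal sketch of a plain (Elman-style) recurrent step, where each new hidden state depends on the current input frame and the previous hidden state. This is deliberately simpler than an LSTM, which adds gating on top of the same idea. The weights are arbitrary illustrative values.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def rnn_step(x: Vector, h_prev: Vector,
             w_xh: Matrix, w_hh: Matrix, b_h: Vector) -> Vector:
    """Compute h_t = tanh(W_xh x_t + W_hh h_(t-1) + b_h)."""
    h_new = []
    for i in range(len(h_prev)):
        total = b_h[i]
        total += sum(w * xi for w, xi in zip(w_xh[i], x))
        total += sum(w * hj for w, hj in zip(w_hh[i], h_prev))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(frames: List[Vector],
            w_xh: Matrix, w_hh: Matrix, b_h: Vector) -> List[Vector]:
    """Feed frames in order, carrying the hidden state between steps."""
    h = [0.0] * len(b_h)
    states = []
    for x in frames:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states


# One input feature, one hidden unit. The second frame is silent (0.0),
# yet the hidden state stays non-zero because it remembers the first frame.
states = run_rnn([[1.0], [0.0]], w_xh=[[1.0]], w_hh=[[0.5]], b_h=[0.0])
```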

4. Transformer Models

Transformer models have shown remarkable success in various NLP tasks, including ASR.

These models rely on the self-attention mechanism, which allows them to capture dependencies across input sequences without relying on recurrent connections. OpenAI’s Whisper is a transformer model.
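The core of self-attention can be sketched in a few lines. Each query is compared with every key, the scaled scores are turned into weights with a softmax, and the output is the weighted average of the values. This sketch omits the learned projection matrices, multiple heads, and positional information that a full transformer uses.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries: List[Vector], keys: List[Vector],
                   values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention over a whole sequence at once."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs
```

Because every position attends to every other position directly, no recurrent connection is needed to relate distant parts of the sequence.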

Audio Transcription Example: Amazon Alexa

There are few ASR technologies as influential as Amazon Alexa, but how does it actually work?

As you might imagine, technologies like Alexa involve various machine learning algorithms and deep learning architectures. Some of the key technologies and techniques used in the Alexa system include:

- Automatic Speech Recognition (ASR): ASR converts the user’s spoken words into text. Alexa uses a combination of deep learning techniques, such as Deep Neural Networks (DNNs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs, particularly LSTMs), to build its ASR system. Voice assistants like Alexa need to understand a huge range of vocabulary and work in multiple languages. As of 2023, Amazon Alexa speaks English, Spanish, French, German, Italian, Hindi, Japanese, and Portuguese. It also handles regional variants, including Spanish as spoken in Spain, Mexico, and the United States, and French as spoken in France and Canada.

- Natural Language Understanding (NLU): NLU, a subfield of NLP, is responsible for extracting meaning and intent from the text generated by the ASR system. Alexa employs deep learning models to understand the context of the user’s query, extract relevant information, and identify the user’s intent.

- Dialogue Management: Dialogue management maintains the flow of conversation between the user and Alexa. This uses reinforcement learning and advanced sequence-to-sequence models.

- Text-to-Speech Synthesis (TTS): Finally, TTS converts Alexa’s response into natural-sounding speech that the user can understand.
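The four stages above form a pipeline, which the toy sketch below wires together. It is emphatically not Amazon's implementation: every function is a hypothetical stand-in (the "ASR" step simply pretends the audio bytes already contain the spoken words as text, and the "NLU" step uses keyword rules instead of a learned model). The point is only to show how the output of each stage feeds the next.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Intent:
    name: str
    slots: Dict[str, str] = field(default_factory=dict)


def asr(audio: bytes) -> str:
    """Stand-in for speech recognition (pretends audio is UTF-8 text)."""
    return audio.decode("utf-8").lower().strip()


def nlu(text: str) -> Intent:
    """Stand-in for intent extraction, using simple keyword rules."""
    if text.startswith("play "):
        return Intent("play_music", {"query": text[len("play "):]})
    if "weather" in text:
        return Intent("get_weather")
    return Intent("unknown")


def dialogue_manager(intent: Intent) -> str:
    """Decide how to respond to the recognized intent."""
    if intent.name == "play_music":
        return f"Playing {intent.slots['query']}."
    if intent.name == "get_weather":
        return "I would look up the forecast here."
    return "Sorry, I did not understand that."


def tts(reply: str) -> bytes:
    """Stand-in for speech synthesis (returns the reply as bytes)."""
    return reply.encode("utf-8")


def handle_request(audio: bytes) -> bytes:
    """Run the full pipeline: ASR -> NLU -> dialogue management -> TTS."""
    return tts(dialogue_manager(nlu(asr(audio))))


print(handle_request(b"Play jazz"))  # b'Playing jazz.'
```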

Summary: What Is Audio Transcription and How Does It Relate to Data Labeling?

Audio transcription is the process of converting spoken language in audio or video recordings into written text. It plays a vital role in various industries, including those we interact with on a near-daily basis.

Accurate labeled transcriptions provide the necessary information for developing and improving AI-driven speech recognition systems. Only then can we create more accurate and efficient AI models, improve accessibility, and optimize audio content analysis across numerous industries and sectors without bias or prejudice.

To launch your next audio transcription project, contact Aya Data for your data labeling needs.

Audio Transcription FAQ

What is audio data labeling?

Audio data labeling involves annotating audio files with relevant information, such as transcriptions, speaker identification, or other metadata. Labeled audio data enables the supervised training of machine learning models.

What is the best way to transcribe audio to text?

The best way to transcribe audio to text depends on the specific requirements, budget, and required accuracy. Options include manual transcription, where a human transcriber listens to the audio and types out the content, or automated transcription, where AI transcription services convert spoken language into text using speech recognition algorithms.

What are some of the different types of audio transcription, and when are they used?

There are three broad approaches. The first is verbatim transcription, which captures every spoken word, including filler words (e.g., “um,” “uh”), false starts, and repetitions. This is commonly used in legal settings, research, and psychological assessments.

In the second type, edited transcription, filler words, stutters, and repetitions are omitted, providing a clean and easy-to-read transcript.

In the third type, intelligent verbatim transcription, the transcript is cleaned up further: grammar and syntax are refined to improve readability.
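The difference between a verbatim and a cleaned-up transcript can be sketched with a couple of regular expressions. The example below strips a small, illustrative list of filler words and collapses immediately repeated words. The filler list is an assumption, and a rule this simple will also collapse legitimate repetitions (such as "that that"), so it is a starting point rather than a finished tool.

```python
import re

FILLERS = ("um", "uh", "er", "erm")  # illustrative, not exhaustive

# A filler word, plus any comma/period and whitespace that follows it.
_FILLER_RE = re.compile(
    r"\b(?:" + "|".join(FILLERS) + r")\b[,.]?\s*", re.IGNORECASE
)
# The same word repeated one or more times in a row ("I I think").
_REPEAT_RE = re.compile(r"\b(\w+)(?:\s+\1\b)+", re.IGNORECASE)


def clean_verbatim(text: str) -> str:
    """Remove filler words and stuttered repetitions from a transcript."""
    text = _FILLER_RE.sub("", text)
    text = _REPEAT_RE.sub(r"\1", text)
    return re.sub(r"\s+", " ", text).strip()


verbatim = "Um, I I think, uh, we should go"
print(clean_verbatim(verbatim))  # I think, we should go
```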