Named entity recognition (NER) is a vital subfield of natural language processing (NLP).

In short, NER aims to identify and extract named entities, such as people, organizations, locations, and other specific details, from unstructured text data.

NER is widely used to retrieve specific information from text at scale, for question-answering systems, sentiment analysis, machine translation, and other domains within NLP.

This article will discuss the main concepts and techniques used in NER, including the different types of named entities, approaches to NER, and how it relates to machine learning (ML).

Types of Named Entities

Named entities are words or phrases in a text that refer to specific people, places, organizations, products, dates, times, and other named objects.

NER involves recognizing and classifying these entities into their respective categories.

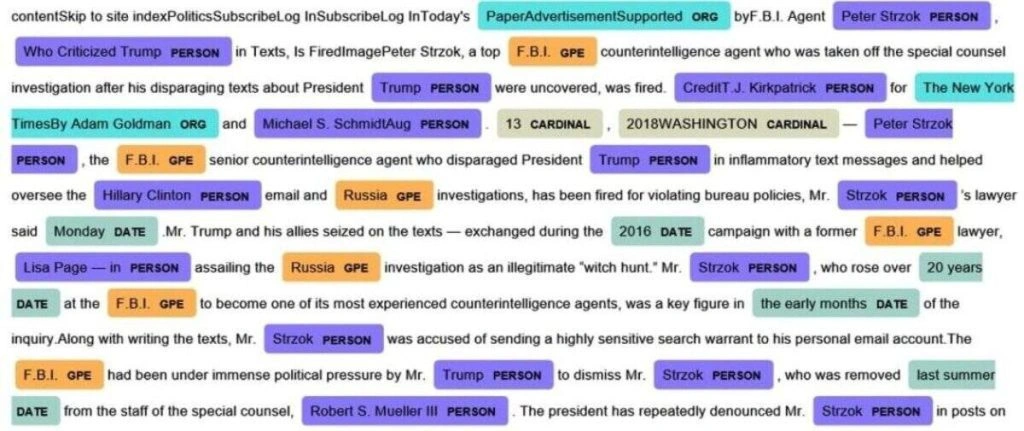

Example of NER labels

It’s a relatively simple concept, but entities can be ambiguous, especially in complex texts, texts transcribed from audio, or historical texts written hundreds or even thousands of years ago.

Plus, it’d be nigh-impossible to manually extract this sort of information from huge volumes of data. ML has transformed the methods available for extracting named entities from vast quantities of unstructured text data.

Here are the core types of named entities:

Person

Person entities refer to the names of individuals, such as John Smith, Jane Doe, or Barack Obama.

Thanks to capitalization rules and the context surrounding them, person names are often among the easier entities to classify, although ambiguous or unusual names can still cause errors.

Organization

Organization entities refer to the names of companies, institutions, or other formal groups, such as Microsoft, Harvard University, the WHO, or the United Nations.

Location

Location entities refer to the names of places, including cities, countries, regions, and landmarks, such as New York, Lebanon, the Sahara Desert, or the Arc de Triomphe.

Date and Time

Date and time entities refer to specific dates, times, or temporal expressions, such as January 1st, 2022, 10:30 AM, or simply “yesterday.”

Product

Product entities include the names of goods or services, such as iPhone, Coca-Cola, or Amazon Prime.

Miscellaneous

Miscellaneous entities refer to other named objects that do not belong to the above categories, including abbreviations, acronyms, and symbols, such as NASA, DNA, or $.

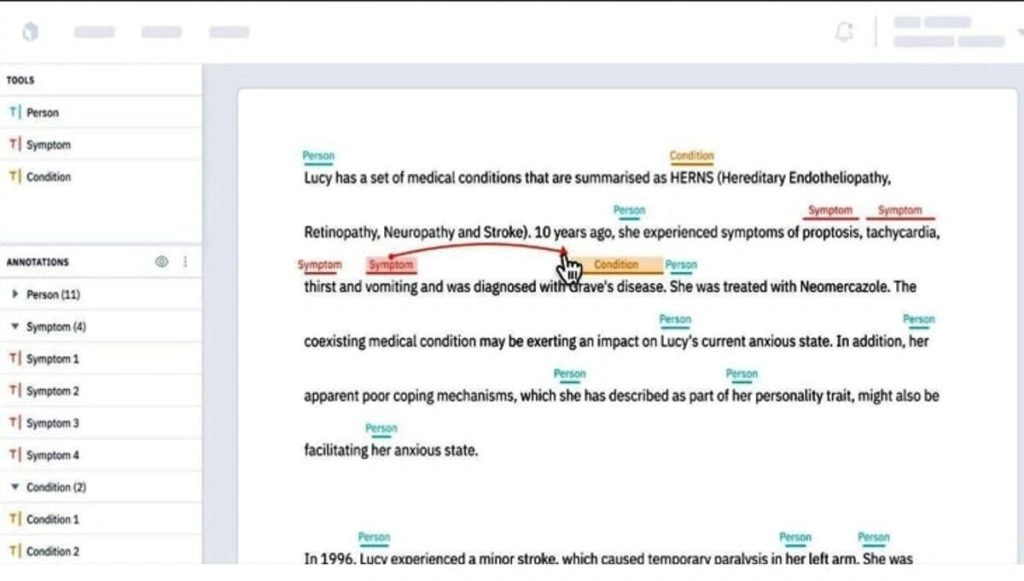

If the workflow or application demands it, practically anything can be pre-defined as an entity, such as the symptoms of illnesses, chemicals, components, scientific or academic terminology, specific objects, dosages, etc.

Approaches to Named Entity Recognition

There are three main ways of extracting entities from text.

1: Rule-Based

Rule-based NER involves creating a set of predefined rules and patterns to extract named entities from text data.

This approach relies on linguistic rules and regular expressions to identify specific patterns in the text that match the predefined categories.

For example, a rule-based NER system could flag any word starting with a capital letter as a candidate entity. That’s relatively straightforward to implement, although it also catches the first word of every sentence.

The advantage of rule-based NER is that it is relatively simple to implement and works well for well-structured texts with few ambiguous entities. However, it’s somewhat limited for large quantities of complex, natural text data.
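To make the rule-based idea concrete, here is a minimal sketch using Python’s built-in regular expressions. The three rules and their label names (DATE, TIME, NAME) are hypothetical, chosen only for illustration; a real system would need far more patterns.

```python
import re

MONTHS = (
    "January|February|March|April|May|June|July|"
    "August|September|October|November|December"
)

# Hypothetical hand-written rules: each pairs a label with a pattern.
# Earlier rules take priority when two matches overlap.
RULES = [
    ("DATE", re.compile(rf"\b(?:{MONTHS}) \d{{1,2}}(?:st|nd|rd|th)?, \d{{4}}\b")),
    ("TIME", re.compile(r"\b\d{1,2}:\d{2} ?(?:AM|PM)\b")),
    # Two or more consecutive capitalized words, e.g. "Jane Doe".
    ("NAME", re.compile(r"\b[A-Z][a-z]+(?: [A-Z][a-z]+)+\b")),
]


def rule_based_ner(text):
    """Return (matched_text, label, start_offset) tuples in reading order."""
    found = []
    taken = []  # character ranges already claimed by an earlier rule
    for label, pattern in RULES:
        for match in pattern.finditer(text):
            overlaps = any(
                match.start() < end and start < match.end()
                for start, end in taken
            )
            if overlaps:
                continue
            taken.append((match.start(), match.end()))
            found.append((match.group(), label, match.start()))
    return sorted(found, key=lambda item: item[2])


sample = "Jane Doe met John Smith on January 1st, 2022 at 10:30 AM."
entities = rule_based_ner(sample)
```

The capitalization rule also shows the limitation described above: `rule_based_ner("New York is busy.")` tags “New York” as a NAME, because the pattern alone cannot tell a person from a place.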

2: Statistical Machine Learning

Statistical NER uses machine learning algorithms to learn from labeled data and predict the named entities in unseen text data.

This approach relies on statistical models, such as:

- Hidden Markov Models (HMM)

- Maximum Entropy Models (MEM)

- Conditional Random Fields (CRF)

Statistical NER can handle complex patterns and variations in text but requires a significant amount of labeled data and feature engineering to train the models.

In addition, these techniques are relatively domain-specific, i.e., models may not be immediately transferable between different contexts.
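The feature engineering mentioned above usually means turning each token into a set of hand-crafted signals that a model such as a CRF can learn from. The sketch below shows one plausible feature set; the feature names are assumptions made for illustration, not a standard.

```python
def token_features(tokens, i):
    """Build hand-crafted features for the token at position i."""
    word = tokens[i]
    return {
        "lower": word.lower(),
        "is_capitalized": word[:1].isupper(),
        "is_all_caps": word.isupper(),
        "has_digit": any(ch.isdigit() for ch in word),
        "suffix3": word[-3:].lower(),
        # Neighbouring words give the model local context.
        "prev_lower": tokens[i - 1].lower() if i > 0 else "<START>",
        "next_lower": tokens[i + 1].lower() if i < len(tokens) - 1 else "<END>",
    }


def sentence_features(tokens):
    """Return one feature dictionary per token in the sentence."""
    return [token_features(tokens, i) for i in range(len(tokens))]


tokens = ["Microsoft", "hired", "Jane", "Doe", "in", "2022"]
feats = sentence_features(tokens)
```

A statistical model would be trained on many such feature dictionaries paired with human-assigned labels, which is why this approach depends so heavily on labeled data.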

3: Deep Learning

Deep learning NER involves using deep neural networks to learn from labeled data and predict the named entities in unseen text data.

This approach typically uses Recurrent Neural Networks (RNN), Convolutional Neural Networks (CNN), or Transformers to encode the text and extract relevant features for the task.

Deep learning models have shown state-of-the-art performance in various NLP tasks, including NER, due to their ability to capture complex relationships and patterns in the data.

The advantage of deep learning NER is that it can handle complex patterns and variations in the text and can learn from unstructured data without explicit feature engineering. This enables much greater general performance compared to classical ML techniques.
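As a rough illustration, a pre-trained Transformer can be applied with the Hugging Face `transformers` library. This is a sketch under assumptions: the library must be installed, a model is downloaded on first use, and the 0.8 confidence threshold is an arbitrary value chosen for the example.

```python
def keep_confident(predictions, min_score=0.8):
    """Filter grouped predictions and return (text, label) pairs."""
    return [
        (p["word"], p["entity_group"])
        for p in predictions
        if p["score"] >= min_score
    ]


def transformer_ner(text, model_name=None, min_score=0.8):
    """Run a pre-trained token-classification model over the text."""
    # Imported lazily so keep_confident works without transformers installed.
    from transformers import pipeline

    tagger = pipeline(
        "token-classification",
        model=model_name,  # None lets the library choose its default model
        aggregation_strategy="simple",  # merge word pieces into whole entities
    )
    return keep_confident(tagger(text), min_score)
```

No features are written by hand here; the network learns its own representation of the text, which is the main contrast with the statistical approach.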

Deep learning supports highly sophisticated models like GPT-3 and GPT-4 – which need little introduction.

However, like any form of deep learning, this requires a significant amount of labeled data and is computationally expensive.

Plus, models can still inherit biases and prejudices from their training data, and this remains a persistent issue.

Uses and Applications of NER

NER is integral to building advanced NLP models that understand named entities and the relationships between them.

Here are some of the primary uses of NER:

Information Extraction

NER extracts relevant information from unstructured text data, such as articles, emails, social media posts, web pages, or virtually anything else.

With NER, it’s possible to automatically identify people, organizations, locations, dates, and times. This has a myriad of uses, from non-profit academic research to competitor intelligence for marketing purposes.

For example, this study published in the Nature Public Health Emergency Collection highlights how NER assists researchers in analyzing vast quantities of papers and medical records at scale.

Practically any informational NLP task that involves the extraction and analysis of named entities relies on consistent and accurate NER. This has revolutionized academic research, making the process of analyzing vast quantities of papers considerably more efficient.

Chatbots and Virtual Assistants

NER can be used in question-answering systems to extract the relevant information required to answer a specific question.

For example, given the question, “Who is the CEO of Apple?” NER can identify “Apple” as an organization and “CEO” as a title, which is vital for retrieving the answer.

If a user asks a virtual assistant to book a flight, NER can identify the relevant named entities, such as departure location, destination location, and travel dates, which can help in booking the correct flight.
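The flight-booking case can be sketched as a small slot-filling step that runs after NER. The entity tuples below are written by hand to stand in for the output of an NER model, and the cue words “from” and “to” are a simplifying assumption.

```python
def fill_flight_slots(text, entities):
    """Map (entity_text, label, start_offset) tuples to booking slots."""
    slots = {"origin": None, "destination": None, "date": None}
    for ent_text, label, start in entities:
        # Look at the word immediately before the entity for a cue.
        words_before = text[:start].split()
        cue = words_before[-1].lower() if words_before else ""
        if label == "LOC" and cue == "from":
            slots["origin"] = ent_text
        elif label == "LOC" and cue == "to":
            slots["destination"] = ent_text
        elif label == "DATE" and slots["date"] is None:
            slots["date"] = ent_text
    return slots


request = "Book me a flight from Accra to Paris on 3 May"
# Hand-written stand-in for what an NER model might return.
entities = [
    ("Accra", "LOC", request.index("Accra")),
    ("Paris", "LOC", request.index("Paris")),
    ("3 May", "DATE", request.index("3 May")),
]
slots = fill_flight_slots(request, entities)
```

The NER step finds the entities; a separate step like this one decides which role each entity plays in the request.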

This is vital for both chatbots and large language models such as GPT.

Sentiment Analysis

NER can support sentiment analysis by linking the sentiment expressed in a text to the specific entities it mentions.

For example, suppose a social media post mentions a product or a brand.

In that case, NER identifies the named entity, and a sentiment model determines whether the post’s attitude toward that entity is positive, negative, or neutral.

The brand can perform sentiment analysis on vast quantities of unstructured text to accurately map attitudes towards their brand and product. This also assists in competitor intelligence, as brands can analyze sentiment toward competitor products to unlock strategic advantages.
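A toy version of entity-level sentiment might look like the following. The word lists are tiny, hypothetical lexicons, and the entity names are assumed to come from an earlier NER step; production systems would use a trained sentiment model instead.

```python
# Hypothetical miniature sentiment lexicons, for illustration only.
POSITIVE = {"love", "great", "excellent", "reliable"}
NEGATIVE = {"hate", "terrible", "slow", "broken"}


def entity_sentiment(sentences, entity_names):
    """Sum a crude polarity score for each entity over the sentences that mention it."""
    scores = {name: 0 for name in entity_names}
    for sentence in sentences:
        words = {w.strip(".,!?").lower() for w in sentence.split()}
        polarity = len(words & POSITIVE) - len(words & NEGATIVE)
        for name in entity_names:
            if name.lower() in sentence.lower():
                scores[name] += polarity
    return scores


posts = [
    "I love my new iPhone!",
    "The Coca-Cola vending machine was broken and slow.",
]
scores = entity_sentiment(posts, ["iPhone", "Coca-Cola"])
```

A positive total suggests favorable mentions and a negative total suggests unfavorable ones, which is the kind of signal a brand could aggregate across many posts.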

Machine Translation

NER can be used in machine translation to improve translation accuracy by identifying named entities and translating them correctly.

For example, if a sentence contains a named entity, such as a person or an organization, NER flags it so that the translation system can carry the name over correctly instead of translating it word for word.
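One simple way to protect names during translation is to swap them for placeholders, translate, and then swap them back. This is a sketch of that idea only; the placeholder format is an assumption, and the entity list is assumed to come from an NER step.

```python
def mask_entities(text, entities):
    """Replace each entity string with a placeholder token."""
    mapping = {}
    for i, entity in enumerate(entities):
        placeholder = f"__ENT{i}__"
        mapping[placeholder] = entity
        text = text.replace(entity, placeholder)
    return text, mapping


def unmask_entities(text, mapping):
    """Restore the original entity strings after translation."""
    for placeholder, entity in mapping.items():
        text = text.replace(placeholder, entity)
    return text


sentence = "Jane Doe works at Harvard University"
masked, mapping = mask_entities(sentence, ["Jane Doe", "Harvard University"])
# masked is now "__ENT0__ works at __ENT1__"; a translation system would
# process this string, after which unmask_entities restores the names.
```

This only works if the translation system leaves the placeholders untouched, which would need to be verified for whichever system is used.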

Intelligence and Fraud Detection

Intelligence agencies use NER to trawl through colossal amounts of open data to identify the names of threat actors and associated organizations.

NER can be used in fraud detection to identify named entities associated with fraudulent activities, such as names of fraudsters, fake organizations, and suspicious locations.

Linking entities to other topics and uncovering sentiments enables open-source intelligence at scale.

This 2016 book, Automating Open Source Intelligence, cites NER as a vital tool for uncovering intelligence and security insights in internet data.

Challenges of NER

Like all AI systems, NLP models used for NER are prone to inaccuracy, especially when dealing with edge cases or highly specialized data.

Here are some of the principal challenges associated with NER:

1: Ambiguity: Named entities can be ambiguous, meaning they can have multiple meanings or belong to multiple categories. For example, the word “Apple” can refer to the fruit or technology company, depending on the context. This ambiguity makes it difficult for NER models to classify entities without sufficient contextual information. This is exacerbated by thin, short-hand text, which may not carry enough context, such as a tweet or social media status.

2: Domain specificity: NER models trained on general-domain data may perform poorly when applied to domain-specific or technical text. Domain-specific entities, jargon, or terminologies can differ significantly from those found in general text. NER for highly specific applications, e.g., medical analysis, will likely need to be tuned and optimized.

3: Language variation: NER models can struggle with recognizing entities in languages or dialects that are substantially different from the training data. Variations in spelling, grammar, or vocabulary can lead to inaccuracies in entity recognition. Language localization (e.g., differences between different versions of the same language, such as US English and UK English) also complicates NER and other NLP tasks.

4: Cultural and linguistic differences: Identifying entities depends on the entity itself and the surrounding text, i.e., the context. This differs between languages, e.g., some languages have distinctive syntactic structures. NER models tend to perform best in English, as this is where most of the training data is available.

5: Rare or unseen entities: Models might not correctly recognize entities not present or underrepresented in the training data. Since machine learning models rely on patterns and contextual cues learned during training, recognizing rare or unseen entities can be challenging, leading to lower accuracy for such entities.

6: Long-range dependencies: Some named entities may have long-range dependencies within the text. For example, an entity may only be identifiable using text mentioned in prior passages. Before sequence models such as recurrent neural networks (RNNs), it was very difficult to identify entities whose supporting context didn’t appear within the same short sequence of words as the entity itself.

Worked Example of NER

Suppose we have this statement:

“Elon Musk is the CEO of SpaceX, which was founded in 2002 and is headquartered in Hawthorne, California.”

To extract named entities from this text using ML, you could use a pre-trained NER model, such as one of the pipelines that ship with the spaCy library. These pipelines are built on neural network architectures and have been trained on large text corpora, enabling them to recognize various named entity types, including those we’ve described above.

The model processes the text, tokenizes it, and then identifies and classifies the named entities it predicts.

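In the above example, the model would typically recognize and classify the following named entities. The labels shown are those used by spaCy’s English pipelines, which tag people as PERSON and cities, states, and countries as GPE (geopolitical entity):

- “Elon Musk” as a person (PERSON)

- “SpaceX” as an organization (ORG)

- “2002” as a date (DATE)

- “Hawthorne” as a location (GPE)

- “California” as a location (GPE)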
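The worked example can be reproduced with a few lines of spaCy. This sketch assumes spaCy and the `en_core_web_sm` pipeline are installed; the exact label names and predictions depend on which pipeline is loaded.

```python
def extract_entities(doc):
    """Return (text, label) pairs from a processed spaCy document."""
    return [(ent.text, ent.label_) for ent in doc.ents]


def run_spacy_ner(text, model_name="en_core_web_sm"):
    """Load a pre-trained spaCy pipeline and extract entities from the text."""
    # Imported lazily so extract_entities can be reused without spaCy installed.
    import spacy

    nlp = spacy.load(model_name)  # the pipeline must be downloaded beforehand
    return extract_entities(nlp(text))


statement = (
    "Elon Musk is the CEO of SpaceX, which was founded in 2002 "
    "and is headquartered in Hawthorne, California."
)
# run_spacy_ner(statement) returns a list of (text, label) tuples.
```

Calling `nlp(text)` runs tokenization and entity prediction in one step, and the results are exposed through the document’s `ents` attribute.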

While extracting such entities from a short statement is simple, NER operates at vast scales.

For example, you could analyze thousands of social media webpages, media content from online newspapers, historical articles, etc.

How NER Models Predict Unknown Entities

So, what if the model encounters entities that aren’t present in its training data?

In this case, the model uses the context of the language to predict the presence and type of an entity.

Models can often generalize well to new entities, as they have learned the patterns and contextual clues that signal the presence of an entity and its likely type.

For example, if the model encounters a new company name, it might still recognize it as an organization if the context surrounding the name resembles patterns it has seen during training.

Phrases like “founded in,” “headquartered in,” or “CEO of” can provide contextual clues that help the model infer the correct entity type, even if the specific entity is not present in the training data.
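The role of contextual clues can be imitated with a few explicit patterns. A trained model learns such cues implicitly rather than from hand-written rules, so the sketch below is only an analogy; the patterns and the made-up company names are assumptions for illustration.

```python
import re

# One or more capitalized words, captured as the candidate entity.
NAME = r"([A-Z][\w&-]*(?: [A-Z][\w&-]*)*)"

# Hypothetical cue patterns: the surrounding words suggest the type,
# even when the name itself has never been seen before.
CUES = [
    (re.compile(r"CEO of " + NAME), "ORG"),
    (re.compile(NAME + r",? (?:was |is )?(?:founded|headquartered) in"), "ORG"),
    (re.compile(r"headquartered in " + NAME), "LOC"),
]


def infer_from_context(text):
    """Guess entity types purely from the words around each candidate."""
    guesses = {}
    for pattern, label in CUES:
        for match in pattern.finditer(text):
            # Keep the first guess made for each candidate name.
            guesses.setdefault(match.group(1), label)
    return guesses


guesses = infer_from_context("Zorblax Labs is headquartered in Tamale.")
```

Here “Zorblax Labs” is an invented name, yet the phrase that follows it is enough to suggest an organization.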

However, NER models, like any machine learning models, are imperfect and can make mistakes when encountering unseen entities or ambiguous contexts.

Popular NER Tools and Libraries

There are various NER tools and libraries available that provide pre-trained models and APIs for NER tasks.

Some of the popular NER tools and libraries are:

NLTK

The Natural Language Toolkit (NLTK) is a widely used Python library for NLP tasks, including NER. NLTK provides various modules and algorithms for tokenization, POS tagging, chunking, and NER.

NLTK’s built-in NER chunker uses a statistical model based on a maximum entropy (MaxEnt) classifier to extract named entities from text data.
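A minimal NLTK sketch follows. It assumes NLTK is installed and that the tokenizer, tagger, and chunker data packages have already been fetched with `nltk.download`; the package names vary between NLTK versions, so they are not listed here.

```python
def tree_to_entities(tree):
    """Collect (text, label) pairs from a chunked sentence tree."""
    entities = []
    for node in tree:
        # Named-entity chunks are subtrees; ordinary tokens are (word, tag) tuples.
        if hasattr(node, "label"):
            text = " ".join(word for word, _tag in node.leaves())
            entities.append((text, node.label()))
    return entities


def nltk_ner(text):
    """Tokenize, POS-tag, and chunk the text, then return its named entities."""
    # Imported lazily so tree_to_entities can be used without NLTK installed.
    import nltk

    tokens = nltk.word_tokenize(text)
    tagged = nltk.pos_tag(tokens)
    return tree_to_entities(nltk.ne_chunk(tagged))
```

The three calls mirror the pipeline described above: tokenization, POS tagging, and then chunking into named entities.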

spaCy

spaCy is a Python library for industrial-strength NLP tasks, including NER. It provides pre-trained models for multiple languages and domains, along with customizable pipelines for tokenization, POS tagging, dependency parsing, and NER.

spaCy’s NER models use deep learning architectures based on CNN and Transformer models to extract named entities from text data.

AllenNLP

AllenNLP is a Python library for deep learning NLP tasks, including NER. AllenNLP provides pre-trained models and customizable pipelines for tokenization, POS tagging, dependency parsing, and NER.

AllenNLP’s NER models use deep learning architectures based on Transformer models to extract named entities from text data.

Summary: What Is Named Entity Recognition in NLP?

NER is a critical subfield of NLP that involves identifying and extracting named entities from unstructured text data.

By processing and understanding entities, software systems gain knowledge of the meaning and context of names, whether that’s a person’s name, an organization, or a date or time.

NER can be achieved using rule-based, statistical, or deep learning methods, with the latter two methods involving machine learning.

Deep learning NER helps predict entities even when the language or context is unclear or ambiguous.

Named Entity Recognition FAQ

What is Named Entity Recognition, and why is it important in NLP?

Named entity recognition (NER) involves identifying and classifying named entities from unstructured text, such as names of people, organizations, locations, dates, and other relevant categories.

NER is essential in NLP as it helps extract valuable information from text, enabling various applications such as information extraction, document indexing, and question-answering systems.

How does NER differ from other NLP techniques, such as sentiment analysis or topic modeling?

NER focuses on extracting and classifying named entities from text, whereas sentiment analysis aims to determine the sentiment or emotion expressed in the text. Topic modeling seeks to discover the underlying topics or themes in a collection of documents.

How can machine learning be used to improve the accuracy of NER?

Machine learning can improve the accuracy of NER models by capturing complex patterns and contextual information in the text, which rule-based approaches may not easily capture.

Machine learning models, particularly deep learning models, can learn to recognize entities based on context, even if the specific entity was not present in the training data.

What is NER in NLP using NLTK?

NER or Named Entity Recognition, in NLP using NLTK (Natural Language Toolkit), is the process of identifying and classifying named entities present in text into predefined categories like person, organization, or location.

Which model is best for named entity recognition?

No single model is best for every dataset, but Transformer-based models such as BERT (Bidirectional Encoder Representations from Transformers) have proven to be highly effective for Named Entity Recognition due to their contextual understanding of language.

What is the difference between BERT and NER?

BERT is a language model for understanding context in text, whereas NER is a specific task within NLP where the goal is to identify and classify named entities. BERT can be used to enhance NER performance.

What is POS and NER in NLP?

POS (Part of Speech) tagging identifies the grammatical category of each word (e.g., noun, verb), while NER (Named Entity Recognition) identifies and classifies named entities in text, most of which are proper nouns. Both are fundamental tasks in NLP for understanding language structure and content.
