Video annotation is the process of labeling or tagging objects, events, or actions within video footage so that artificial intelligence (AI) models can learn to recognise and interpret them. It transforms raw, unstructured video data into structured, machine-readable datasets that power computer vision systems.

Unlike static image annotation, video annotation involves frame-by-frame labeling and sometimes tracking objects across sequences, which allows AI models to understand not only what is in a scene, but also how things move, interact, and change over time.

Video annotation has emerged as a critical component for developing AI applications that understand and respond to visual data in motion.

In this article, we will explore the concept of video annotation, its applications, and the challenges of this complex form of data annotation.

What is Video Annotation?

Video annotation is the process of labeling or tagging specific objects, activities, or events within video frames to provide meaning to raw visual motion data.

When supervised models are trained on annotated data, they learn to recognize patterns in the labels and can then make predictions when they encounter new, unannotated video data.

In this sense, it serves the same purpose as data annotation for other computer vision (CV) tasks: it supplies the labeled examples that models learn from.

How It Works

- Frame-by-frame labeling: Each frame of the video (or each selected keyframe) is annotated with bounding boxes, polygons, or segmentation masks.

- Object tracking: Objects are consistently labeled across frames so the model learns motion and trajectory.

- Temporal annotation: Specific events or actions (e.g., a pedestrian crossing or machinery operating) are marked across time.

- Attributes & context: Additional metadata (e.g., object speed, direction, or behavior) can be tagged for richer model training.
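The four ideas above can be captured in a simple record structure. The Python sketch below shows one possible way to store a single video's labels. Boxes are stored per frame, a shared track ID links the same object across frames, events span frame ranges, and attributes are free-form tags. The class and field names (`BoxLabel`, `TemporalEvent`, `VideoAnnotation`) are hypothetical and chosen for illustration. Real annotation tools each define their own export schema.

```python
from dataclasses import dataclass, field

@dataclass
class BoxLabel:
    """One object in one frame (frame-by-frame labeling)."""
    frame: int                                  # frame index within the video
    track_id: int                               # same ID across frames = same object (tracking)
    label: str                                  # class name, e.g. "pedestrian"
    bbox: tuple[float, float, float, float]     # (x_min, y_min, x_max, y_max) in pixels
    attributes: dict[str, str] = field(default_factory=dict)  # e.g. {"direction": "left"}

@dataclass
class TemporalEvent:
    """An event or action spanning a range of frames (temporal annotation)."""
    label: str
    start_frame: int
    end_frame: int                              # inclusive

@dataclass
class VideoAnnotation:
    video_id: str
    fps: float
    boxes: list[BoxLabel] = field(default_factory=list)
    events: list[TemporalEvent] = field(default_factory=list)

    def track(self, track_id: int) -> list[BoxLabel]:
        """Return one object's boxes in frame order, i.e. its trajectory."""
        return sorted((b for b in self.boxes if b.track_id == track_id),
                      key=lambda b: b.frame)

    def events_at(self, frame: int) -> list[str]:
        """Labels of all events that are active at the given frame."""
        return [e.label for e in self.events if e.start_frame <= frame <= e.end_frame]

# Usage: one pedestrian tracked over two frames, plus a crossing event.
ann = VideoAnnotation(video_id="clip_001", fps=30.0)
ann.boxes.append(BoxLabel(1, 7, "pedestrian", (96, 201, 136, 301), {"direction": "left"}))
ann.boxes.append(BoxLabel(0, 7, "pedestrian", (100, 200, 140, 300), {"direction": "left"}))
ann.events.append(TemporalEvent("pedestrian_crossing", 0, 45))
print([b.bbox for b in ann.track(7)])  # [(100, 200, 140, 300), (96, 201, 136, 301)]
print(ann.events_at(10))               # ['pedestrian_crossing']
```

Keeping the track ID on every box, rather than storing whole tracks, means a single frame can be reviewed or corrected on its own.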

Here are the main applications of video annotation and the use cases it supports:

Applications of Video Annotation in AI

Video annotation plays a crucial role in training AI models for a vast range of applications across multiple sectors and industries:

- Autonomous Vehicles: Video annotation helps train self-driving car algorithms to recognize and track objects, such as pedestrians, cyclists, and other vehicles, in real time. Accurate annotations are vital for ensuring the safety and reliability of autonomous vehicles.

- Surveillance and Security: Annotated video data enables AI-powered security systems to detect and track potential threats, monitor crowd behavior, and identify unusual activities. They can do this automatically based on information learned from the training set.

- Healthcare: Video annotation in the medical field can assist in analyzing patient movements, enabling AI models to identify symptoms of conditions such as Parkinson’s disease or assist in rehabilitation. Several AI systems have been designed to detect early signs of neurodegenerative disease by tracking movement.

- Sports Analytics: AI models trained on annotated video data can analyze player performance, identify areas for improvement, and provide real-time feedback to analytics companies, coaches and sports science researchers.

- Media and Entertainment: AI-driven video analysis can automatically generate subtitles, detect predefined categories of content, or identify key scenes in movies and TV shows.

Video annotation is similar to image annotation but vastly more intensive, because video data contains so many frames. A one-minute clip at 30 frames per second already has 1,800 of them.

Here are some of the challenges of video annotation:

Challenges in Video Annotation

Video annotation presents several challenges for the annotation task itself:

- Complexity: Video data is inherently complex due to the vast number of frames, objects, and actions that need annotation. The sheer volume of data makes manual annotation time-consuming and expensive.

- Consistency and Quality: Ensuring consistent and accurate annotations across different frames and annotators can be challenging. Inaccurate or inconsistent annotations can negatively impact the performance of AI models.

- Data Privacy: Annotating video data often involves handling sensitive information, such as faces or personal identifiers. Data privacy and compliance with regulations, such as GDPR, are crucial during the annotation process.

- Scalability: As AI models become more sophisticated and require larger datasets, efficient and scalable video annotation solutions are becoming increasingly important.

Above all, video annotation is labor-intensive. Labeling long, complex videos can require thousands or even millions of labels. Video data is also storage-hungry, which adds significantly to infrastructure and data center costs.
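A back-of-the-envelope calculation shows how quickly these numbers grow. The sketch below assumes a fixed number of boxes on every labeled frame, which real footage rarely has. The function name `estimate_labels` is hypothetical, and the inputs are illustrative rather than measured.

```python
def estimate_labels(duration_s: float, fps: float, objects_per_frame: float,
                    frame_stride: int = 1) -> dict[str, int]:
    """Rough workload estimate for box-level video annotation.

    frame_stride=1 labels every frame; frame_stride=10 labels every 10th frame.
    Assumes the same number of objects appears in every labeled frame.
    """
    total_frames = int(duration_s * fps)
    labeled_frames = -(-total_frames // frame_stride)   # ceiling division
    boxes = int(labeled_frames * objects_per_frame)
    return {"total_frames": total_frames, "labeled_frames": labeled_frames, "boxes": boxes}

# A 10-minute clip at 30 fps with about 20 objects per frame:
print(estimate_labels(600, 30, 20))
# {'total_frames': 18000, 'labeled_frames': 18000, 'boxes': 360000}
print(estimate_labels(600, 30, 20, frame_stride=10))
# {'total_frames': 18000, 'labeled_frames': 1800, 'boxes': 36000}
```

Even this short clip needs hundreds of thousands of boxes if every frame is labeled. This is why labeling only a subset of frames and filling in the rest is common practice.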

Types of ML Models Used in Video Tasks

There are many ML models for video tasks. Here are five of the most popular and widely used:

- 3D Convolutional Neural Networks (3D-CNNs): These extend the standard 2D CNN used for images with a time dimension, so their convolution filters slide across height, width, and time. Instead of analyzing single pictures, the network looks at videos in short chunks of consecutive frames to understand the action across them. 3D-CNNs are well suited to recognizing actions in videos.

- Convolutional LSTM (ConvLSTM): This architecture combines the strengths of two types of neural networks. CNNs are good at understanding the spatial content of single pictures, while LSTMs are good at modeling sequences. A ConvLSTM replaces the matrix multiplications inside an LSTM cell with convolutions, so it can follow how spatial patterns change from frame to frame. This makes it effective at recognizing activities in videos.

- Two-Stream Convolutional Networks: This method uses two separate neural networks. One processes individual frames to capture appearance, and the other processes the motion between frames, usually represented as optical flow. Their outputs are combined to understand both what is in a video and how it moves.

- I3D (Inflated 3D ConvNet): This builds on the 3D-CNN idea. It "inflates" a 2D-CNN designed for images into a 3D one by expanding its filters and pooling kernels into the time dimension. This lets it start from weights pretrained on image datasets while learning both the details in single frames and the action across multiple frames. It is excellent for classifying videos and recognizing sequences of actions.

- Temporal Segment Networks (TSN): This method divides a video into equal segments and samples a single frame or short snippet from each one. Each snippet is processed separately, and the results are then combined into a single video-level prediction. It is suitable for recognizing actions and classifying videos.
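TSN's two distinctive steps can be sketched in a few lines: sampling one frame per segment, and combining the per-snippet predictions. The functions below are an illustrative reimplementation of the idea, not code from the original TSN release. The names `tsn_sample_indices` and `segmental_consensus`, the choice of the middle frame at inference time, and the use of simple averaging (one of several possible consensus functions) are all assumptions.

```python
import random
from typing import Optional

def tsn_sample_indices(num_frames: int, num_segments: int, training: bool = True,
                       rng: Optional[random.Random] = None) -> list[int]:
    """Pick one frame index from each of `num_segments` equal-length segments.

    Training: a random frame inside each segment (acts as data augmentation).
    Inference: the middle frame of each segment, so results are repeatable.
    """
    if num_segments < 1 or num_frames < num_segments:
        raise ValueError("need at least one frame per segment")
    if rng is None:
        rng = random.Random()
    indices = []
    for k in range(num_segments):
        # Integer boundaries: segment k covers frames [start, end).
        start = k * num_frames // num_segments
        end = (k + 1) * num_frames // num_segments
        if training:
            indices.append(rng.randrange(start, end))
        else:
            indices.append((start + end - 1) // 2)
    return indices

def segmental_consensus(snippet_scores: list[list[float]]) -> list[float]:
    """Combine per-snippet class scores into one video-level score by averaging."""
    n = len(snippet_scores)
    return [sum(column) / n for column in zip(*snippet_scores)]

print(tsn_sample_indices(90, 3, training=False))  # [14, 44, 74]
# Scores for 2 classes from 3 snippets, averaged into one prediction:
print(segmental_consensus([[0.9, 0.1], [0.7, 0.3], [0.8, 0.2]]))
```

Because only a few frames per video are processed, this sampling strategy keeps the cost of long videos manageable while still covering the whole clip.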

How To Label Video Data

So, how do you actually go about labeling video data?

Data Preparation

The first step involves selecting and preparing the video dataset that will be annotated.

This includes determining the length of the video, selecting relevant video clips or sequences, and deciding on the annotation techniques to be used based on the specific goals of the AI project.

Frame Extraction

Video data consists of a series of individual frames (images) displayed at a certain frame rate.

Before annotation, the video must be broken down into its constituent frames.

Depending on the requirements of the AI model, it may not be necessary to annotate every frame. Instead, annotators may work with keyframes containing significant changes or information compared to the preceding frame.
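In practice, frames are decoded with a video library such as OpenCV or FFmpeg. The sketch below skips decoding so it can show only the keyframe-selection logic. It assumes each frame is already available as a flat list of grayscale pixel values, and it keeps a frame when its mean absolute difference from the preceding frame exceeds a threshold. The function names, the toy data, and the threshold value are illustrative assumptions.

```python
def mean_abs_diff(a: list[int], b: list[int]) -> float:
    """Average per-pixel absolute difference between two same-sized grayscale frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def select_keyframes(frames: list[list[int]], threshold: float) -> list[int]:
    """Return indices of frames that differ from the preceding frame by more than `threshold`.

    The first frame is always kept so the sequence has a starting reference.
    """
    if not frames:
        return []
    keep = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[i - 1]) > threshold:
            keep.append(i)
    return keep

# Toy 4-pixel "frames": a nearly static scene, then a sudden change at frame 3.
frames = [[10, 10, 10, 10], [11, 10, 10, 10], [11, 10, 10, 10], [200, 180, 10, 10]]
print(select_keyframes(frames, threshold=5.0))  # [0, 3]
```

Because each frame is compared only with its immediate predecessor, a slow pan that changes the scene gradually may never cross the threshold. Comparing against the last selected keyframe instead is a common variation.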

Annotation Techniques

Different annotation techniques can be applied to video frames depending on the desired output and the complexity of the data.

Common annotation techniques for video data include:

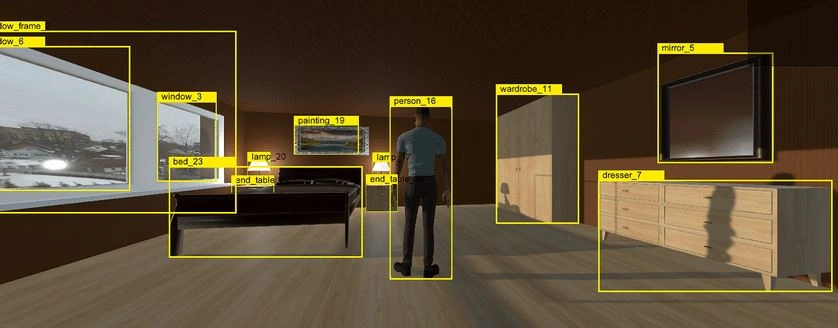

- Bounding boxes: Drawing rectangular boxes around objects of interest within a frame to identify and localize them. This is the simplest form of data labeling.

- Polygonal segmentation: Tracing polygons around objects to capture complex or irregular shapes more accurately than a rectangle can.

- Skeleton/structure/keypoint annotation: Marking key points on a subject, such as a person's joints or body parts, and labeling them to track movement and posture.

- Temporal segmentation: Labeling specific time intervals or segments within a video to identify events or actions.
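A quick calculation shows why polygons matter for irregular shapes: a bounding box also covers background pixels. The sketch below compares a polygon's area, computed with the shoelace formula, against the area of its tightest enclosing box. The triangle is a made-up example, and the helper names are hypothetical.

```python
Point = tuple[float, float]
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def polygon_area(points: list[Point]) -> float:
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]   # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0

def polygon_to_bbox(points: list[Point]) -> Box:
    """Tightest axis-aligned bounding box around a polygon."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)

def bbox_area(box: Box) -> float:
    x_min, y_min, x_max, y_max = box
    return (x_max - x_min) * (y_max - y_min)

# A triangular object, such as a ramp seen side-on.
triangle = [(0, 0), (100, 0), (0, 50)]
box = polygon_to_bbox(triangle)
print(box)                                      # (0, 0, 100, 50)
print(polygon_area(triangle), bbox_area(box))   # 2500.0 5000
print(polygon_area(triangle) / bbox_area(box))  # 0.5
```

Here half of the bounding box is background. A model trained on boxes alone would learn that extra area as part of the object, which is the main reason to use polygons when shape matters.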

Manual or Automated Annotation

The labeling itself can be done manually, with human annotators labeling frames in annotation tools, or automatically, with computer vision algorithms that detect and label objects.

Often, a combination of manual and automated techniques is used to improve accuracy and efficiency. This is particularly important for large quantities of video data, which are exceptionally labor-intensive to label by hand.

Human annotators typically review and refine the automatically generated labels to ensure quality and consistency, acting as a human in the loop.
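Keyframe interpolation is one simple and widely used form of automated assistance. A human draws a box on two keyframes, and the tool fills in the frames between them. The sketch below assumes the object moves at constant speed between the two keyframes, which is exactly why a human still needs to review the result. The function name `interpolate_boxes` is hypothetical.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def interpolate_boxes(frame_a: int, box_a: Box, frame_b: int, box_b: Box) -> dict[int, Box]:
    """Linearly interpolate a box for every frame strictly between two keyframes."""
    if frame_b <= frame_a:
        raise ValueError("frame_b must come after frame_a")
    result = {}
    for f in range(frame_a + 1, frame_b):
        t = (f - frame_a) / (frame_b - frame_a)   # 0 < t < 1
        result[f] = tuple(a + t * (b - a) for a, b in zip(box_a, box_b))
    return result

# A human labels frames 0 and 4; frames 1-3 are filled in automatically.
filled = interpolate_boxes(0, (100, 50, 140, 130), 4, (120, 50, 160, 130))
for frame, box in filled.items():
    print(frame, box)
# 1 (105.0, 50.0, 145.0, 130.0)
# 2 (110.0, 50.0, 150.0, 130.0)
# 3 (115.0, 50.0, 155.0, 130.0)
```

If the object speeds up, turns, or is briefly occluded, the interpolated boxes drift. The reviewer then adds another keyframe at the point of divergence and re-interpolates.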

Quality Assurance

Once annotation is complete, quality assurance (QA) checks are essential to ensure the labels are accurate, consistent, and meet the project requirements.

This step may involve reviewing a sample of the annotated data, checking for errors or inconsistencies, and providing feedback to annotators for subsequent label batches.
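Intersection over union (IoU) is a common way to check box consistency. It divides the overlapping area of two boxes by their combined area. The sketch below compares two annotators' boxes for the same object frame by frame and flags frames for review. The 0.7 threshold and the function names are illustrative assumptions, not an industry standard.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))   # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))   # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def flag_disagreements(labels_a: dict[int, Box], labels_b: dict[int, Box],
                       threshold: float = 0.7) -> list[int]:
    """Frames where two annotators' boxes for one object overlap less than `threshold`.

    A frame labeled by only one of the two annotators is also flagged.
    """
    frames = sorted(set(labels_a) | set(labels_b))
    return [f for f in frames
            if f not in labels_a or f not in labels_b
            or iou(labels_a[f], labels_b[f]) < threshold]

annotator_1 = {0: (0, 0, 10, 10), 1: (0, 0, 10, 10), 2: (0, 0, 10, 10)}
annotator_2 = {0: (0, 0, 10, 10), 1: (5, 0, 15, 10)}
print(flag_disagreements(annotator_1, annotator_2))  # [1, 2]
```

Frame 1 is flagged because the boxes only half overlap (IoU of about 0.33). Frame 2 is flagged because the second annotator missed the object entirely. Both are the kind of feedback that can be passed back to annotators for the next batch.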

Data Aggregation

Once the labeling process is complete and quality checks have been performed, the annotated video data is aggregated and formatted for feeding into supervised ML models.

This may involve combining the annotated frames back into a video sequence or converting the annotations into a format that machine learning frameworks can easily ingest.
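COCO-style JSON is one widely used format of this kind. Each image gets an entry in `images`, each box gets an entry in `annotations`, and boxes are stored as `[x, y, width, height]`. The sketch below treats every labeled frame as a COCO image. The function name `to_coco`, the input dict layout, the file-name pattern, and the extra `track_id` field (which plain COCO does not define) are assumptions for illustration.

```python
import json

def to_coco(video_id: str, width: int, height: int, boxes: list[dict]) -> str:
    """Convert per-frame boxes to a COCO-style JSON string, one COCO "image" per frame.

    Each input box is a dict with keys: frame, track_id, label,
    bbox=(x_min, y_min, x_max, y_max).
    """
    cat_ids = {name: i + 1 for i, name in enumerate(sorted({b["label"] for b in boxes}))}
    frames = sorted({b["frame"] for b in boxes})
    images = [{"id": f + 1, "file_name": f"{video_id}_{f:06d}.jpg",
               "width": width, "height": height} for f in frames]
    annotations = []
    for ann_id, b in enumerate(boxes, start=1):
        x_min, y_min, x_max, y_max = b["bbox"]
        w, h = x_max - x_min, y_max - y_min
        annotations.append({
            "id": ann_id,
            "image_id": b["frame"] + 1,
            "category_id": cat_ids[b["label"]],
            "bbox": [x_min, y_min, w, h],   # COCO uses [x, y, width, height]
            "area": w * h,
            "iscrowd": 0,
            "track_id": b["track_id"],      # extra field, not part of base COCO
        })
    categories = [{"id": i, "name": name} for name, i in cat_ids.items()]
    return json.dumps({"images": images, "annotations": annotations,
                       "categories": categories}, indent=2)

coco = json.loads(to_coco("clip_001", 1920, 1080, [
    {"frame": 0, "track_id": 7, "label": "pedestrian", "bbox": (100, 200, 140, 300)},
    {"frame": 1, "track_id": 7, "label": "pedestrian", "bbox": (96, 201, 136, 301)},
]))
print(coco["annotations"][0]["bbox"])  # [100, 200, 40, 100]
```

Converting corner coordinates to width and height at export time lets the annotation tool keep whichever box representation is most convenient internally.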

Examples of Video Annotation and the AIs It Supports

Video annotation is a critical aspect of training cutting-edge AI models.

It’s used for everything from training driverless vehicles to augmented and virtual reality and robotics, where robots are trained to understand complex sequences, an ability humans take for granted.

Here are a few interesting case studies where video annotation has been effectively used for real-life AI applications.

Autonomous Vehicles: Waymo

Waymo is one of the leading companies in the development of self-driving cars. Their AI system relies heavily on annotated video data to understand and navigate real-world driving scenarios.

In one of their projects, they used video annotation to label objects such as other vehicles, pedestrians, cyclists, and road signs in their training data.

This data was then used to train their AI models to recognize these objects in real-time while driving. The quality and accuracy of these video annotations are critical to the safety and reliability of their self-driving technology.

Sports Analytics: Second Spectrum

Second Spectrum is an AI company that uses machine learning and computer vision to provide advanced sports analytics.

They use video annotation to train their AI models to track players, understand game strategies, and provide real-time insights during games.

In a partnership with the National Basketball Association (NBA), Second Spectrum annotated thousands of hours of game footage, labeling player movements, ball possession, and game events.

The annotated data was used to train their AI system, which now provides real-time analytics during NBA games.

Security Systems: Deep Sentinel

Deep Sentinel is a company that offers AI-powered home security systems. They use video annotation to train their AI to detect potential security threats accurately.

In a case study shared by the company, they annotated various video feeds to label potential threat scenarios like break-ins, vandalism, or suspicious behavior.

The annotated videos were then used to train their AI system, which can accurately differentiate between non-threatening activities (like a cat wandering into the yard) and actual security threats.

These examples are only a small selection of video annotation’s uses across computer vision and AI/ML as a whole.

Summary: What is Video Annotation for AI?

Video annotation for AI refers to the process of labeling or tagging elements within video data. This can involve marking out objects, people, actions, or events frame by frame and assigning them relevant labels or categories. The goal is to create a rich dataset that can be used to train AI models, specifically in tasks like object detection, activity recognition, and video classification.

Video annotation is demanding work, and it supports a vast range of AI models across numerous industries and sectors.

Contact us to discuss how we can optimise your video annotation for machine learning to meet this year’s industry demands. For more insights, explore our blog on video annotation service providers.

Frequently Asked Questions

How do you annotate video data for machine learning?

Video data is annotated frame by frame using bounding boxes, polygons, semantic segmentation, or keypoints. Advanced tools also track objects across sequences to capture motion and context for training AI models.

Why is video annotation important for machine learning?

It transforms raw video into structured datasets, helping AI models learn to detect objects, understand activities, and make predictions in dynamic environments like autonomous driving, robotics, and surveillance.

How is video annotation used in the security industry?

In security and surveillance industries, annotated video trains AI systems to detect suspicious activity, recognise unauthorised access, and monitor environments in real time.

What are generative AI video labeling services?

These services use generative AI tools to assist human annotators by automatically suggesting or pre-labeling video frames, reducing time and cost while maintaining accuracy.

How is video annotation applied in the insurance sector?

Insurance firms use annotated video for claims assessment (e.g., accident footage analysis) and risk monitoring, helping automate and improve decision-making.

What is video annotation for computer vision?

It provides the training data that video-based computer vision systems are built on, enabling machines to identify objects, track movements, and interpret events in video streams.

Can video annotation be applied in financial services?

Yes. In financial services, it is used for fraud detection, monitoring branch or ATM activity, and ensuring regulatory compliance through AI-driven video analysis.

What does “annotation in YouTube videos” mean?

This usually refers to adding notes, highlights, or interactive elements within YouTube videos. That is different from annotation for AI training, but it is still a form of annotation.